Gemini 3 moves us one step closer to a whole new era of computing

As soon as I saw this demo from Google, it was clear that this is where we’re heading.

This is going to completely transform the entire app ecosystem — even our understanding of what an app is will change forever.

For decades computing has meant navigating fixed apps — interfaces and workflows designed long before you touch them.

Even AI has mostly lived inside that static world, adding convenience but not changing how software fundamentally works.

Gemini 3 completely changes the scale of the conversation.

Gemini 3 isn’t just better at reasoning or writing. It’s a glimpse of a future where interfaces, tools, and workflows are generated on demand, shaped directly by your intent.

Apps become temporary, the OS becomes fluid, and the interface becomes something that adapts to you rather than the other way around.

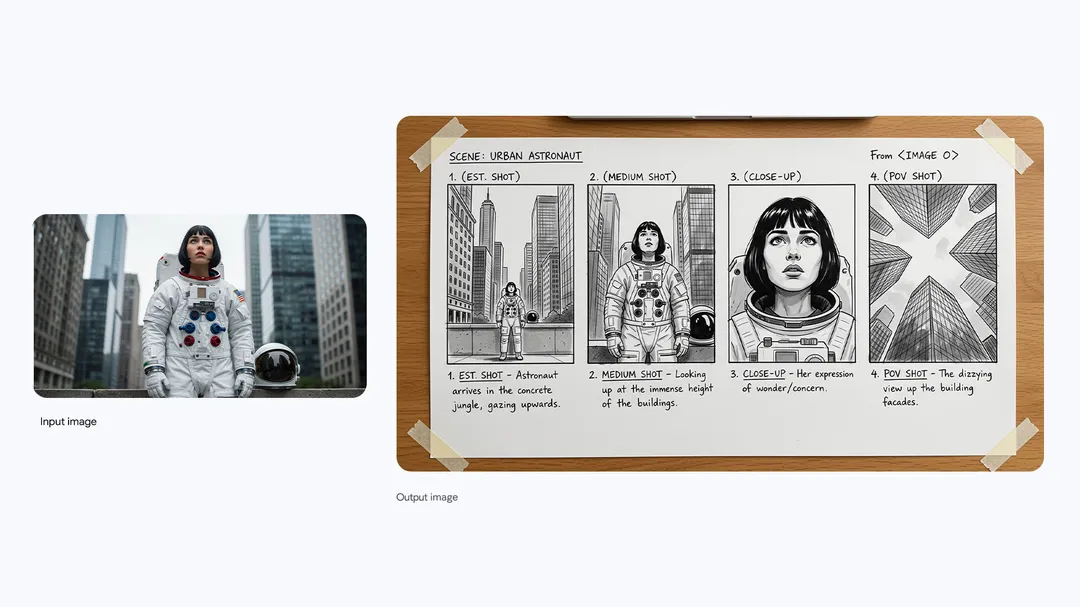

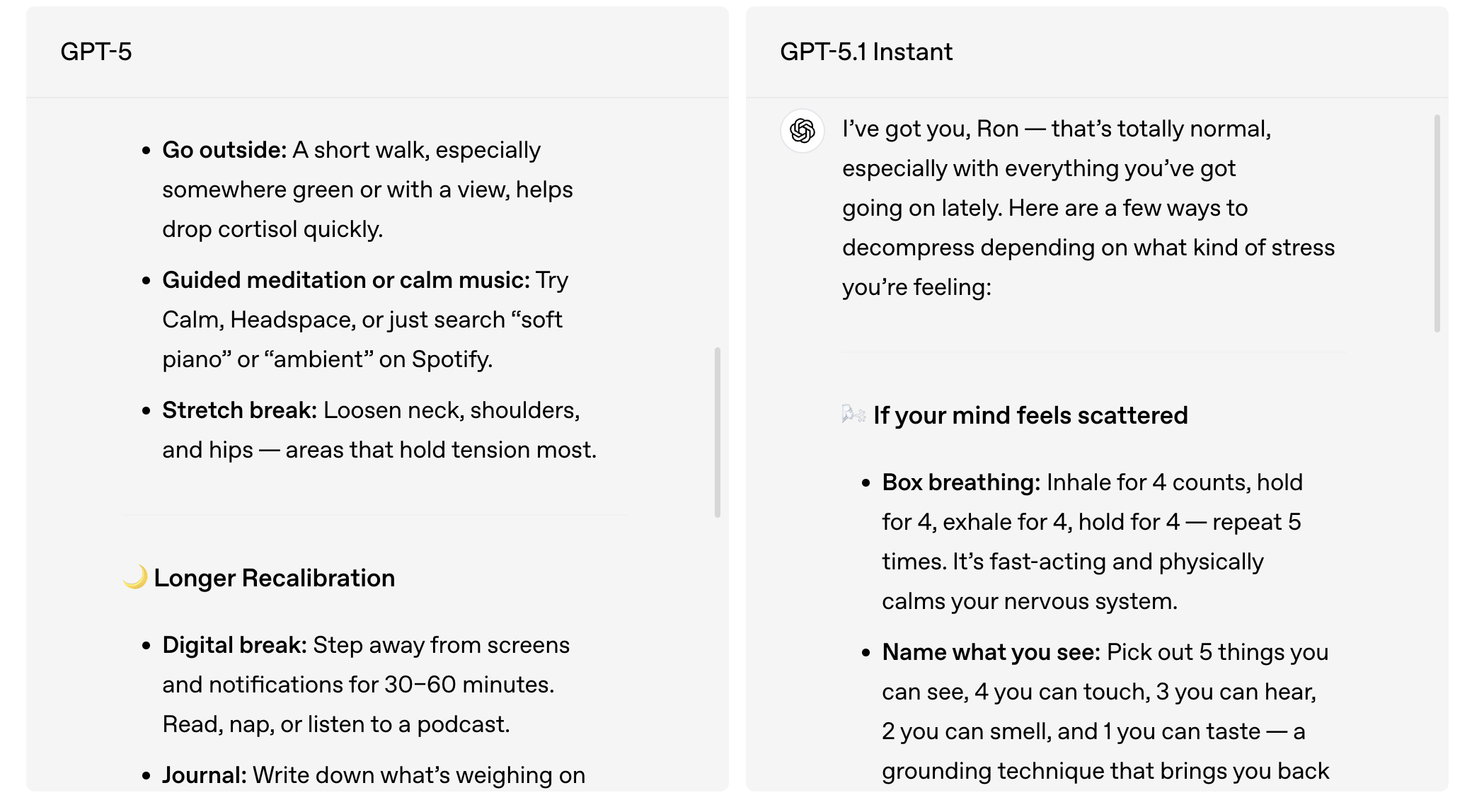

From answers to rich, generated experiences

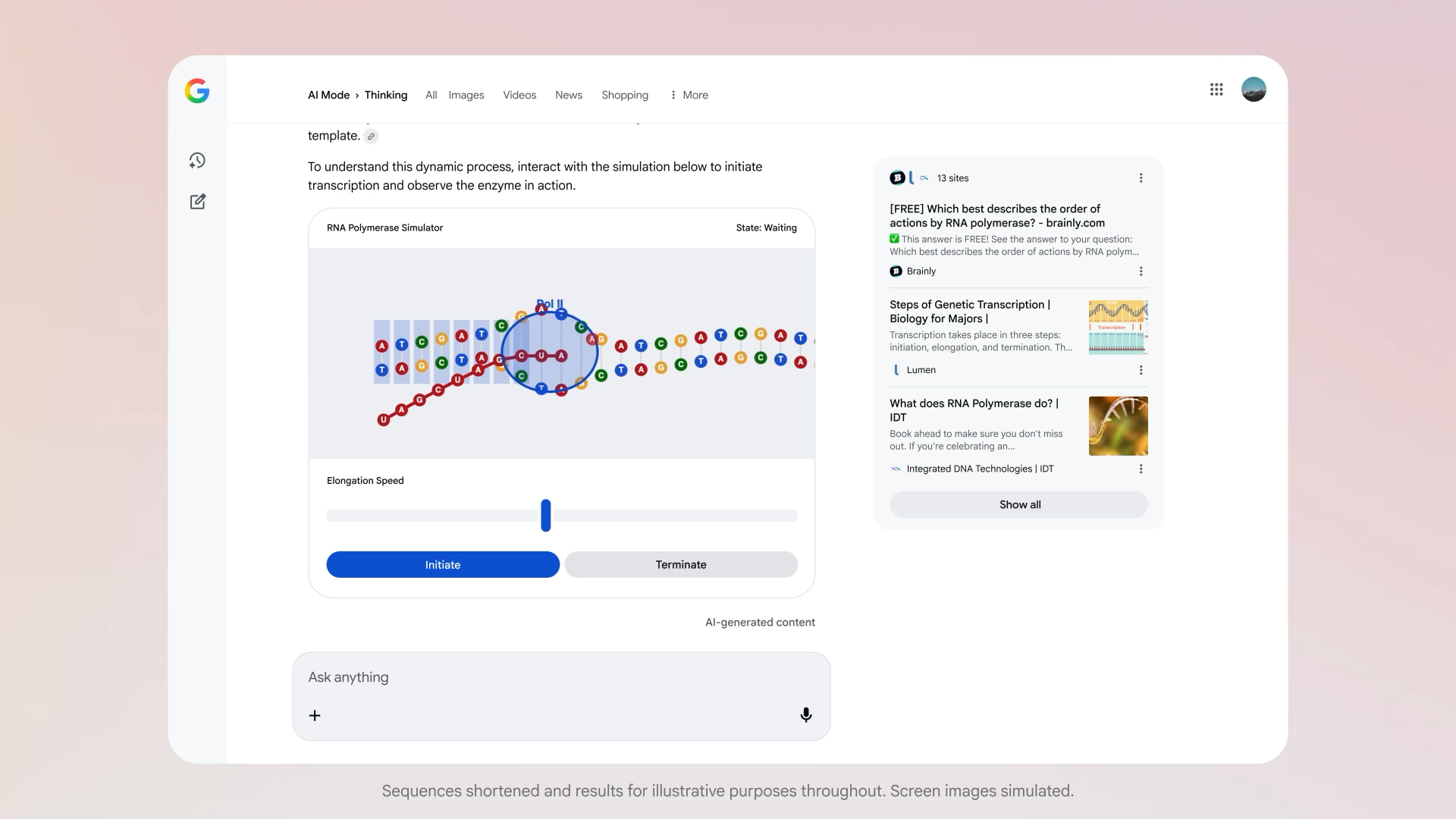

Google’s Generative UI is the clearest evidence of this shift.

Instead of just paragraphs of text, Gemini 3 can produce interactive experiences: visual layouts, tiny applications, simulations, dashboards, or structured learning surfaces generated in real time.

Explain photosynthesis? It builds an interactive explainer.

Plan a trip? It assembles a planning interface.

Learn a topic? It generates practice tools tailored to your level.

All of the examples shown here came straight from Gemini 3: real interactive apps generated on the fly.

Look at just the photo on the left: you get fashion recommendations in a neatly organized, interactive layout, and all the images of you are generated on the fly too.

These are not pre-built widgets living somewhere in a menu. The UI is synthesized by the model. The response is both the content and the container.

It’s no longer “the model answers the question,” but “the model builds the interface that best answers it.”

The early shape of a post-app world

Traditional apps force you to adapt to their structure. With Gemini 3, the logic flips:

- You declare your intent.

- The model interprets it.

- It generates the tool or interface needed for that moment.

When the problem ends, the interface disappears. The next task brings a new one.
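To make that lifecycle concrete, here is a minimal Python sketch of the three steps above. It is a toy under stated assumptions, not how Gemini 3 works internally: the `Interface` class, the `interpret` function (a keyword lookup standing in for the model), and the widget names are all hypothetical.

```python
from contextlib import contextmanager
from dataclasses import dataclass, field


@dataclass
class Interface:
    """A task-specific UI description. Every field here is illustrative."""
    intent: str
    widgets: list[str] = field(default_factory=list)
    active: bool = True


def interpret(intent: str) -> list[str]:
    """Stand-in for the model: map an intent to widget names via keyword rules."""
    text = intent.lower()
    if "trip" in text:
        return ["map", "itinerary", "budget_slider"]
    if "explain" in text or "learn" in text:
        return ["diagram", "quiz"]
    return ["text_answer"]


@contextmanager
def ephemeral_interface(intent: str):
    """Build an interface for one task and discard it when the task ends."""
    ui = Interface(intent=intent, widgets=interpret(intent))
    try:
        yield ui
    finally:
        # The interface "disappears" once the problem is solved.
        ui.active = False


with ephemeral_interface("Plan a trip to Lisbon") as ui:
    print(ui.widgets)
```

The context manager is the point of the sketch: the interface exists only for the duration of the task, and the next intent gets a freshly built one.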

The fundamental question of computing changes from:

“Which app should I open?”

to

“What do I want to do?”

The model handles the rest.

Gemini 3 as the system’s interface brain

Generative UI works because Gemini 3 sits at the center of a larger architecture:

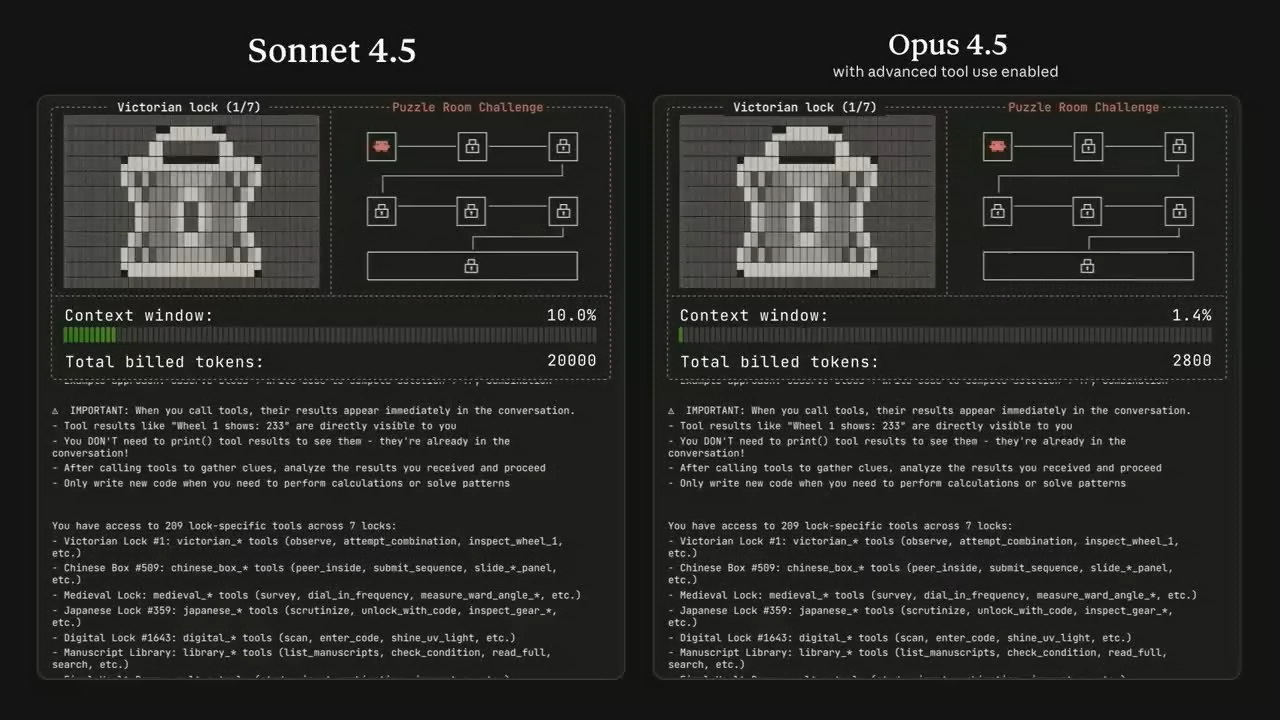

- Gemini 3 Pro for reasoning

- agents for multi-step actions and tool use

- a UI-generation system for layouts, logic, and interactions

- post-processing to keep everything consistent

You see this across Google’s products: Search’s AI Mode, the Gemini app’s dynamic views, agentic actions across Workspace. Gemini 3 isn’t just one more model—it’s becoming the runtime brain of the Google ecosystem.
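One way to picture the four layers listed above is as a chain of stages that each enrich a shared draft. The sketch below is purely illustrative: the stage functions, the `Draft` fields, and the stubbed data are assumptions made for this example, and Google has not published its pipeline in this form.

```python
from dataclasses import dataclass, field


@dataclass
class Draft:
    """Shared state passed from stage to stage (hypothetical structure)."""
    goal: str
    plan: list[str] = field(default_factory=list)
    data: dict[str, str] = field(default_factory=dict)
    layout: list[dict[str, str]] = field(default_factory=list)


def reason(draft: Draft) -> Draft:
    """Reasoning stage: break the goal into steps (stubbed)."""
    draft.plan = [f"gather:{draft.goal}", f"present:{draft.goal}"]
    return draft


def run_agents(draft: Draft) -> Draft:
    """Agent stage: execute the 'gather' steps with a stubbed tool call."""
    for step in draft.plan:
        if step.startswith("gather:"):
            draft.data[step] = "stub result"
    return draft


def generate_ui(draft: Draft) -> Draft:
    """UI stage: turn gathered data into layout nodes."""
    draft.layout = [
        {"type": "Card", "title": key, "body": value}
        for key, value in draft.data.items()
    ]
    # Deliberately inconsistent casing, so post-processing has work to do.
    draft.layout.append({"type": "card", "title": "Summary", "body": draft.goal})
    return draft


def post_process(draft: Draft) -> Draft:
    """Consistency stage: normalize component names across the layout."""
    for node in draft.layout:
        node["type"] = node["type"].capitalize()
    return draft


def run_pipeline(goal: str) -> Draft:
    draft = Draft(goal=goal)
    for stage in (reason, run_agents, generate_ui, post_process):
        draft = stage(draft)
    return draft


result = run_pipeline("Plan my week")
print([node["type"] for node in result.layout])
```

Keeping post-processing as its own stage reflects the list above: generation is allowed to be messy, and consistency is enforced afterwards.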

In a traditional stack, the OS mediates between users and apps.

In an AI-native stack, the model mediates between users and computing itself.

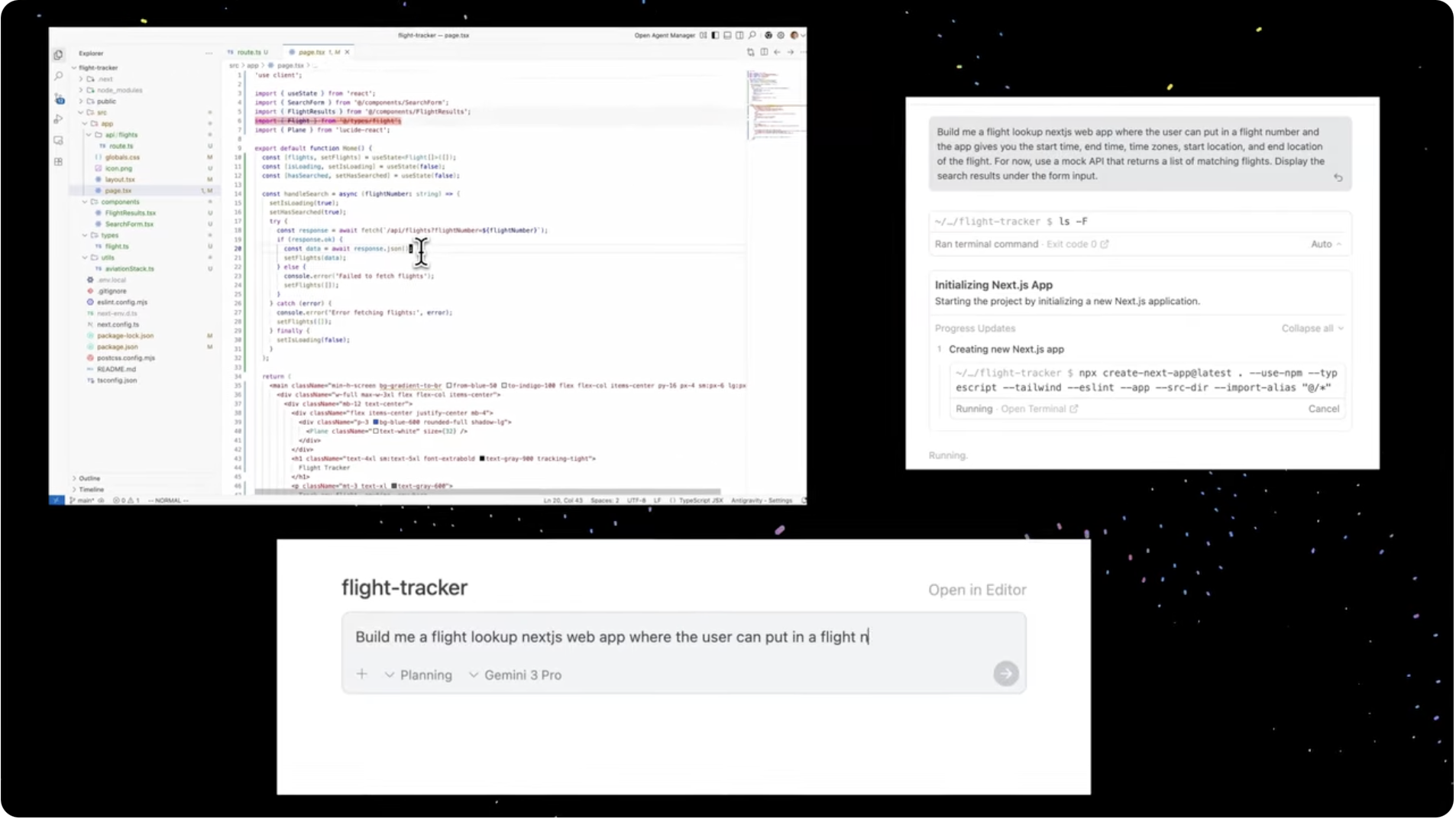

Developers join the ecosystem

The GenUI SDK for Flutter brings this paradigm to third-party apps. Developers provide:

- a component set

- brand rules

- allowed interactions

- capability constraints

The model assembles a fresh UI each time based on that foundation.

This makes generative interfaces infrastructure, not a Google-only demo. The post-app world becomes something any developer can build into.
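The developer-side contract can be sketched as a catalog that every model-proposed UI is checked against. This is not the GenUI SDK's API (the SDK targets Flutter, and this example is in Python for brevity); the `Catalog` class, the `validate` function, and the node format are hypothetical and only show the idea of constraining generation to an approved foundation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Catalog:
    """What the developer provides (all names here are illustrative)."""
    components: frozenset[str]    # the component set
    brand_colors: frozenset[str]  # a stand-in for brand rules
    interactions: frozenset[str]  # allowed interactions
    max_nodes: int                # a simple capability constraint


def validate(tree: list[dict], catalog: Catalog) -> list[str]:
    """Return the problems found in a model-proposed UI tree."""
    errors = []
    if len(tree) > catalog.max_nodes:
        errors.append(f"too many nodes: {len(tree)} > {catalog.max_nodes}")
    for i, node in enumerate(tree):
        if node.get("type") not in catalog.components:
            errors.append(f"node {i}: unknown component {node.get('type')!r}")
        color = node.get("color")
        if color is not None and color not in catalog.brand_colors:
            errors.append(f"node {i}: off-brand color {color!r}")
        action = node.get("on_tap")
        if action is not None and action not in catalog.interactions:
            errors.append(f"node {i}: interaction {action!r} not allowed")
    return errors


catalog = Catalog(
    components=frozenset({"Card", "Button", "Chart"}),
    brand_colors=frozenset({"#0B57D0", "#FFFFFF"}),
    interactions=frozenset({"open_details", "add_to_cart"}),
    max_nodes=10,
)

proposed = [
    {"type": "Card", "color": "#0B57D0"},
    {"type": "Button", "on_tap": "delete_account"},
    {"type": "Carousel"},
]

for problem in validate(proposed, catalog):
    print(problem)
```

In a design like this, the model stays free to compose a fresh layout each time, while anything outside the catalog is rejected before it reaches the user.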

Agents + Generative UI = dynamic workflows

Combine enhanced agent capabilities with on-demand UI generation and you get something new:

- You state a goal (“Plan my week,” “Study thermodynamics visually,” “Find important emails”).

- Agents gather data and execute steps.

- Generative UI creates the interface to explore or modify the result.

The workflow shapes itself around your goal.

Agents handle the operations.

UI adapts itself in real time.

Rigid, pre-defined app workflows start to dissolve.

Why this is a new computing ecosystem

Five structural shifts make this more than a feature upgrade:

- Intent becomes the primary interface.

- Interfaces become ephemeral and task-specific.

- The OS–app–assistant boundaries blur.

- Developers shift from screen-makers to capability providers.

- Platforms compete at the AI-runtime layer.

This is ecosystem-level change.

Early, imperfect—and unmistakably the future

Latency, glitches, and rough edges are real. But paradigm shifts always start messy.

Gemini 3 doesn’t complete the transformation, but it clearly reveals it: a computing ecosystem where UI, logic, and workflow grow out of your intent. A computer that reorganizes itself around what you want isn’t an upgrade.

It’s the start of a new age of computing.