AI is destroying the entire app industry

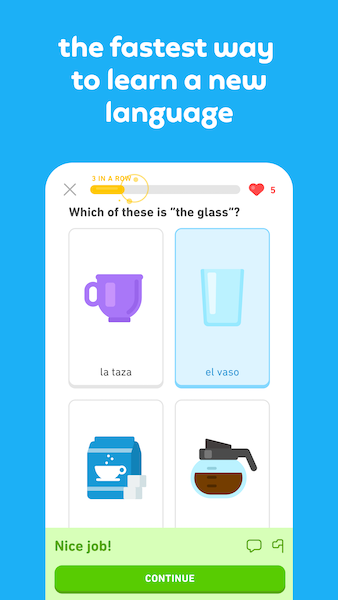

The writing is on the wall… Microsoft’s CEO isn’t even hiding it.

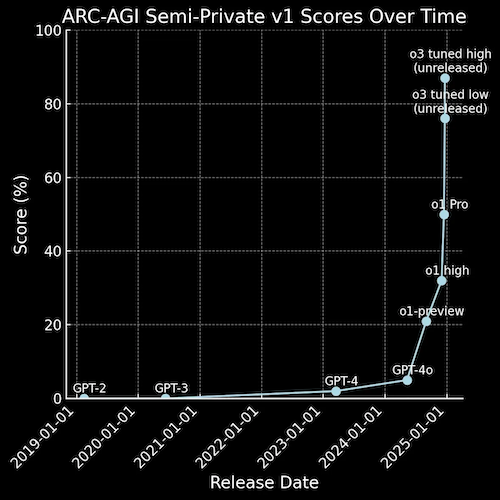

Autonomous AI agents are going to destroy the app industry.

Many apps will become practically useless: agents will do everything those apps can do, in a far more personalized way.

Lol, stfu and stop this rubbish Tari. What is wrong with you? It’s just stupid AI hype for goodness sake, smh.

Oh really, you sure about that?

Because it’s already happening right now.

The basic generic-info apps/websites are already being destroyed.

The ones that do nothing more than display generic information — like most of those question-answering websites.

In the past, how did you get answers to general questions about life and the world?

Typically you searched on Google. Then you clicked on one of the blue links to a website that gave you the information.

I used to write articles for exactly this — answering coding questions like “javascript convert array to map”.

But now how do most people get answers for general knowledge like this?

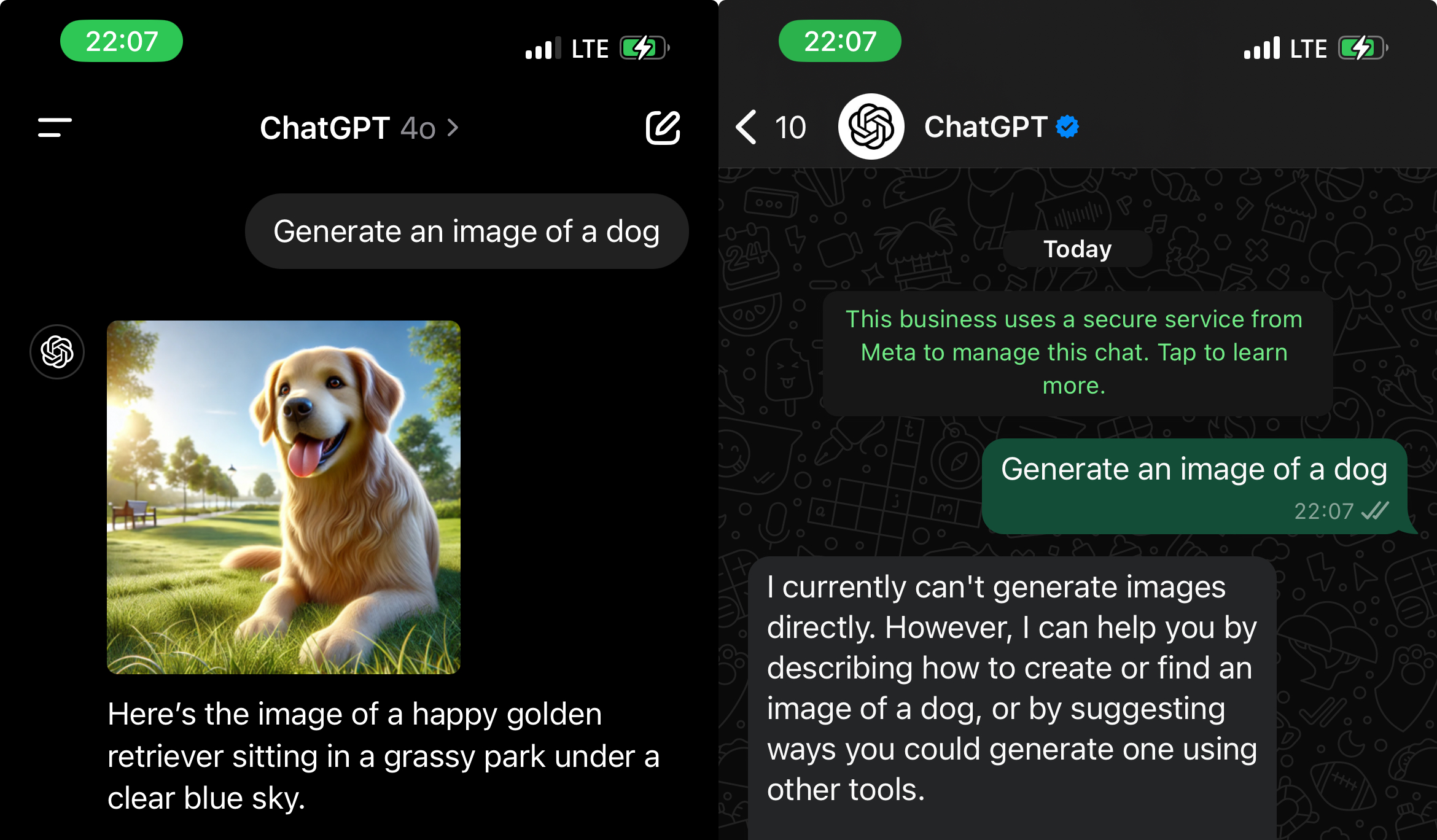

ChatGPT. Gemini. Gemini in Google Search… conversational AI.

It was painful for me to admit this once 😅 — but the fact is there is no contest here whatsoever between these websites and the AIs.

❌ Before:

A generic, soulless bunch of predefined text littered with ads and pop-ups. So much painful bloat to meet a word count and target certain keywords.

✅ Now:

A personalized AI that gives you the exact answer to your exact question, and lets you follow up with even more precise and context-specific questions.

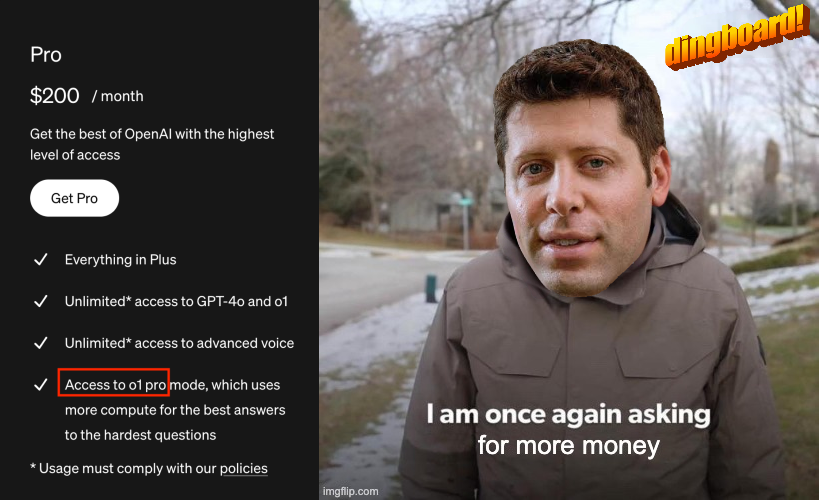

This same thing is going to happen to mobile and web apps.

Think of an app like Duolingo.

❌ Before:

A predefined interface with a predefined flow and a predefined set of actions.

You can only learn a language the way the devs think is best, with the built-in actions they let you take, using the specific UI components they chose, on the specific screens they created for them.

✅ After:

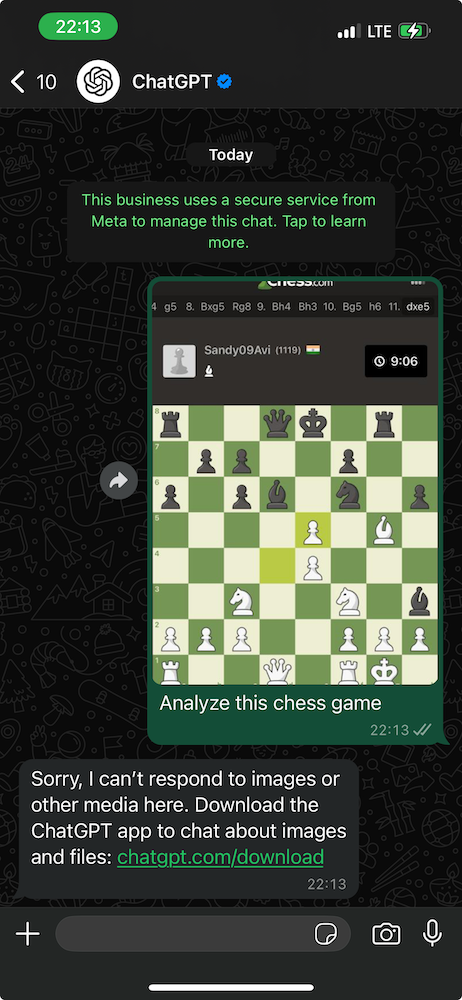

An ultra-personalized interface created by an AI agent connected to several APIs and tools.

Look, you could just say “teach me a language” to your agent.

It will automatically remember that convo you guys had about those languages you’ve always wanted to learn.

It will spin up a screen (from thin air) with image buttons (from thin air) to let you choose between all those languages. Zero code.

When you choose French it’ll remember you’re already at B1 level and craft a learning plan just for you.

No more Duolingo stories. Now you get freshly generated stories on a topic you enjoy, at the precise vocab level you need to improve.

It’ll ask you questions about those stories and let you respond with free text, voice, or multiple choice between images.

It could create an interactive video chat with an AI-generated coach to practice your speaking and listening.

With virtual reality and AI video creation you could even simulate immersing yourself in France and speaking with the locals — and now you’d be getting feedback on whatever you say.

It will automatically set phone and email reminders to help you stick with your plan — and adjust this plan according to your changing schedule.

Now tell me, how could Duolingo or Babbel or whatever possibly compete against this?

Even if apps don’t die, they will become far easier to make than they are right now.

The economic value in app development will drop drastically to almost nothing. Creating an app will become as easy as creating a Medium article.

We’re already seeing early glimpses of agentic capabilities with tools like Windsurf and Cursor Composer — the tide is moving in only one direction.

It will no longer be about what cool features your app has — like when people compare Notion to Apple Notes to Google Keep to Evernote to whatever.

It will be about the data.

What unique data does your app provide that no one else has?

Apps like Tinder and Spotify are not going to die so easily.

Anyone will be able to create a Tinder clone in 3 minutes with much cooler features — but it will be useless.

Only Tinder will have the data on all those women and men. Only Spotify and the rest will have the licenses for all those songs.

The same thing is already happening with YouTube — the value of being able to create and edit a video is dying. We got Invideo, we got ElevenLabs, now we’ve got Veo 2 and there’s no going back.

All the value is moving to the unique information or experience the video can provide.

For apps, the value will also be in what they can do in the physical world.

Uber and Lyft are not going anywhere no matter how powerful AI agents get. Their main value is the ride-sharing, not fancy app features.

It’s happening, folks. A major value transfer is on its way and we’re just going to have to adapt.