This AI dev tool from Vercel is monstrously good

I made a huge mistake ignoring this unbelievable tool for so long.

Vercel’s v0 is completely insane… This is a UI generator on crazy steroids.

Imagine creating a fully functioning web app from nothing but vague ideas, mockups, and screenshots.

Not even a single atom of code has to be written by hand.

Traditional UI generators stop at turning a UI into dead, boring code snippets.

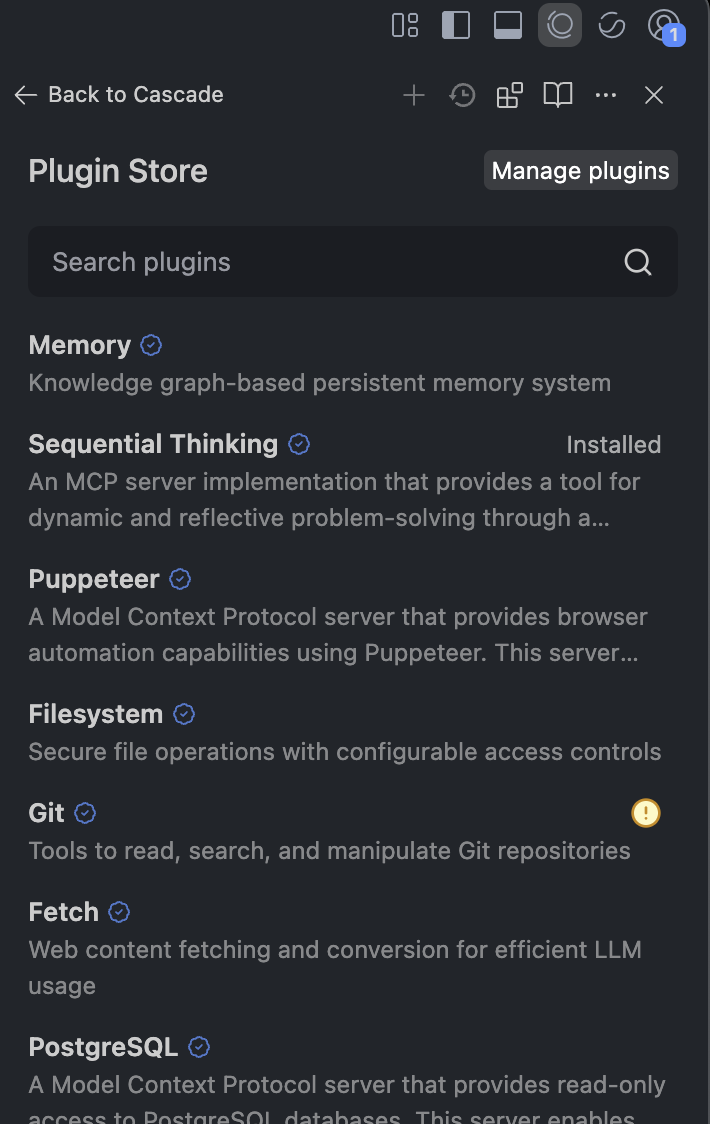

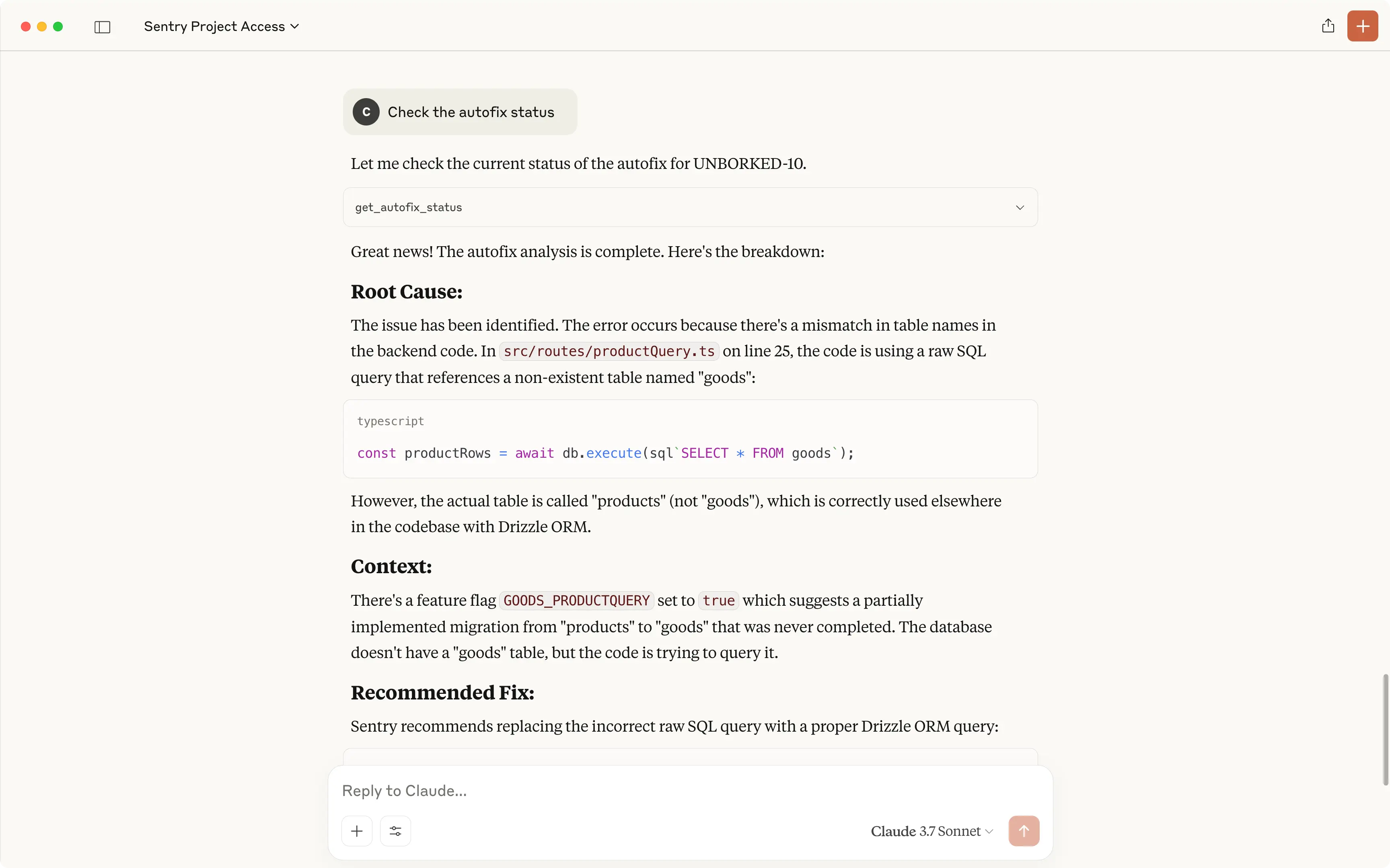

v0 acts like an agent: it plans steps, fetches data, inspects pages, fixes missing dependencies or runtime errors, and can even hook into GitHub and deploy straight to Vercel.

You see everything it’s doing — you can pause or tweak anything at any time.

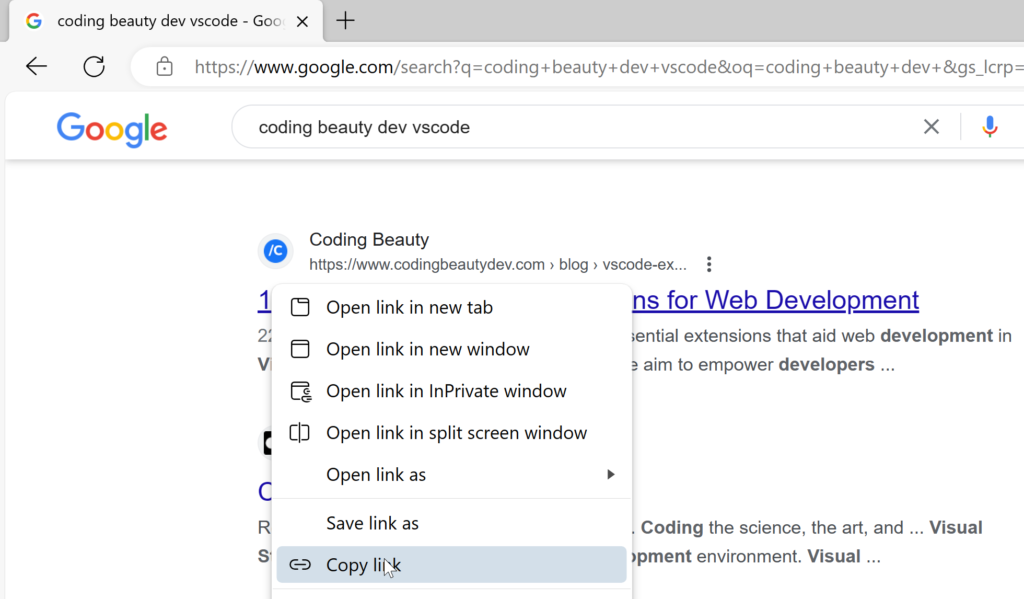

One of the best selling points is how easily you can share any design with anyone.

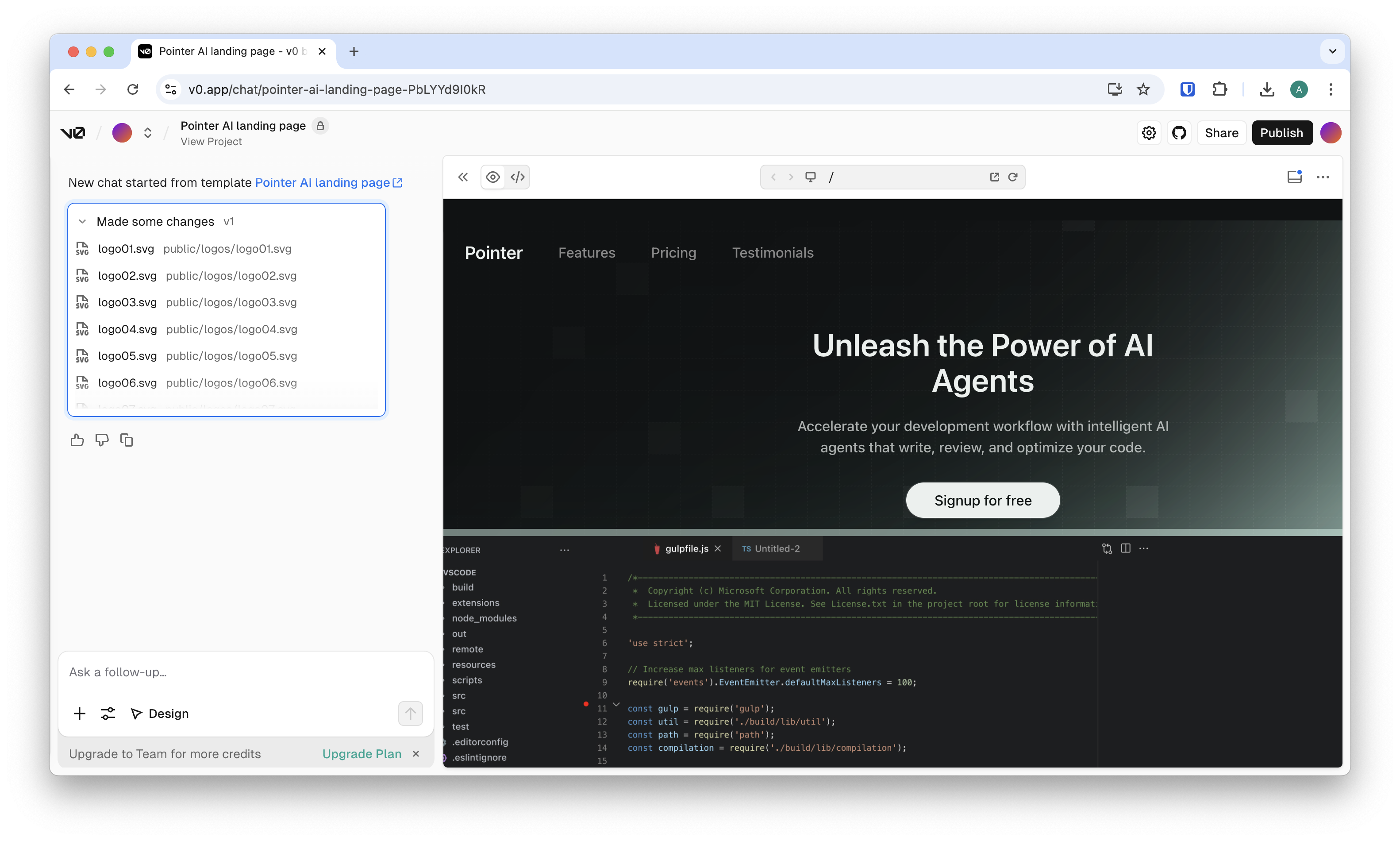

There are a ridiculous number of freely available templates from the community that anyone can use and modify.

It’s like GitHub for web apps and UI designs.

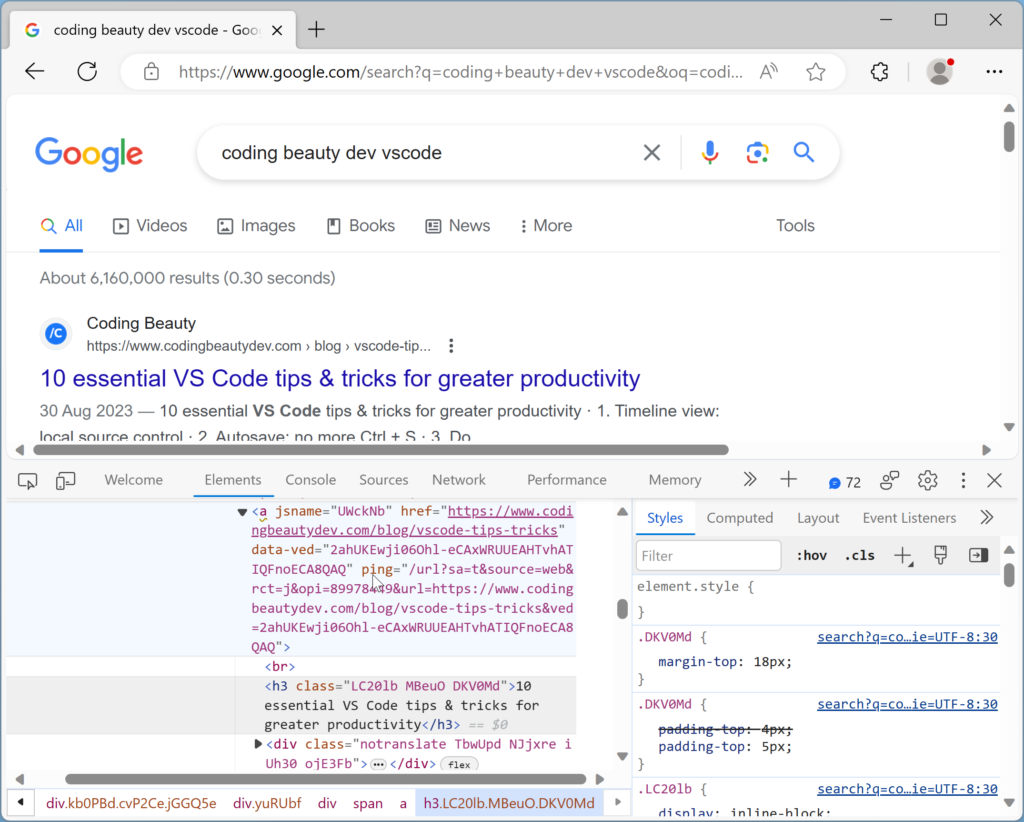

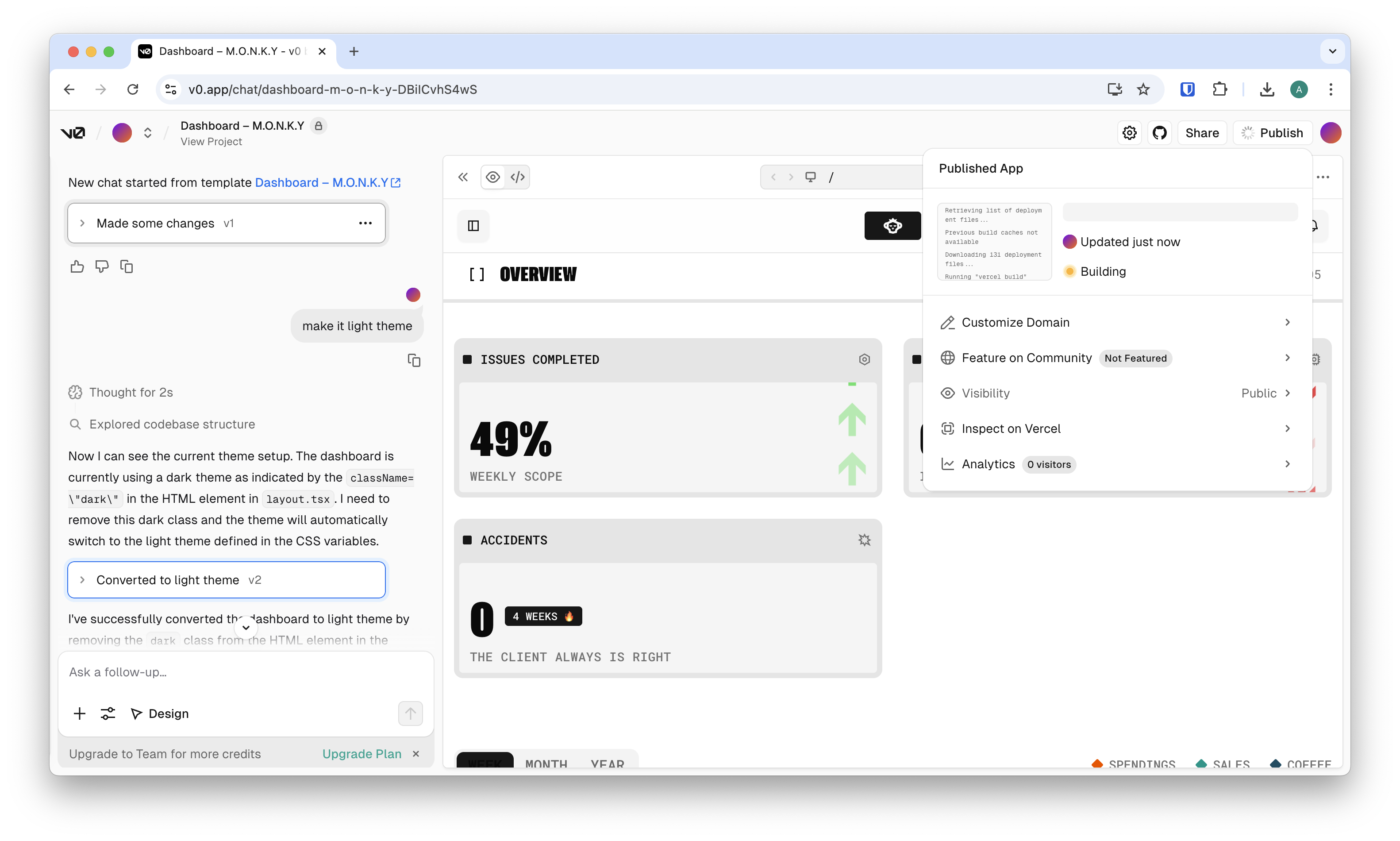

Look how I just loaded this project from the community:

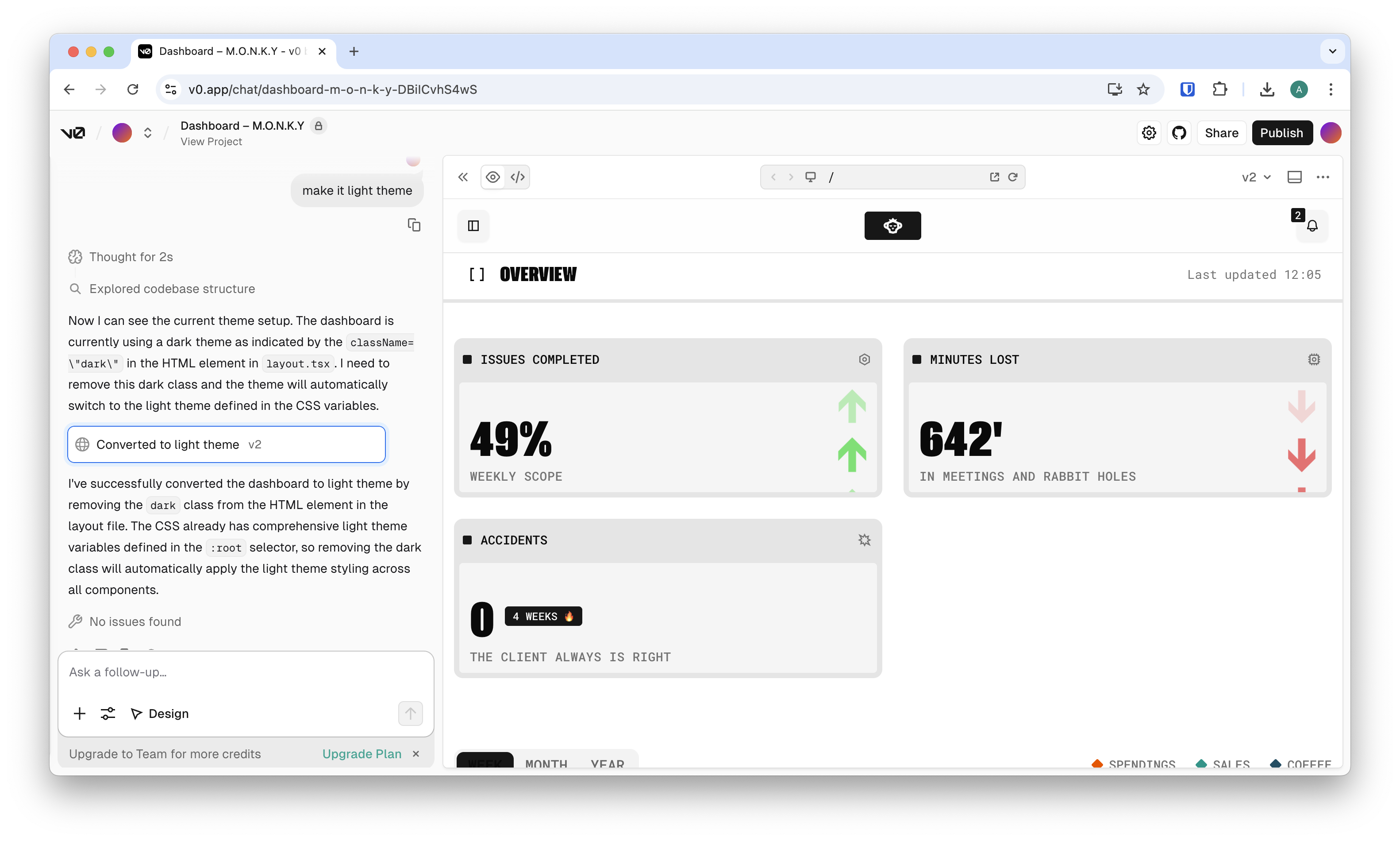

Then I asked it to switch to a light theme. So damn effortless…

And that’s really all there is to it. I can publish immediately, and it builds just like any other Vercel project:

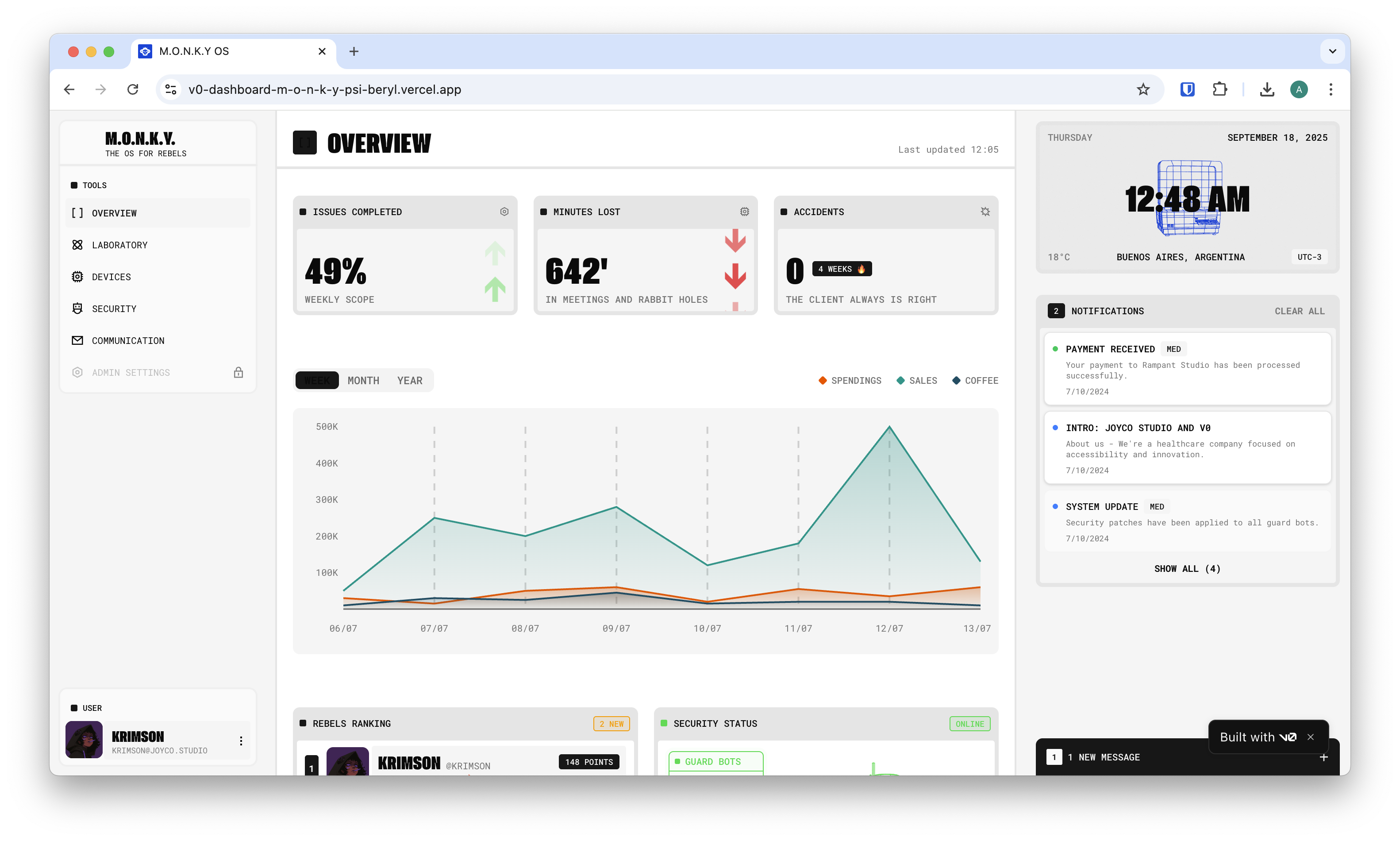

The result is an actual live site we can work with.

It works across popular stacks — React, Vue, Svelte, or plain HTML+CSS — and gives you three powerful views:

- Live preview to see your app instantly

- Code view for full control

- Design Mode for visual tweaks without touching code

By default, v0 uses shadcn/ui with Tailwind CSS, but you can also plug in your own design system to keep everything on-brand.
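shadcn/ui themes are driven by CSS custom properties such as `--background`, `--foreground`, `--primary`, and `--radius`, so keeping things on-brand usually comes down to overriding those variables. Here is a minimal, hypothetical sketch (the `brandTokens` values and the `toCssVariables` helper are illustrative, not part of v0 or shadcn/ui) that renders a token object into a `:root` block for a global stylesheet. The value format your project expects (hex, HSL channels, or OKLCH) depends on its shadcn/ui and Tailwind versions; hex is used here only for readability.

```typescript
// Hypothetical brand tokens. The names mirror shadcn/ui's CSS variable
// convention (--background, --primary, ...); the values are placeholders.
type ThemeTokens = Record<string, string>;

const brandTokens: ThemeTokens = {
  background: "#ffffff",
  foreground: "#0a0a0a",
  primary: "#4f46e5",
  "primary-foreground": "#ffffff",
  radius: "0.5rem",
};

// Render the tokens as CSS custom properties under a selector (":root" by default).
function toCssVariables(tokens: ThemeTokens, selector = ":root"): string {
  const lines = Object.entries(tokens).map(
    ([name, value]) => `  --${name}: ${value};`,
  );
  return `${selector} {\n${lines.join("\n")}\n}`;
}

// Prints a ":root { ... }" block with one "--name: value;" line per token.
console.log(toCssVariables(brandTokens));
```

Generating the variables from one object keeps a single source of truth for the palette (pass `".dark"` as the selector for a dark variant). v0’s own design-system integration may work differently, so treat this as a starting point rather than the official mechanism.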

Using v0 is simple and fast:

- Describe your app in text or upload screenshots/Figma files.

- Iterate visually with Design Mode, adjusting typography, spacing, or colors without spending credits.

- Connect real services like databases, APIs, or AI providers.

- Deploy with a single click to Vercel — add your own domain if you like.

Because GitHub is built in, you can link a repo, choose a branch, and let v0 sync changes both ways.

What you can build with it

v0 is great for:

- Turning mockups into production-ready UIs

- Spinning up full-stack apps with authentication and a database

- Creating dashboards, landing pages, and internal tools

- Adding AI features by plugging in your own OpenAI, Groq, or other API keys
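Plugging in your own keys normally means the app reads them from environment variables (for example, ones set in the Vercel project settings) rather than from strings committed to the code. A minimal sketch, assuming the conventional variable names `OPENAI_API_KEY` and `GROQ_API_KEY` read by the official OpenAI and Groq SDKs; the `loadProviderKeys` helper is hypothetical and not something v0 is known to generate.

```typescript
// Hypothetical helper (not generated by v0): read AI provider keys from the
// environment instead of hard-coding them into source files.
type Provider = "openai" | "groq";

// Conventional variable names read by the official OpenAI and Groq SDKs.
const ENV_NAMES: Record<Provider, string> = {
  openai: "OPENAI_API_KEY",
  groq: "GROQ_API_KEY",
};

type ProviderKeys = Partial<Record<Provider, string>>;

// Throws if any required provider's key is missing or empty; otherwise
// returns every key that is present.
function loadProviderKeys(
  env: Record<string, string | undefined>,
  required: Provider[],
): ProviderKeys {
  const missing = required.filter((p) => !env[ENV_NAMES[p]]);
  if (missing.length > 0) {
    const names = missing.map((p) => ENV_NAMES[p]).join(", ");
    throw new Error(`Missing environment variables: ${names}`);
  }
  const keys: ProviderKeys = {};
  for (const p of Object.keys(ENV_NAMES) as Provider[]) {
    const value = env[ENV_NAMES[p]];
    if (value) keys[p] = value;
  }
  return keys;
}

// Example with a plain object; in a server-side route you would pass process.env.
const keys = loadProviderKeys({ OPENAI_API_KEY: "sk-example" }, ["openai"]);
console.log(keys.openai !== undefined); // prints true
console.log(keys.groq === undefined); // prints true
```

Failing fast with a clear list of missing variable names is easier to debug than an authentication error from the provider at request time, and it keeps secrets out of the generated code you review.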

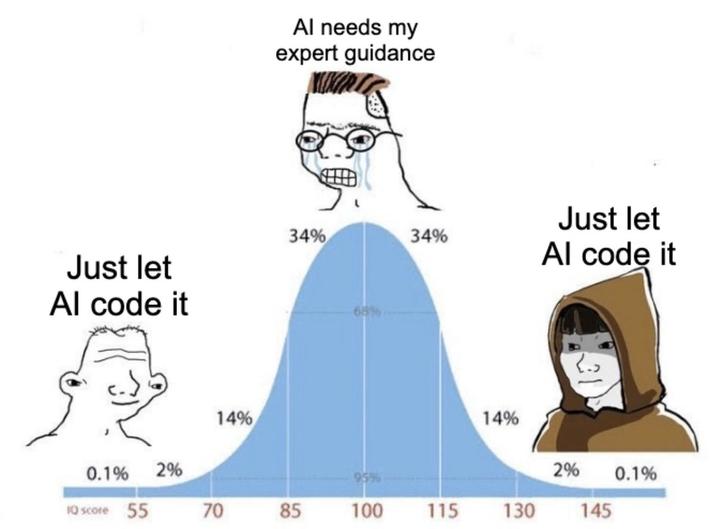

Essentially, it’s a fast lane for designers, product managers, and developers who want to get to a real, working app without months of hand-coding.

Integrations that matter

v0 connects to popular back-end and AI tools out of the box:

- Databases like Neon, Supabase, Upstash, and Vercel Blob

- AI providers including OpenAI, Groq, fal, and more

- UI components via shadcn’s “Open in v0” buttons

For teams building their own workflows, Vercel also offers a v0 Platform API to programmatically tap into its text-to-app engine.

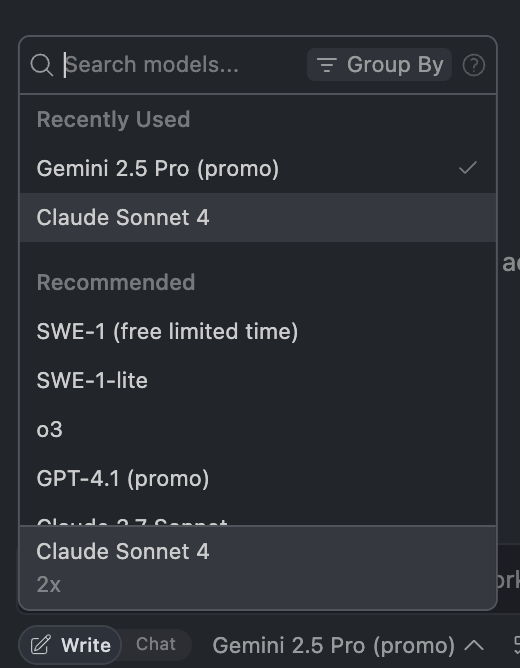

Pricing and recent changes

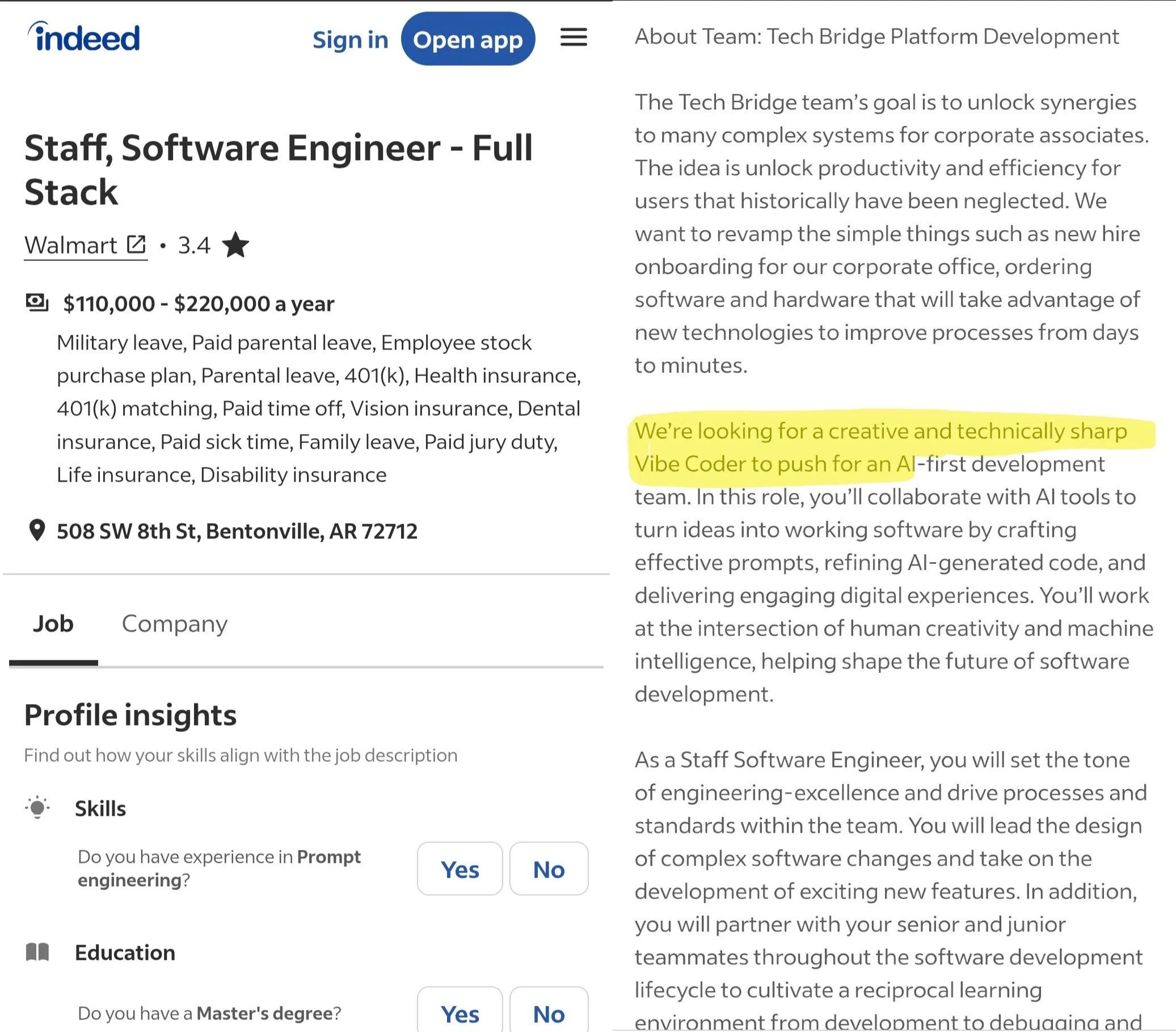

In 2025, Vercel shifted v0 to a credit-based pricing model with monthly credits on Free, Premium, and Team plans. Purchased credits on paid plans last a year.

It also moved from v0.dev to v0.app to signal its new focus on being an agentic app builder — one that can research, reason, debug, and plan, not just generate code.

Security and reality check

Because it’s powerful and fast, v0 has also been misused by bad actors to clone phishing sites. That’s not Vercel’s intention — it’s a reminder to always review and test generated code, just as you would a junior developer’s pull request.

When v0 shines

v0 is ideal if you:

- Need to ship MVPs, landing pages, or internal tools quickly

- Already work with Tailwind/shadcn or have a design system in place

- Want to iterate fast with an AI assistant that can fix its own errors

You’ll still want to review for security, performance, and business logic. But for speed, flexibility, and polish, it’s one of the best ways to get an app live today.

Getting started

Sign up at v0.app, describe your project, iterate visually, hook up your back end, and deploy. In minutes, you can go from idea to a working app.

Vercel’s v0 isn’t just another “AI website builder.” It’s a full-stack, agentic assistant that understands modern web development and helps you actually ship.

This is definitely worth trying if you’re looking for a fast and flexible way to go from concept to production.