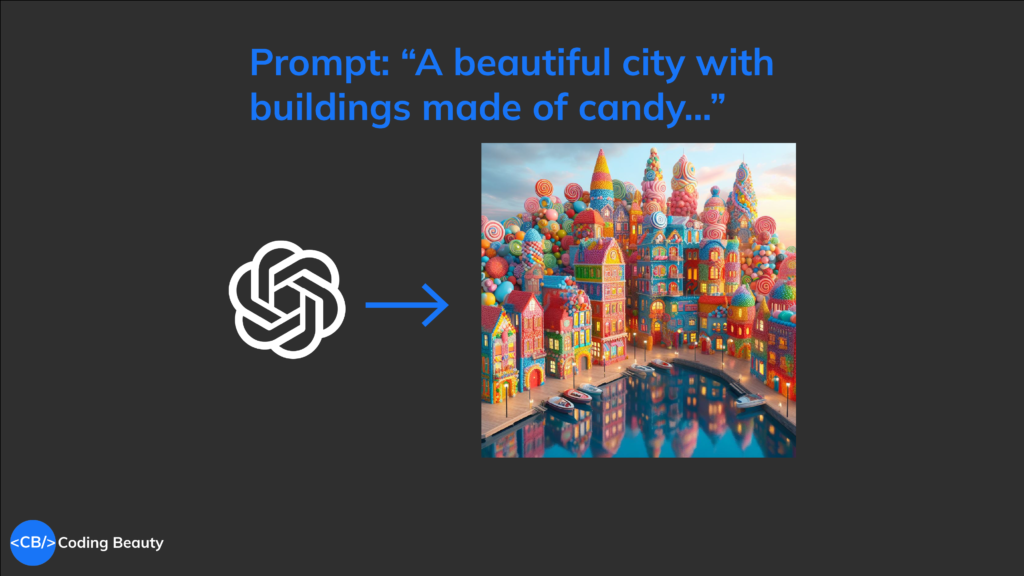

Coding as digital creations growing into greatness

What if we see coding as a delicate act of nurturing, a digital development brought about by our creativity and the intricate logical reasoning of our minds?

There’s a reason we’re called software *developers*. Developers develop; developers build and grow.

If we can get ourselves to view a software project as a unit of its own, with all its capabilities, potential, and goals, we instantly start to observe numerous interesting connections between programming and other nurturing acts like parenting, gardening, and self-improvement.

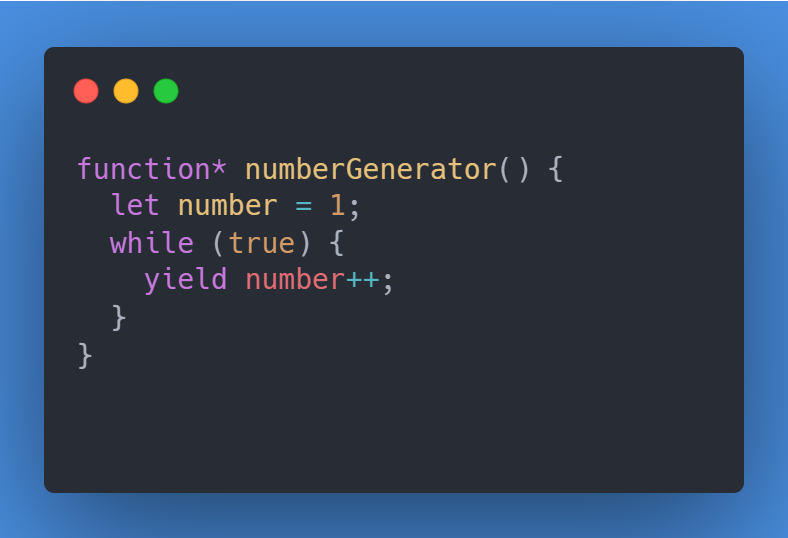

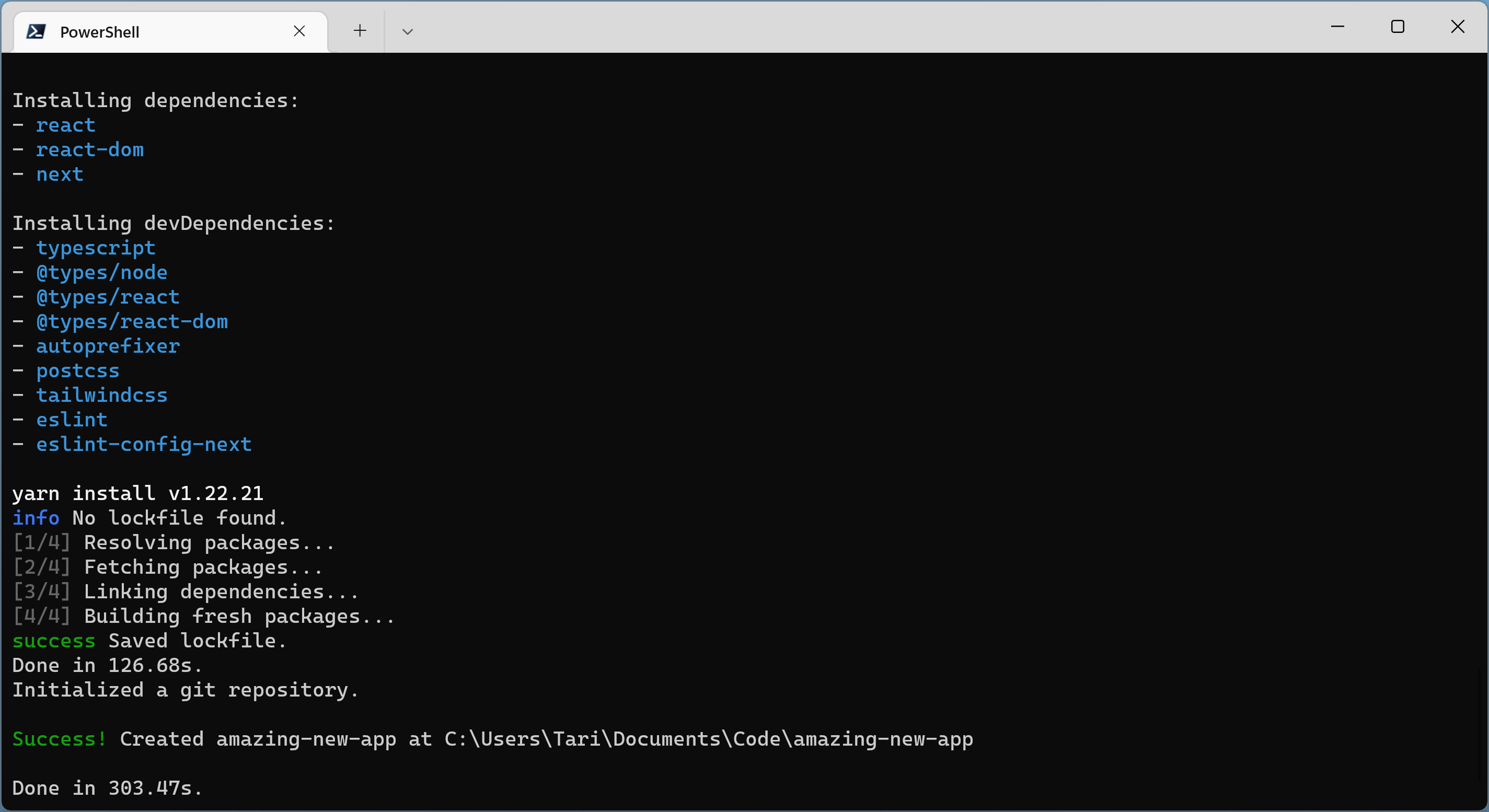

From the onset: a freshly scaffolded project, a file, or a folder. There is always a beginning, a birth.

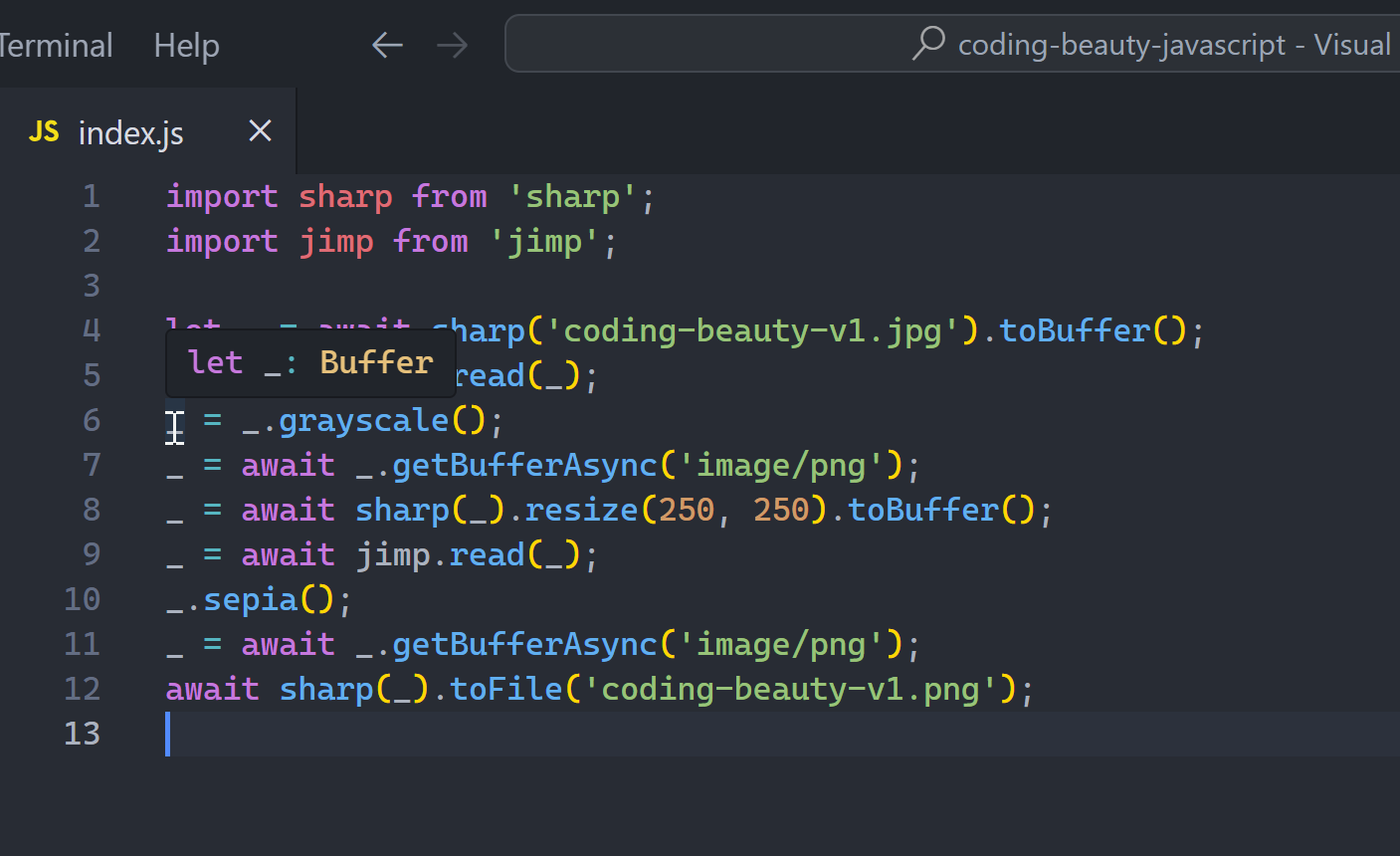

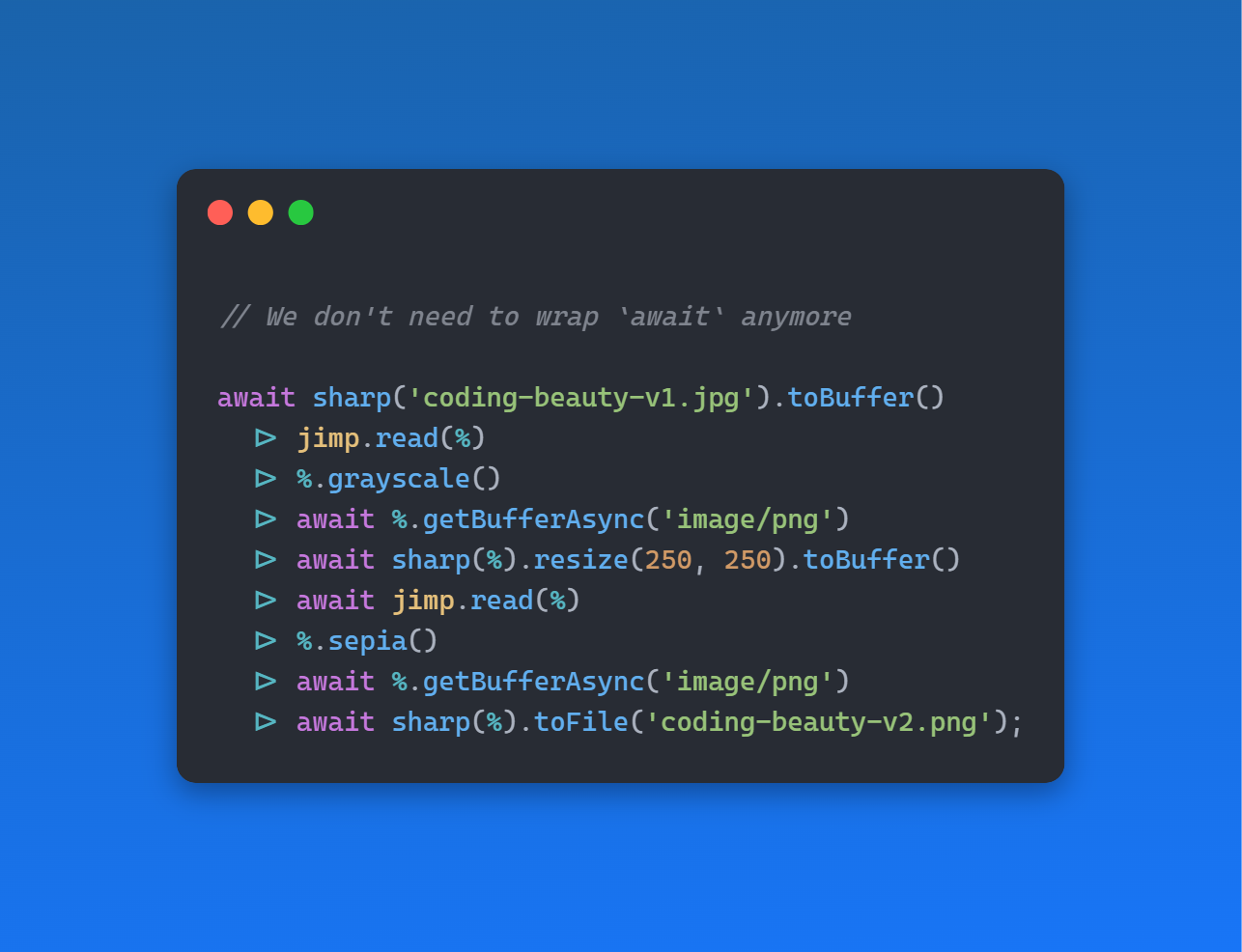

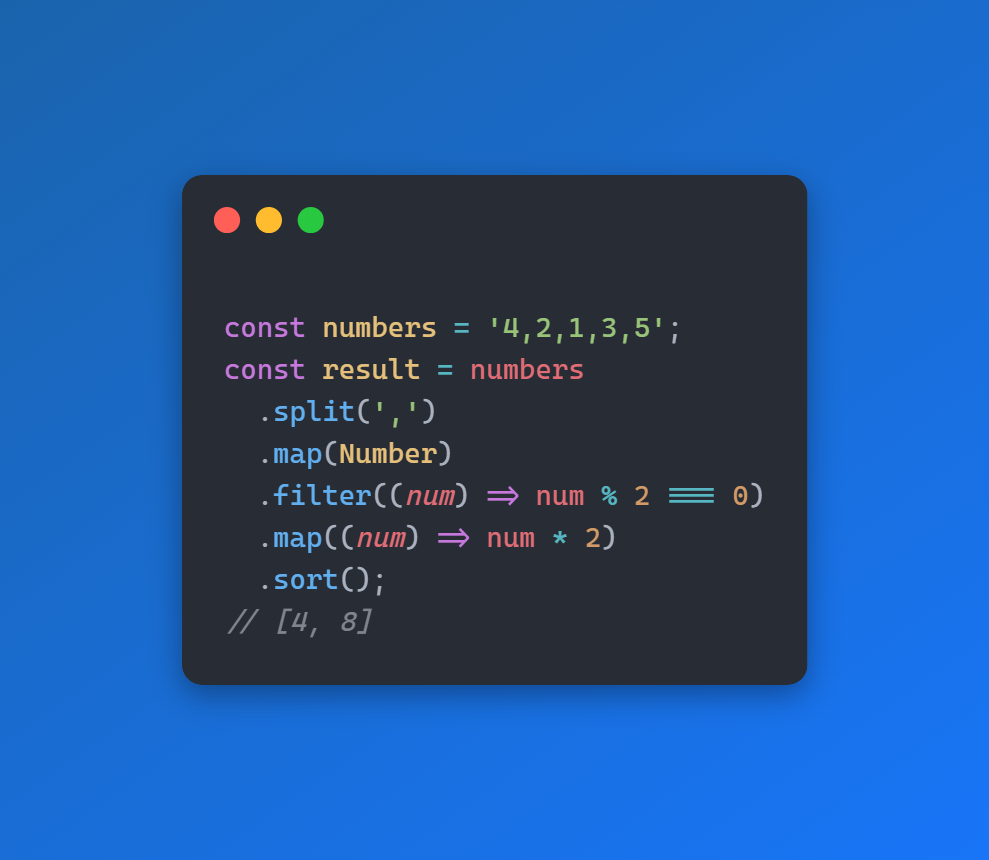

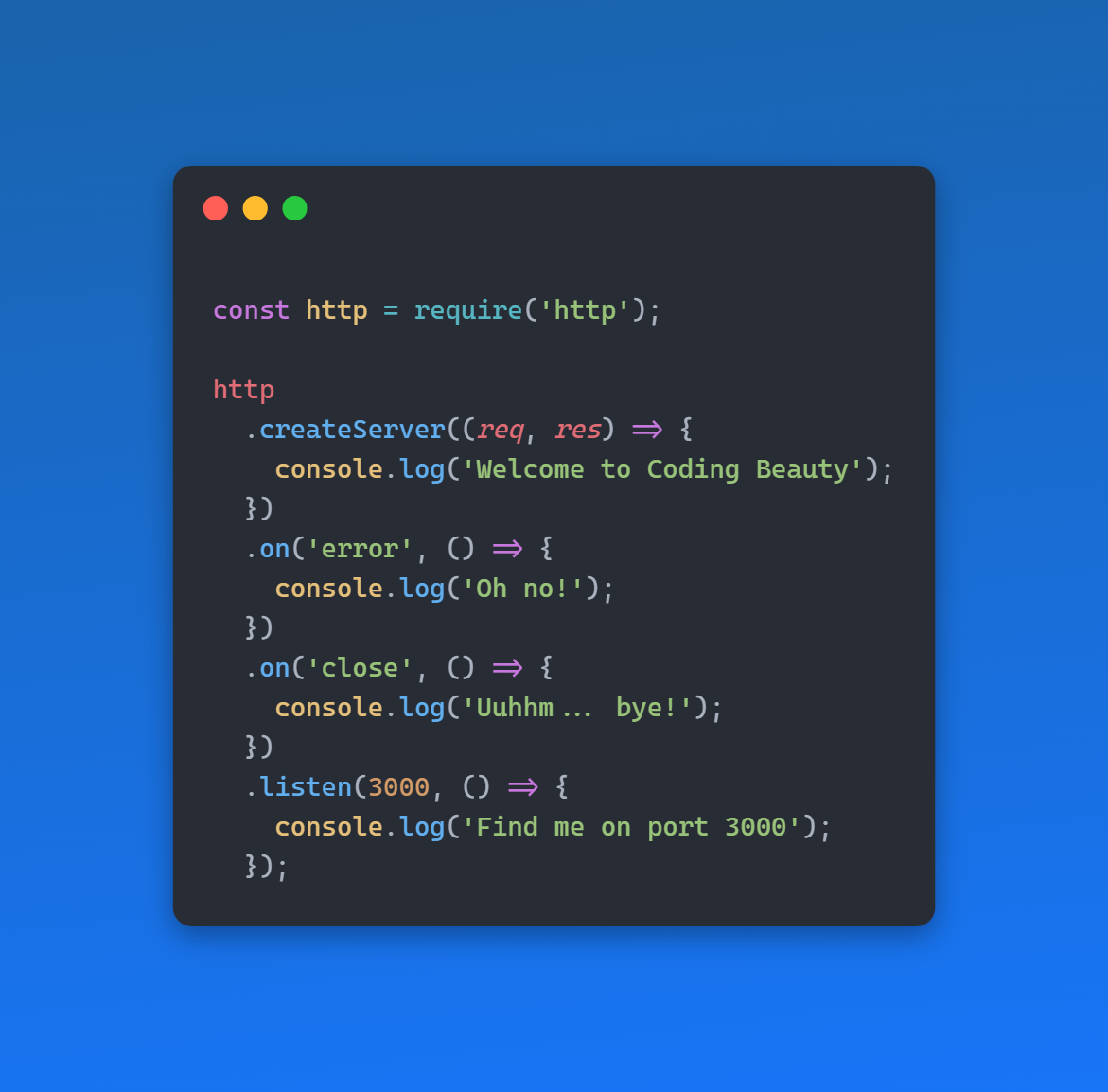

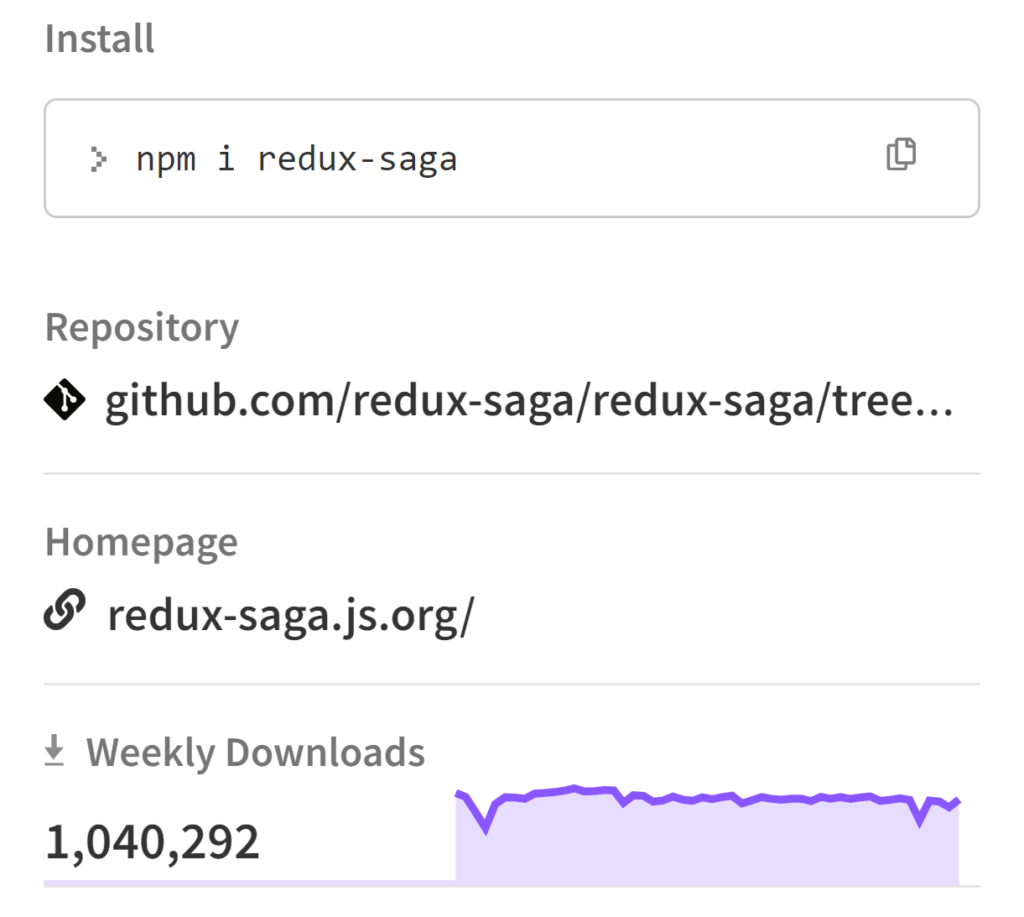

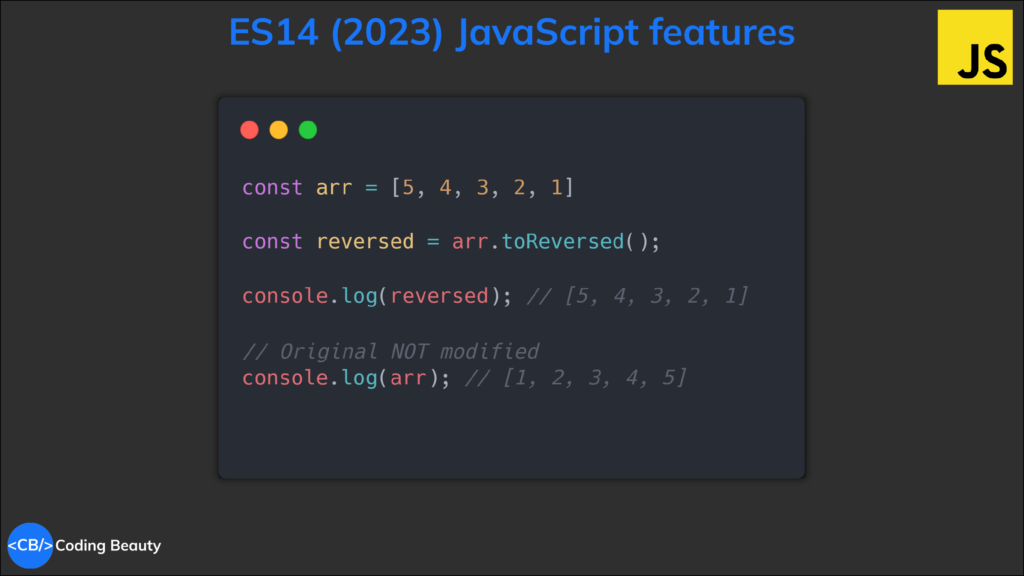

With every single line of code added, deleted, and refactored, the software grows into greatness. With every additional library, package, and API fastened into place, low-level combines into high-level; the big picture unfolds; a fully formed digital creation emerges.

Goal in sight: Plan

Similar to nurturing a budding sapling, the coding process begins with the seed of an idea. You envision the type of product you hope your software will become.

You and your team carefully plan and design the structure, algorithms, and flow of the code, much like parents create a loving and supportive home by providing the resources, values, and guidance that foster their child’s development.

This thoughtful approach lays the foundation for the entire coding project and boosts the chances of a bright future.

From here to there: Grow

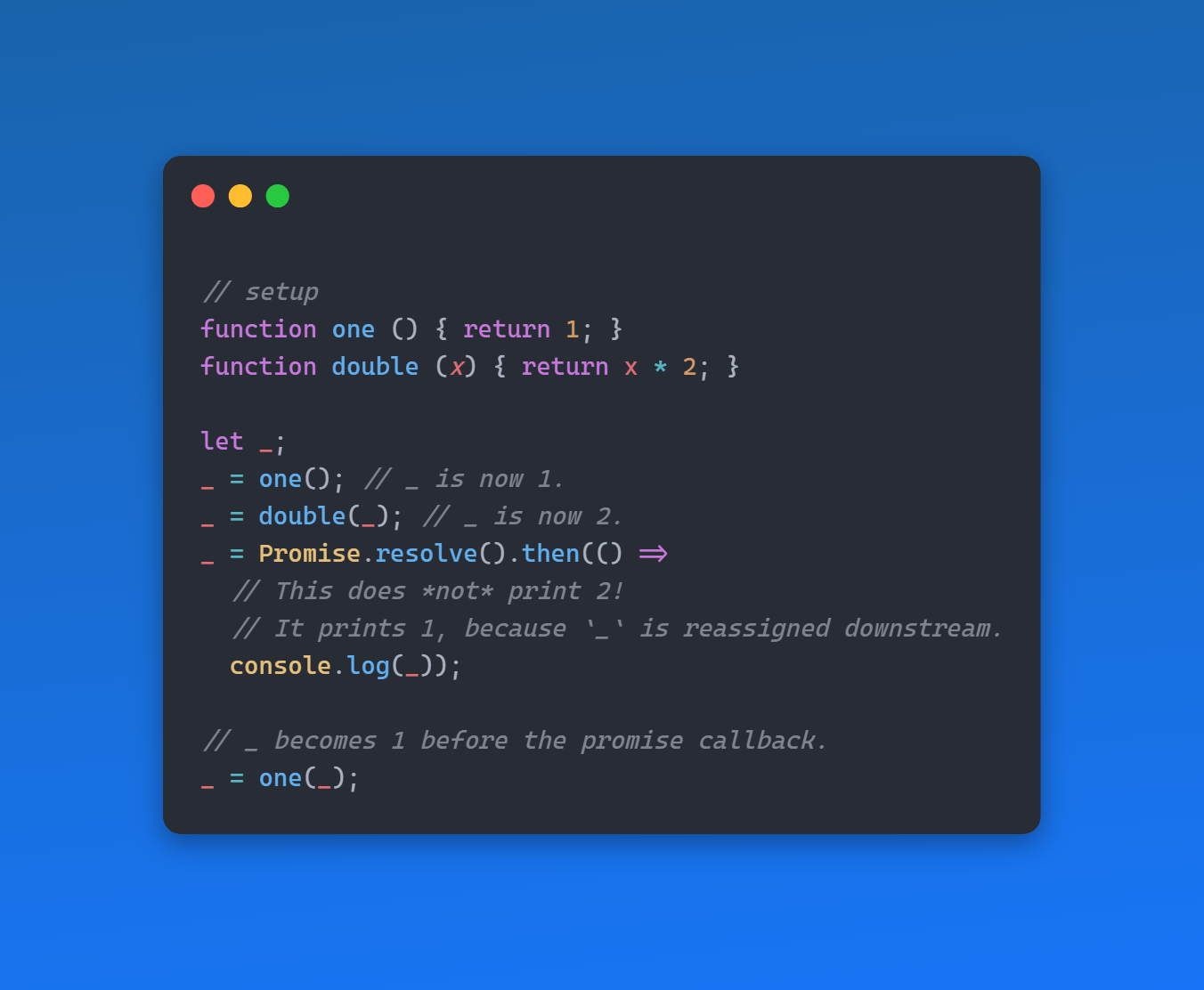

Your codebase needs to grow and improve, but growth on its own is a very vague and generic word. It’s just… an increase.

For any type of growth of any entity to be meaningful, it has to be in the direction of *something*. Something valuable and important.

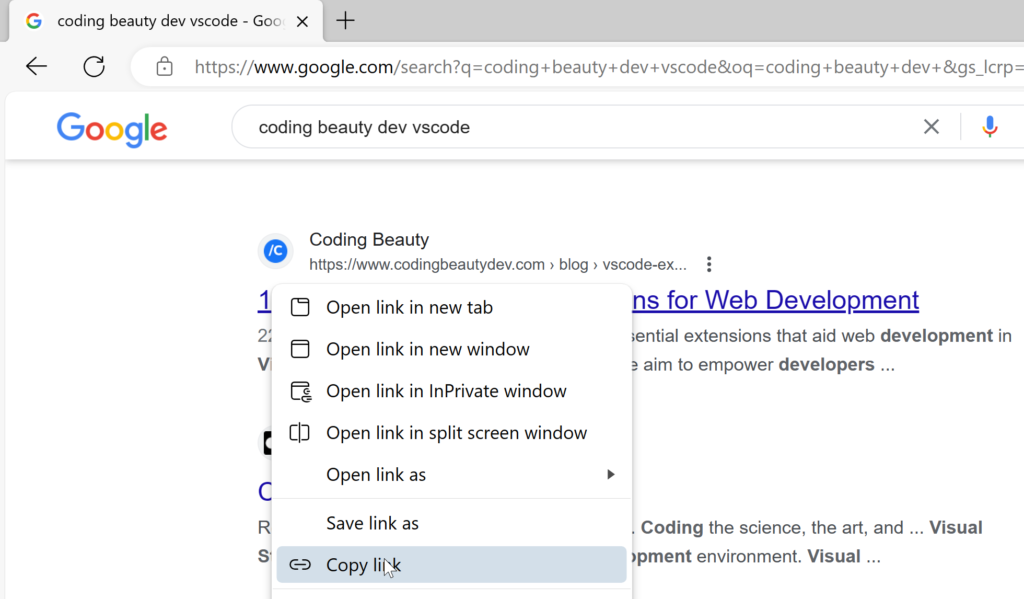

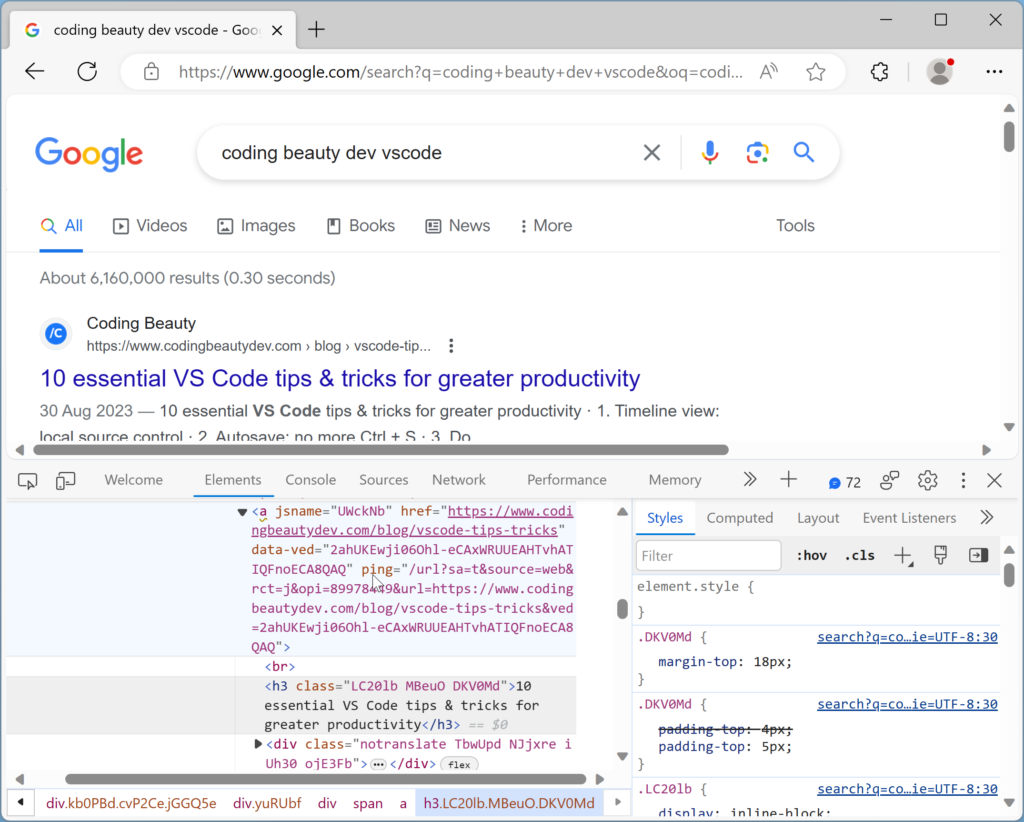

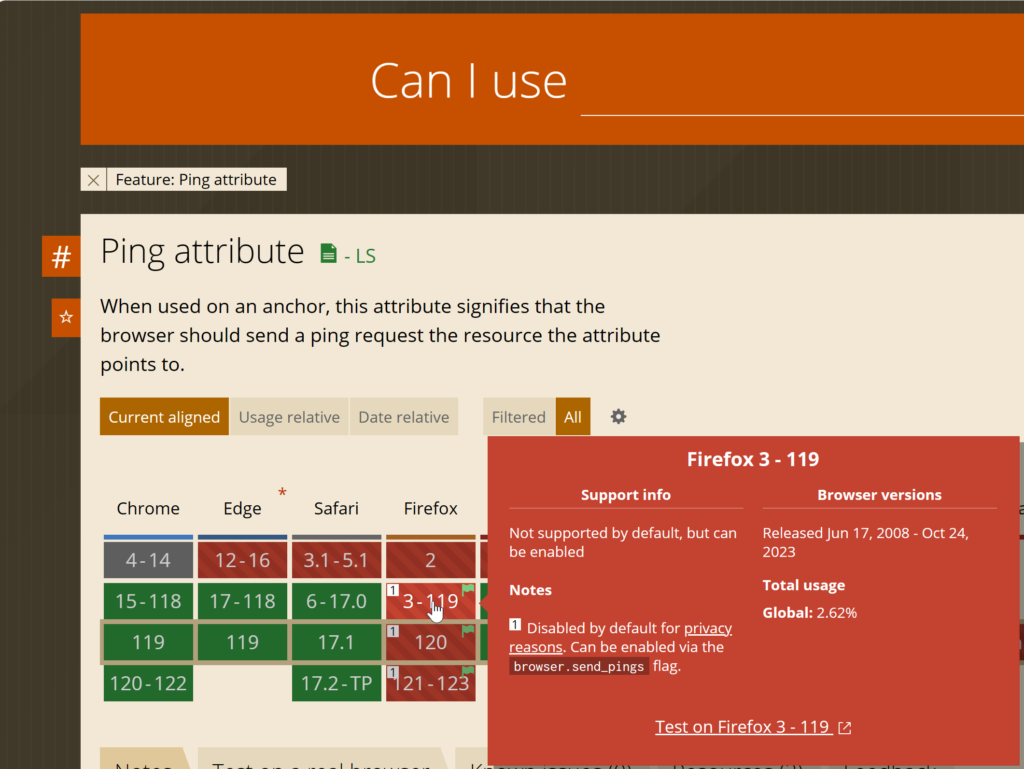

What’s the first thing I get right now when I search for the meaning of “personal growth” on Google?

This definition prefers to remain vague and makes it clear that personal growth isn’t the same for everyone. You choose your values, set goals based on them, and develop yourself and your environment according to those goals.

But this doesn’t mean you can pick just any goal you want for a happy life; certain goals are far more beneficial for your well-being than others. A target of progressively gobbling up to 10,000 calories of ice cream every day can never be put in the same league as running a marathon or building solid muscle at the gym.

The same applies to coding. Aiming to write 10,000 lines of code or add 1,000 random features to your software is a terrible aspiration, and it will never guarantee high-quality software.

Apart from the explicitly defined requirements, here are some important growth metrics to measure your software project by:

On a lower level, all about the code:

- Readability: How easy is it for a stranger to understand your code, even if they know nothing about programming?

- Efficiency: How many units of a task can your program complete in X amount of time?

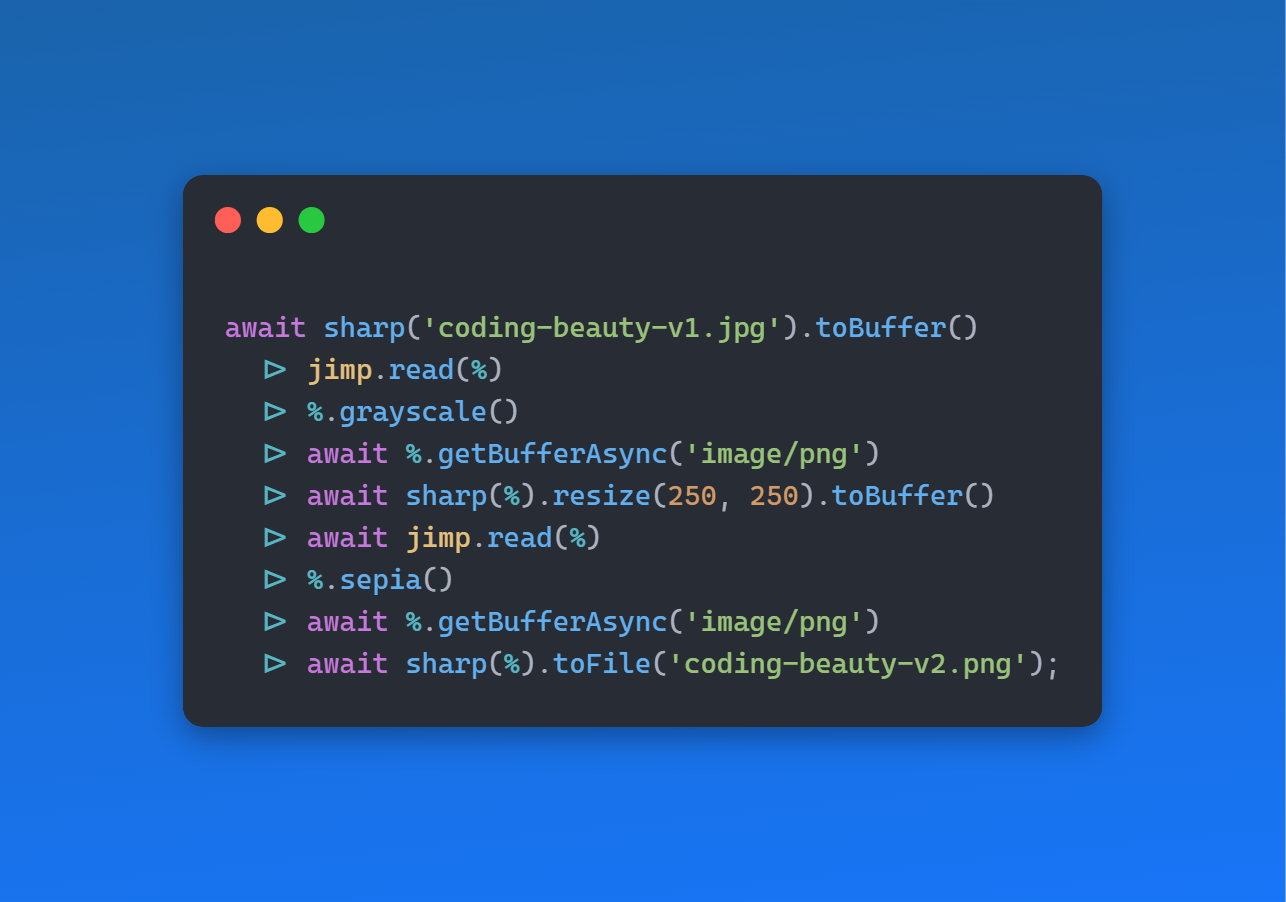

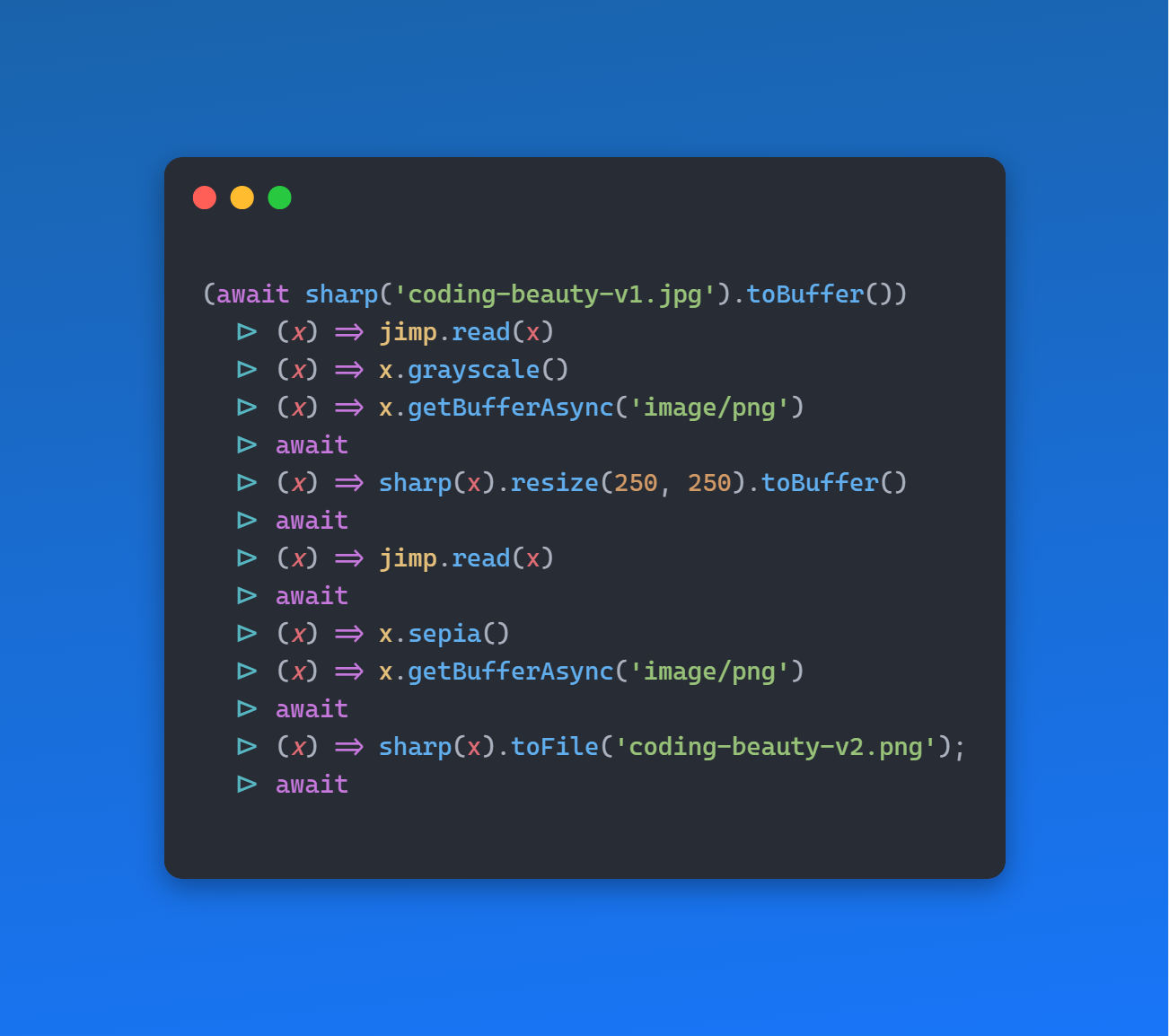

- Modularity: Is your code organized into separate modules for different responsibilities? Can one module be swapped out without the entire project crashing into ruins?

- Testability and test quality & quantity: How easy is it to test these modules? Do you keep side-effects and mutation to a minimum? And yes, how many actual tests have you written? Are these tests even useful and deliberate? Do you apply the 80/20 rule during testing?
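As a rough illustration of the modularity and testability points above, here is a minimal Python sketch. Every name in it (`Storage`, `InMemoryStorage`, `apply_discount`, `save_discounted_price`) is hypothetical and invented for this example; it shows one possible way to keep side effects at the edges, not a prescribed design.

```python
from typing import Protocol


class Storage(Protocol):
    """Hypothetical interface: any module that can save a value under a key."""

    def save(self, key: str, value: float) -> None: ...


class InMemoryStorage:
    """One interchangeable implementation; a database-backed one could replace it."""

    def __init__(self) -> None:
        self.data: dict[str, float] = {}

    def save(self, key: str, value: float) -> None:
        self.data[key] = value


def apply_discount(price: float, percent: float) -> float:
    """Pure function: no side effects, same output for the same input."""
    return round(price * (1 - percent / 100), 2)


def save_discounted_price(
    storage: Storage, item: str, price: float, percent: float
) -> float:
    """The side effect (saving) is isolated behind the Storage interface."""
    discounted = apply_discount(price, percent)
    storage.save(item, discounted)
    return discounted
```

Because `apply_discount` touches nothing outside its arguments, it can be tested with a single assertion, and because `save_discounted_price` only depends on the `Storage` interface, the storage module can be swapped out without changing the rest of the code.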

On a higher level closer to the user:

- Effective, goal-focused communication: with copy, shape, icons, and more.

- Perceived performance: Including immediate responses to user actions, loading bars for long-running actions, buffering, etc.

- Number of cohesive features: Are the features enough, and relevant to the overall goal of the software?

- Sensual pleasure: Lovely aesthetics, stunning animations, sound effects, and more.

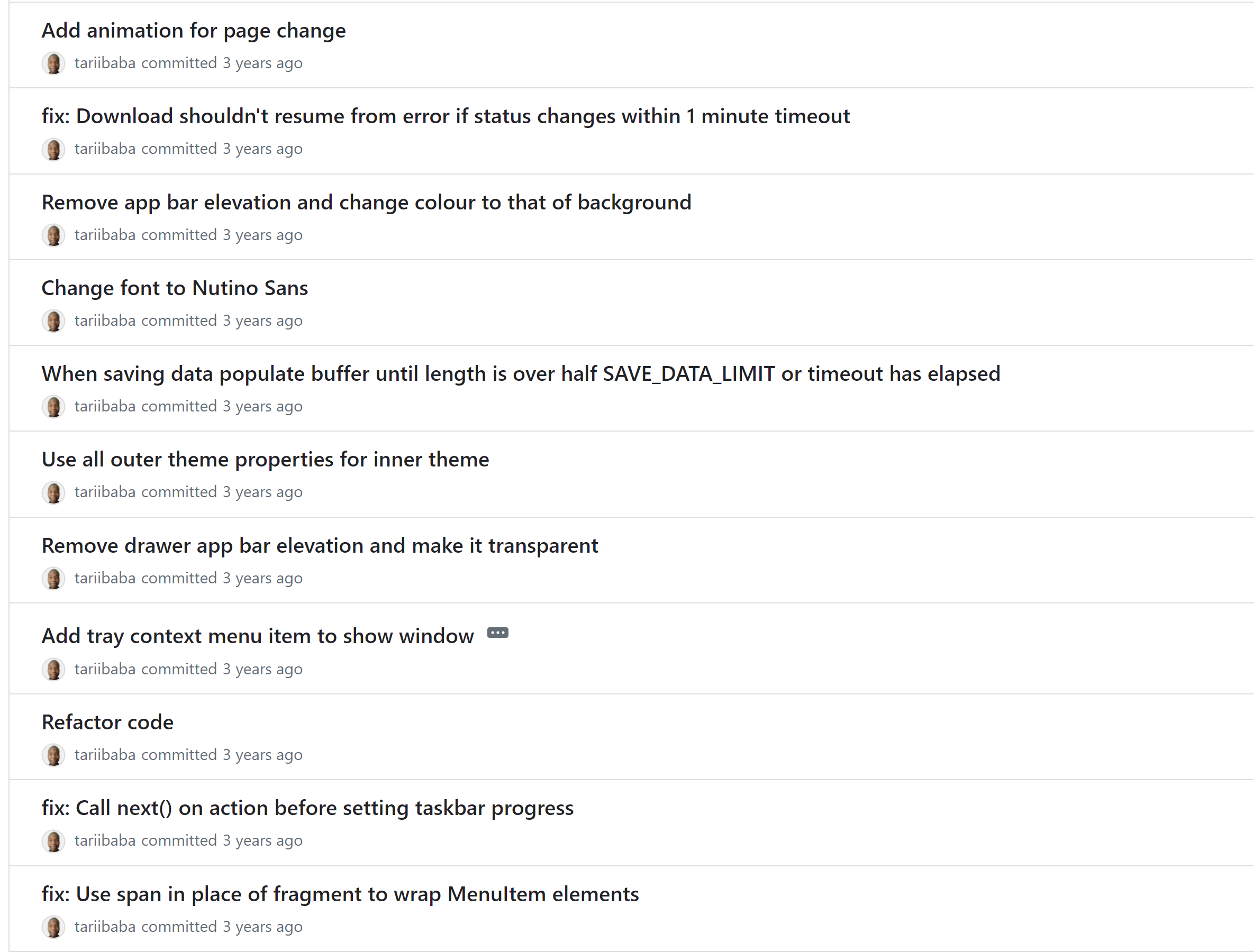

This delightful growth process is one of the things that transforms coding from a cold logical activity into a creative, empowering, addictive pursuit. You continually strive, with the ultimate desired outcome in sight, line after line, commit after commit, feature after feature; everlasting progress in an energizing purposeful atmosphere.

What man actually needs is not a tensionless state but rather the striving and struggling for some goal worthy of him. What he needs is not the discharge of tension at any cost, but the call of a potential meaning waiting to be fulfilled by him.

Viktor E. Frankl (1966). “Man’s Search for Meaning”

And yes, just like raising a responsible, well-rounded individual, we may never actually reach a definite, final destination. But the goal still gives us a strong sense of direction. We are going *somewhere* and we have the map.

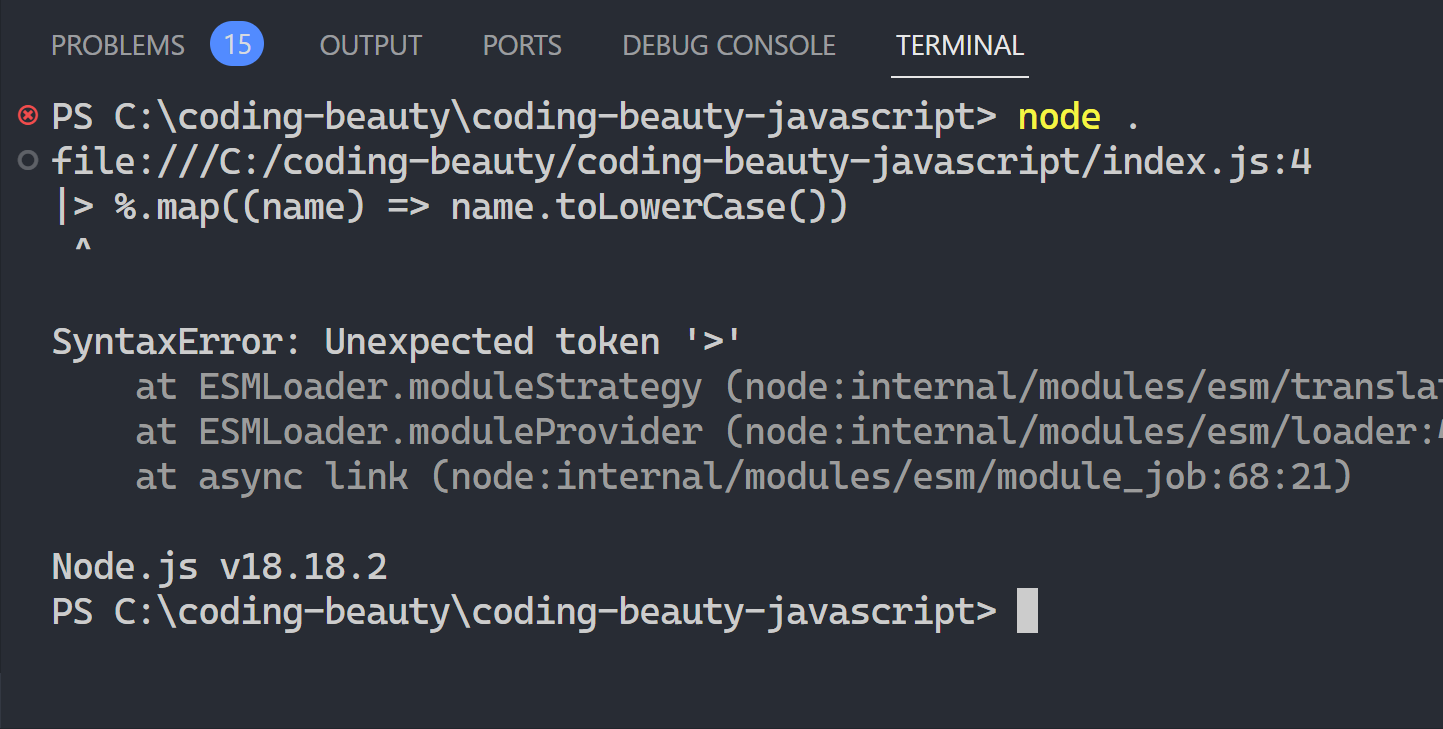

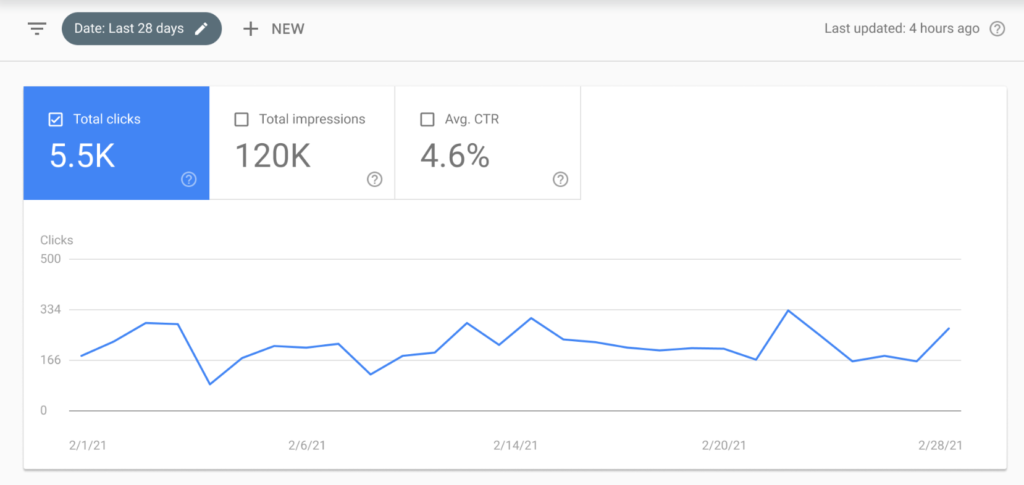

Have you heard the popular estimate that devs spend, on average, as much as 75% of their time debugging software?

Yes, it would be nothing but a pipe dream to expect this journey to be easy.

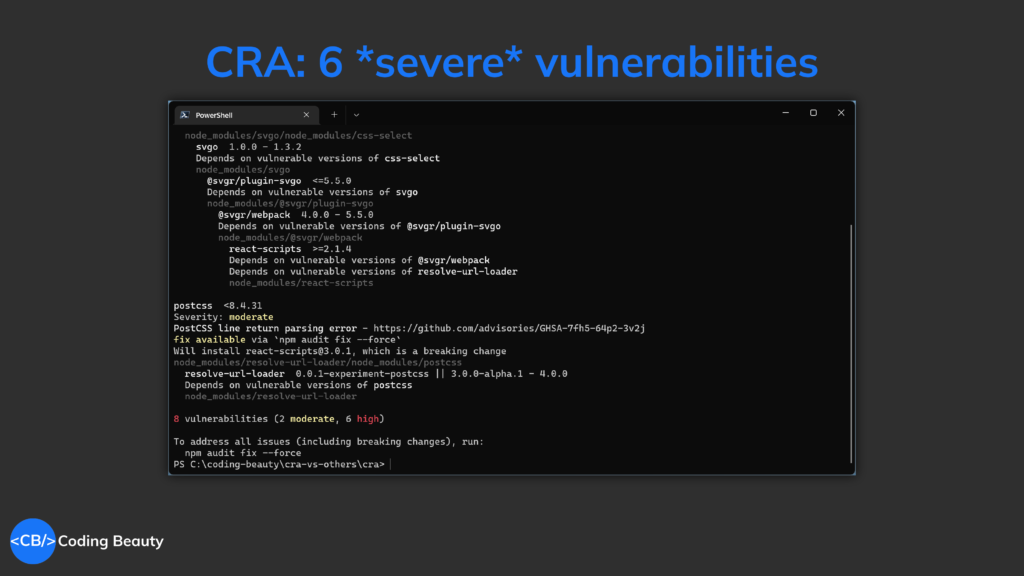

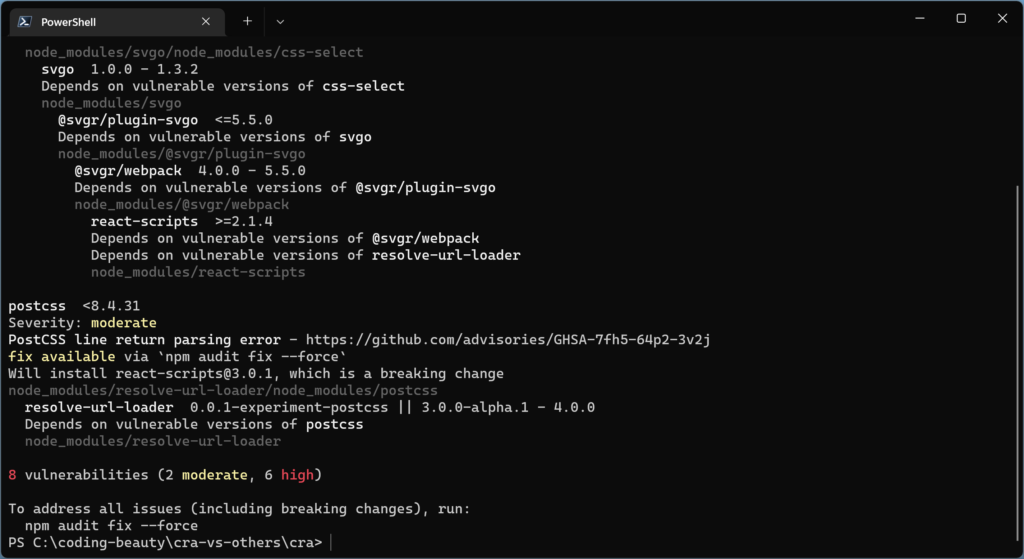

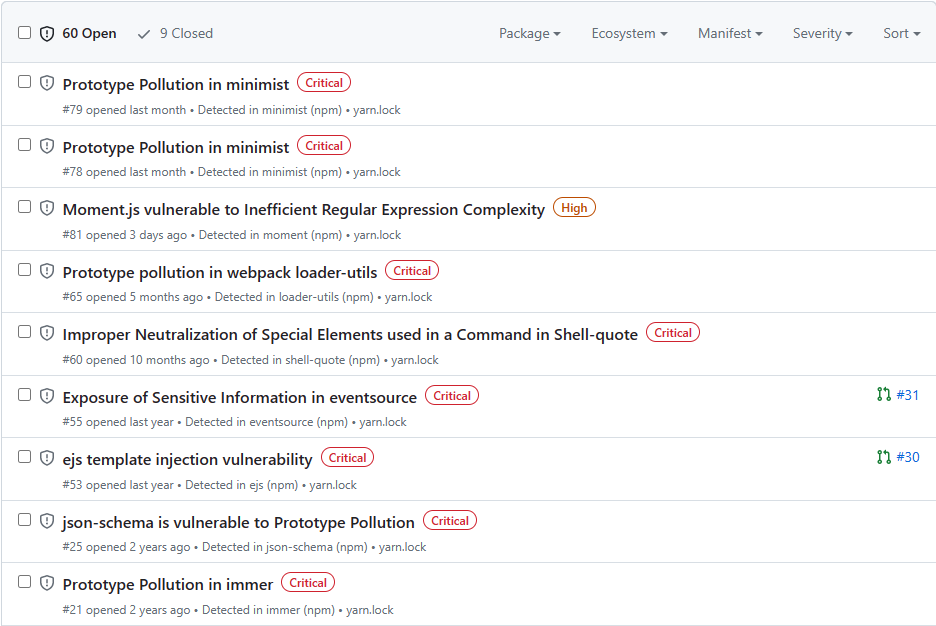

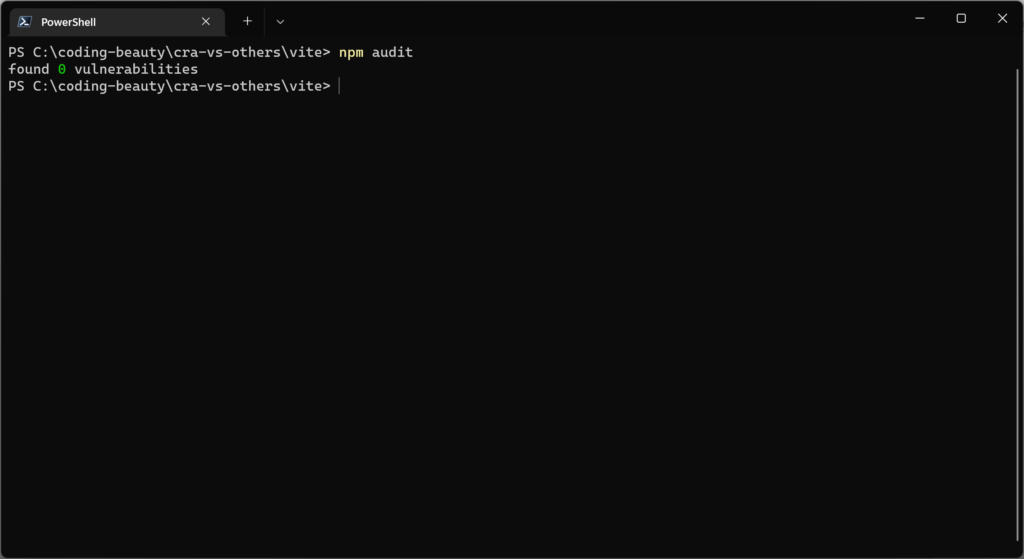

Indeed, as a loving parent does all they can to shield their dearest child from harm’s way, programmers must safeguard their code from bugs and vulnerabilities to produce robust and reliable software. We’ve already talked about testing, and these precautions drastically reduce the chances of nasty errors and mistakes.

But just like with building good personal habits and social skills, you are still going to make mistakes along the way.

With any serious complex codebase, there are still going to be loud, frustrating bugs that bring you to the point of despair and hopelessness. But you simply cannot quit. You are responsible; you are the architect of this virtual world, the builder of digital solutions and innovations. You possess the power to overcome obstacles and transform difficulties into opportunities for growth — your growth, your software’s growth.

And what about when the path toward the goal changes dramatically? Close-mindedness won’t take you far.

Parents need to be adaptable and flexible in adjusting to their child’s unique, changing needs and stages of development. Likewise, software requirements are hardly ever set in stone. You must be able to incorporate new features into your codebase, and remove them as time goes on.

Proactive scalability is nice to have in every software system, but it isn’t always feasible; in the real world you have time and cost constraints, and you need to move fast. What was a great algorithm at 1,000 users could quickly become a huge performance bottleneck at 1 million users. You may need to migrate to a whole new database for increasingly complex datasets needing speedy retrieval.
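To make the scaling point concrete, here is a small Python sketch, assuming a hypothetical task of checking a list of user emails for duplicates. Both function names are invented for illustration. The first version compares every pair, which is perfectly reasonable for a thousand users but grows quadratically; the second does the same job with a set in roughly linear time.

```python
def has_duplicates_quadratic(emails: list[str]) -> bool:
    """Compares every pair: fine for a thousand items, slow for a million."""
    for i in range(len(emails)):
        for j in range(i + 1, len(emails)):
            if emails[i] == emails[j]:
                return True
    return False


def has_duplicates_linear(emails: list[str]) -> bool:
    """Tracks seen values in a set, so each item is checked roughly once."""
    seen: set[str] = set()
    for email in emails:
        if email in seen:
            return True
        seen.add(email)
    return False
```

Both functions return the same answers; only the amount of work changes as the input grows. With 1,000 emails the quadratic version makes at most about 500,000 comparisons, while with 1 million emails it could need around 500 billion.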

Takeaway: What coding should be

Like tending to a seedling or guiding a child’s development, coding requires careful planning, thoughtful design, and continuous adaptation. It’s an everlasting process of meaningful growth, measured not by lines of code but by metrics such as readability, efficiency, beauty, and user-focused features. With each line added, each bug fixed, and each obstacle overcome, the software and its creator thrive together on a path filled with purpose, determination, and endless possibilities.