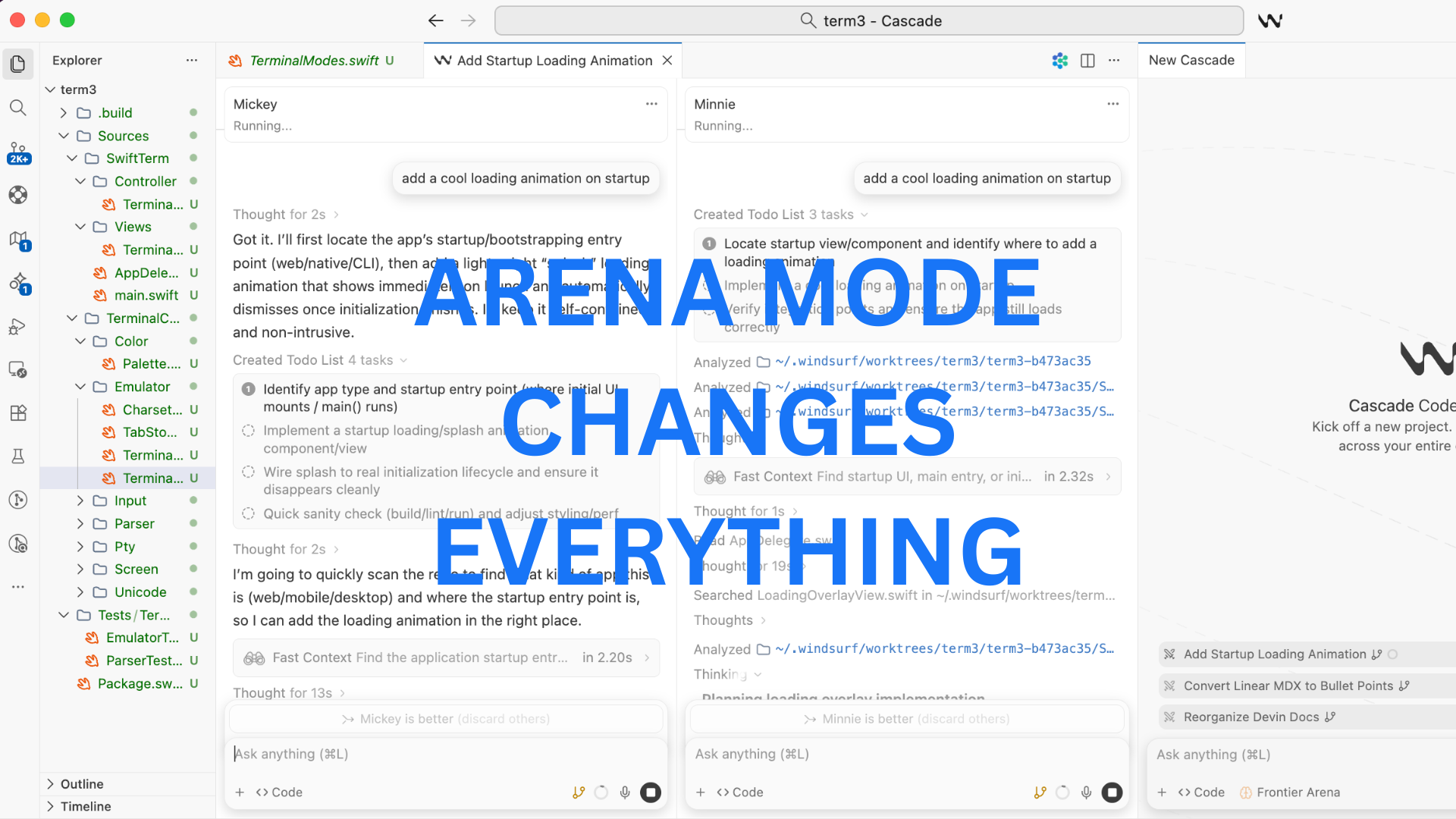

This incredible IDE upgrade lets you always know the best coding model to use

Wow this is huge.

Windsurf just completely revolutionized AI coding with this incredible new IDE feature.

With the new Arena Mode in Windsurf, you can finally know exactly how strong your coding models are…

By pitting them against each other inside your actual project, on the same prompt, at the same time. Then you just pick the winner.

No more guessing or going on vibes, like many developers still do.

No “someone on YouTube said this model is better”…

Just:

Which one actually helped me more right now, for this particular use case?

What Arena Mode actually does

Normally, when you use a particular coding model, you’re assuming it’s one of the best for the job.

Arena Mode challenges that head on.

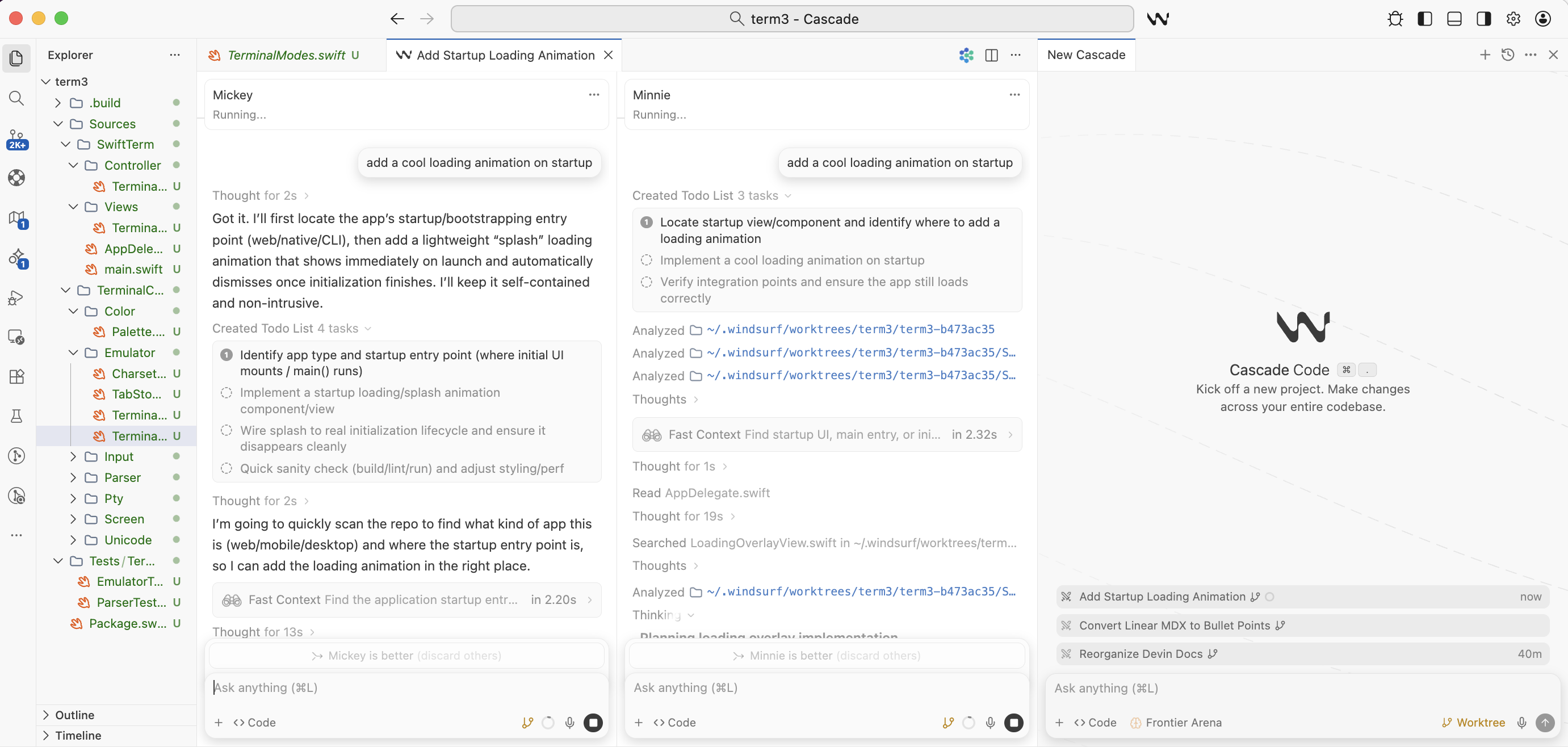

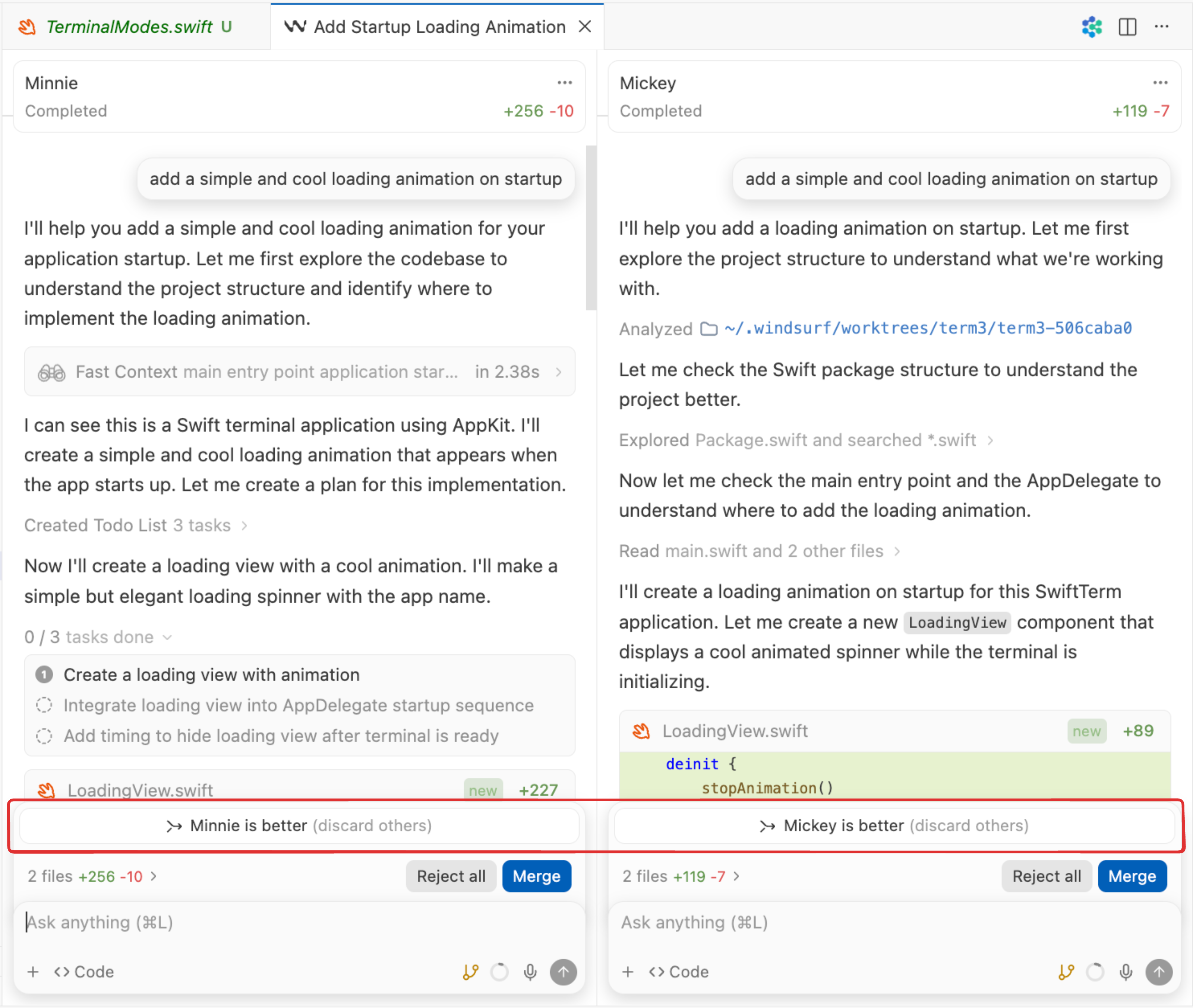

With Arena Mode, Windsurf spins up multiple parallel Cascade sessions, each powered by a different model, and runs them side-by-side on the same task. You see the outputs next to each other, compare the approaches, and decide which one wins.

Once you choose, Windsurf keeps going with the winner and drops the rest. Simple.
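To make the idea concrete, here is a minimal sketch of "same prompt, several sessions, keep one". It is not Windsurf's implementation: the `Session` type, `run_arena`, `keep_winner`, and the two fake models are all hypothetical stand-ins, and a real session would call an actual model and edit files rather than return a string.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# Hypothetical stand-in for a model-backed session: takes a prompt, returns a proposal.
Session = Callable[[str], str]


def run_arena(prompt: str, sessions: Dict[str, Session]) -> Dict[str, str]:
    """Run every session on the same prompt in parallel and collect the outputs."""
    with ThreadPoolExecutor(max_workers=max(1, len(sessions))) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in sessions.items()}
        return {name: future.result() for name, future in futures.items()}


def keep_winner(outputs: Dict[str, str], winner: str) -> str:
    """Return the chosen output; the other candidates are simply discarded."""
    return outputs[winner]


# Two fake "models" so the sketch runs without any network access.
sessions = {
    "model_a": lambda p: f"[A] plan for: {p}",
    "model_b": lambda p: f"[B] plan for: {p}",
}
outputs = run_arena("rename the config loader", sessions)
chosen = keep_winner(outputs, "model_b")
```

The point of the sketch is only the shape: every contender gets the identical prompt, and nothing is kept from the losers once you choose.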

It sounds small, but it completely changes how you think about model choice.

The underrated magic: isolated git worktrees

This is what makes Arena Mode feel legit instead of gimmicky and unstable.

Each model runs in its own git worktree. That means:

- No stepping on each other’s changes

- No weird merge situations

- No “wait, which model edited this file?”

You can actually try two different solutions—accept changes, inspect diffs, and judge them like real code—because they are real code.

This alone makes Arena Mode way more useful than copying prompts between tools or tabs.
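If you have never used worktrees, the sketch below shows roughly what "one worktree per model" looks like in plain git terms. The directory layout, the `arena/` branch prefix, and the `worktree_commands` helper are assumptions made for illustration; the article does not say how Windsurf names or places its worktrees. The only real thing here is the `git worktree add -b <branch> <path> <commit-ish>` command itself.

```python
from pathlib import PurePosixPath
from typing import List


def worktree_commands(repo: PurePosixPath, labels: List[str], base: str = "HEAD") -> List[List[str]]:
    """Build one `git worktree add` command per contender.

    Each contender gets its own branch and its own directory next to the repo,
    so its edits never touch the main checkout or another contender's files.
    """
    commands = []
    for label in labels:
        path = repo.parent / f"{repo.name}-arena-{label}"  # hypothetical layout
        branch = f"arena/{label}"                          # hypothetical naming
        commands.append(
            ["git", "-C", str(repo), "worktree", "add", "-b", branch, str(path), base]
        )
    return commands


cmds = worktree_commands(PurePosixPath("/work/myapp"), ["a", "b"])
```

Each command list could be passed to `subprocess.run(cmd, check=True)`. Once the contenders have made their changes, you would compare the branches with an ordinary `git diff` and clean up the losers with `git worktree remove <path>`.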

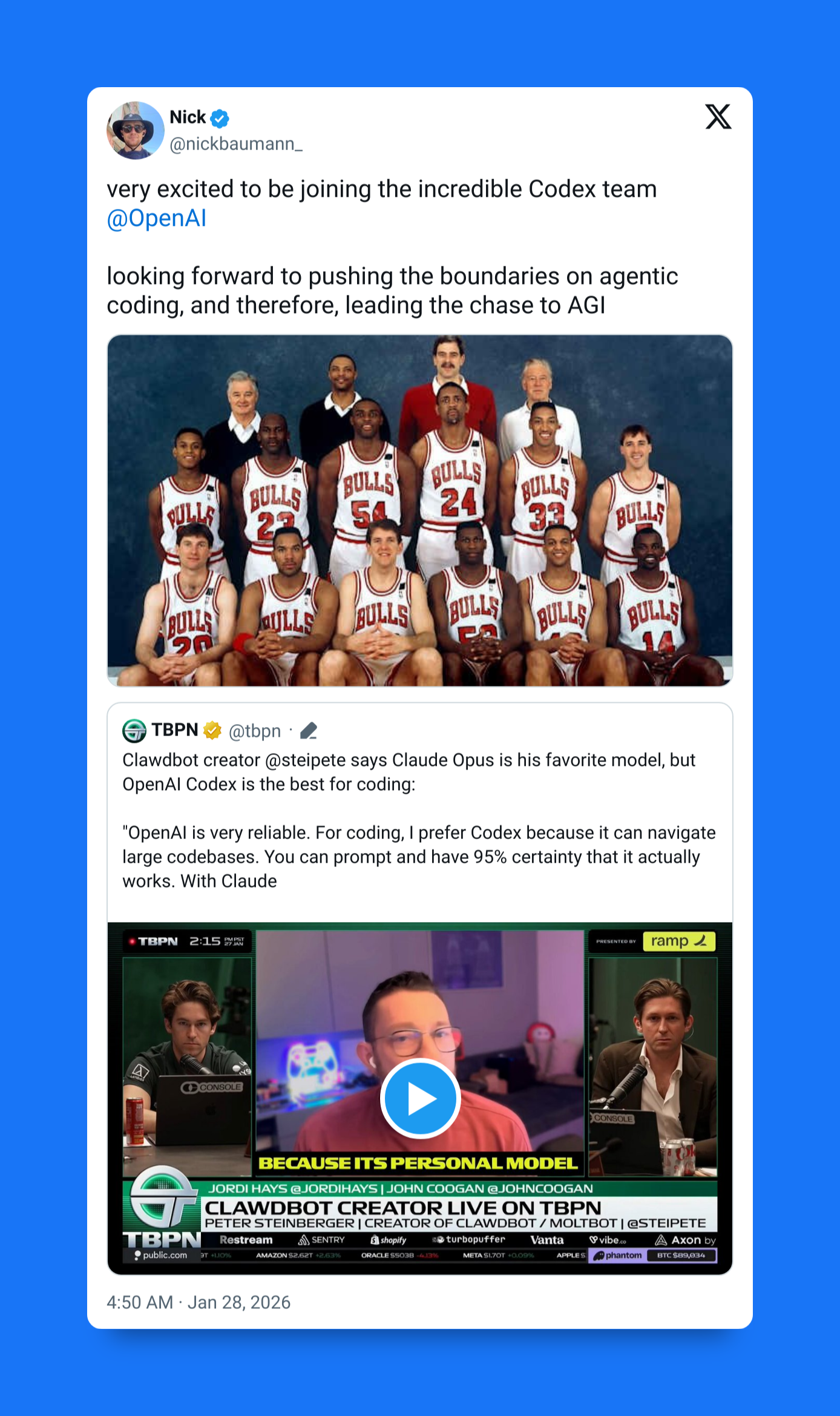

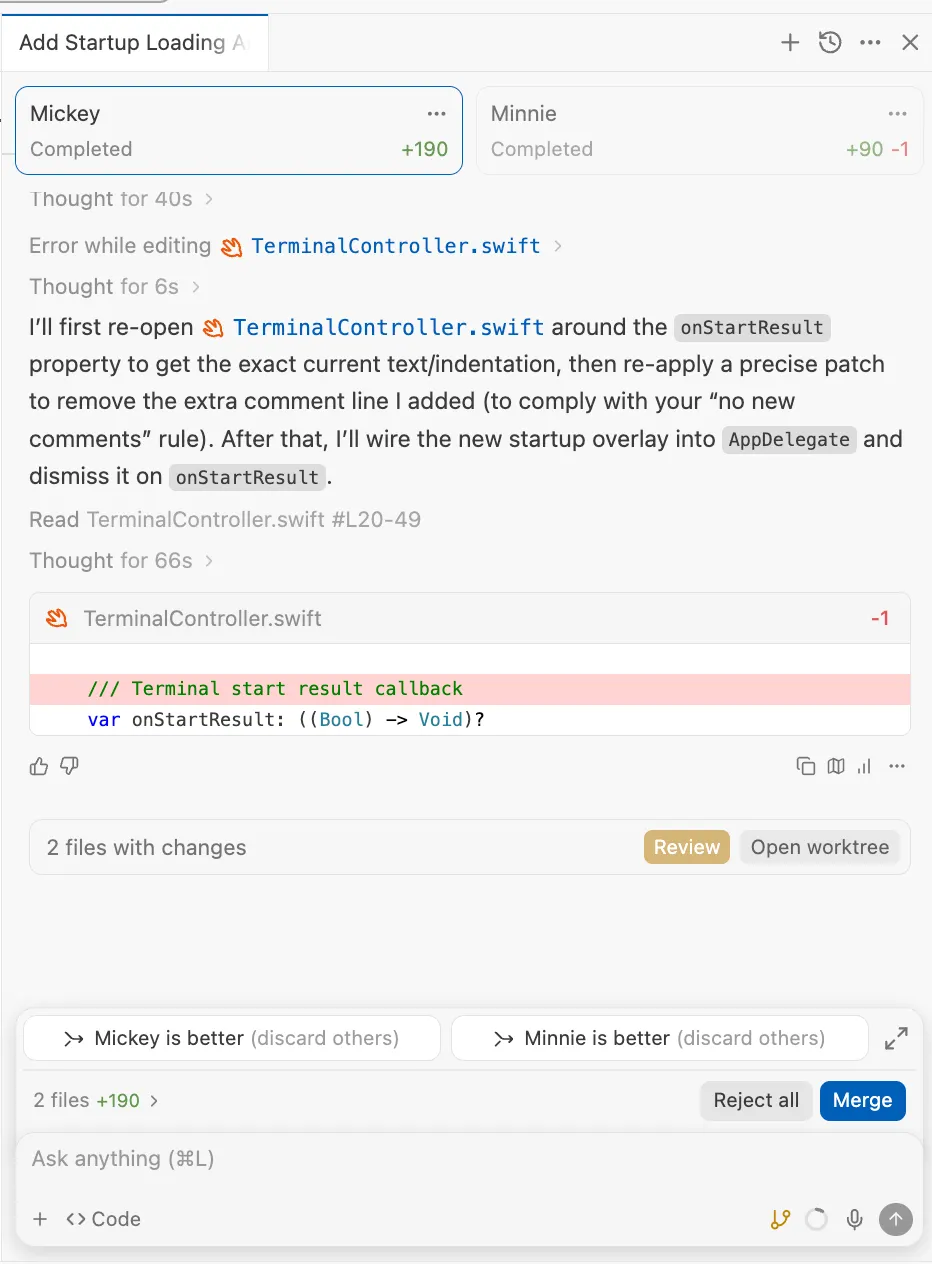

Battle Groups: removing brand bias

We need Battle Groups because many of us are biased towards certain models by default.

Instead of picking specific models, you choose a group and Windsurf randomly selects contenders for you.

You don’t see which model is which until after you vote.

So you’re forced to judge based on:

- clarity

- correctness

- style

- how well it fits your codebase

Not reputation.

Once you pick a winner, Windsurf reveals the models and reshuffles things for the next round. It’s part productivity tool, part science experiment.
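The blind part is easy to picture in code. This is a rough sketch of the flow described above (random pick from a group, anonymous labels, reveal after the vote), not Windsurf's actual logic. The model names and the function names are made up for the example.

```python
import random
from typing import Dict, List, Optional


def start_blind_round(group: List[str], k: int = 2,
                      rng: Optional[random.Random] = None) -> Dict[str, str]:
    """Randomly pick k distinct models and hide them behind neutral labels."""
    rng = rng or random.Random()
    contenders = rng.sample(group, k)
    return {f"Candidate {chr(ord('A') + i)}": model for i, model in enumerate(contenders)}


def visible_labels(round_: Dict[str, str]) -> List[str]:
    """What the user sees while judging: labels only, no model names."""
    return sorted(round_)


def reveal(round_: Dict[str, str], voted_label: str) -> str:
    """After the vote, map the chosen label back to the real model name."""
    return round_[voted_label]


group = ["model-x", "model-y", "model-z"]  # hypothetical battle group
round_ = start_blind_round(group, rng=random.Random(0))
labels = visible_labels(round_)
winner = reveal(round_, "Candidate A")
```

Calling `start_blind_round` again gives you the reshuffle for the next round.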

Your choices actually matter (beyond your editor)

Arena Mode isn’t just for your local workflow.

Every time you pick a winner:

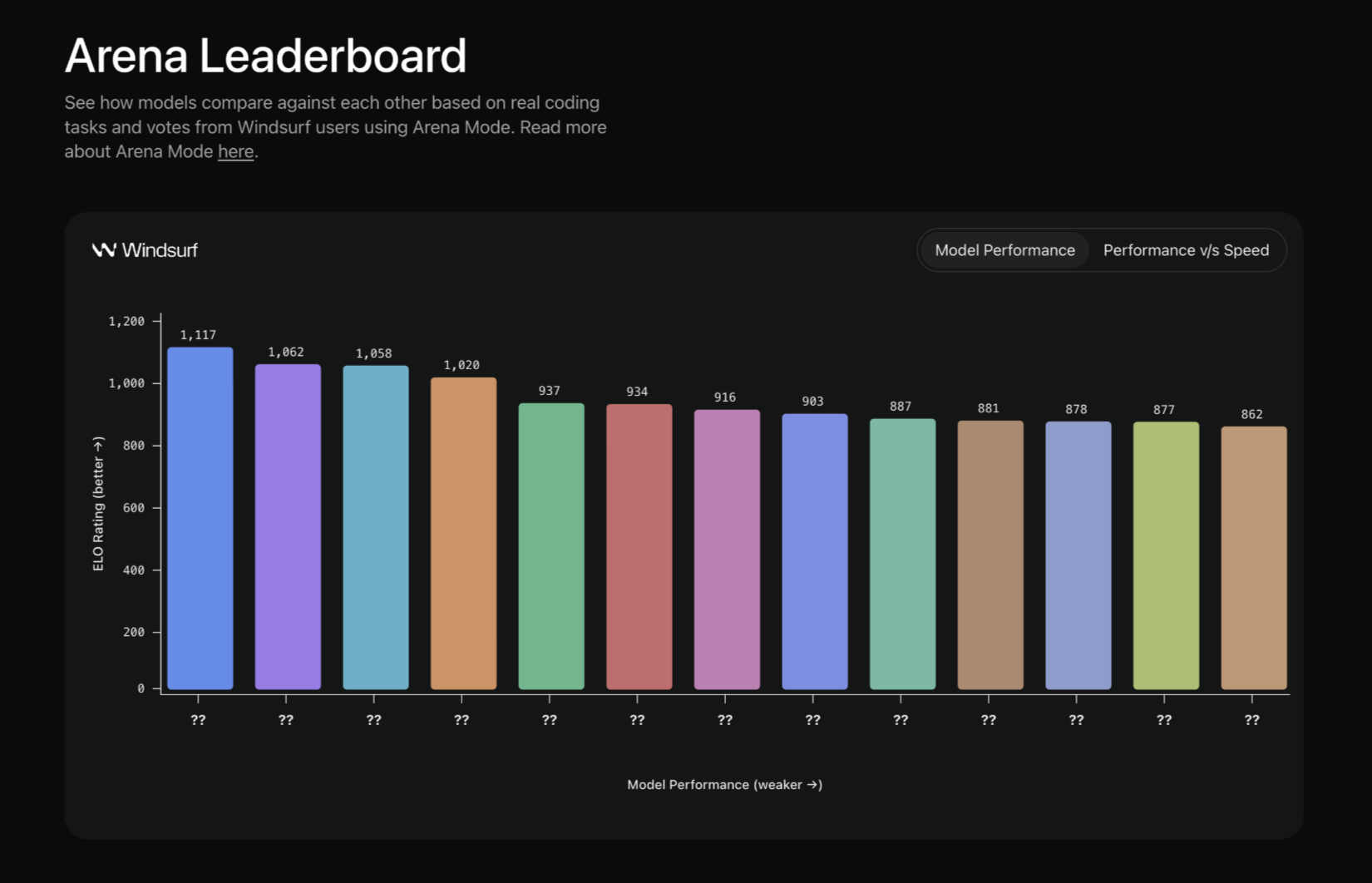

- Windsurf builds a personal leaderboard that reflects what works best for you

- Your votes also feed into a global leaderboard based on real coding tasks

That’s the big idea behind this new wave of Windsurf updates: model evaluation shouldn’t live in abstract benchmarks. It should come from actual developers, working in real repos, solving real problems.

Arena Mode turns everyday coding into feedback.
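How the leaderboards are scored isn't spelled out, so treat the following as one plausible way to turn votes into a ranking, not the real formula. It is a plain win-rate tally. The `Vote` shape, the `leaderboard` function, and the model names are assumptions for illustration.

```python
from collections import Counter
from typing import Iterable, List, Tuple

# One vote = (winning model, every model that competed in that round).
Vote = Tuple[str, List[str]]


def leaderboard(votes: Iterable[Vote]) -> List[Tuple[str, int, int, float]]:
    """Return (model, wins, rounds played, win rate), best win rate first."""
    wins: Counter = Counter()
    played: Counter = Counter()
    for winner, contenders in votes:
        wins[winner] += 1
        played.update(contenders)
    rows = [(m, wins[m], played[m], wins[m] / played[m]) for m in played]
    # Sort by win rate, then by rounds played, then by name for a stable order.
    return sorted(rows, key=lambda r: (-r[3], -r[2], r[0]))


votes = [
    ("model-x", ["model-x", "model-y"]),
    ("model-x", ["model-x", "model-z"]),
    ("model-y", ["model-y", "model-z"]),
]
board = leaderboard(votes)
```

With only a handful of votes a tally like this is noisy, which is a good reason to keep voting on real tasks before trusting your own ranking.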

When Arena Mode shines the most

Arena Mode is especially valuable when:

- You’re doing a non-trivial refactor and want different approaches

- You’re debugging something gnarly and want multiple hypotheses

- You’re testing a new or unfamiliar model without committing to it

- You want to compare “safe and boring” vs “bold and clever” solutions

It’s less useful for tiny edits, but for anything that requires judgment, tradeoffs, or taste, it’s great.

A couple things to know before jumping in

It’s not totally plug-and-play:

- Your project needs to be a git repo

- Only git-tracked files are copied into Arena worktrees by default (extra setup needed for untracked files)

Obviously not deal-breakers, but worth knowing so you don’t get confused the first time you try it.
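A quick way to see what would be left behind is to look at the untracked entries in `git status --porcelain`, which marks them with `??`. The helper below is a small sketch that parses that output. The function name and the sample text are made up, and it does not handle paths that git quotes because of special characters.

```python
from typing import List


def untracked_paths(porcelain_output: str) -> List[str]:
    """Extract untracked paths from `git status --porcelain` (v1) output.

    Each line is a two-character status, a space, then the path.
    Untracked entries use the status `??`.
    """
    return [
        line[3:]
        for line in porcelain_output.splitlines()
        if line.startswith("?? ")
    ]


# Sample output, as it might look in a repo with a local .env and a fixtures file.
sample = " M src/app.py\n?? .env\n?? data/fixtures.json\n"
missing = untracked_paths(sample)
```

Anything in that list (a local `.env`, generated fixtures, and so on) won't be in the Arena worktrees unless you do the extra setup first.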

Even more upgrades

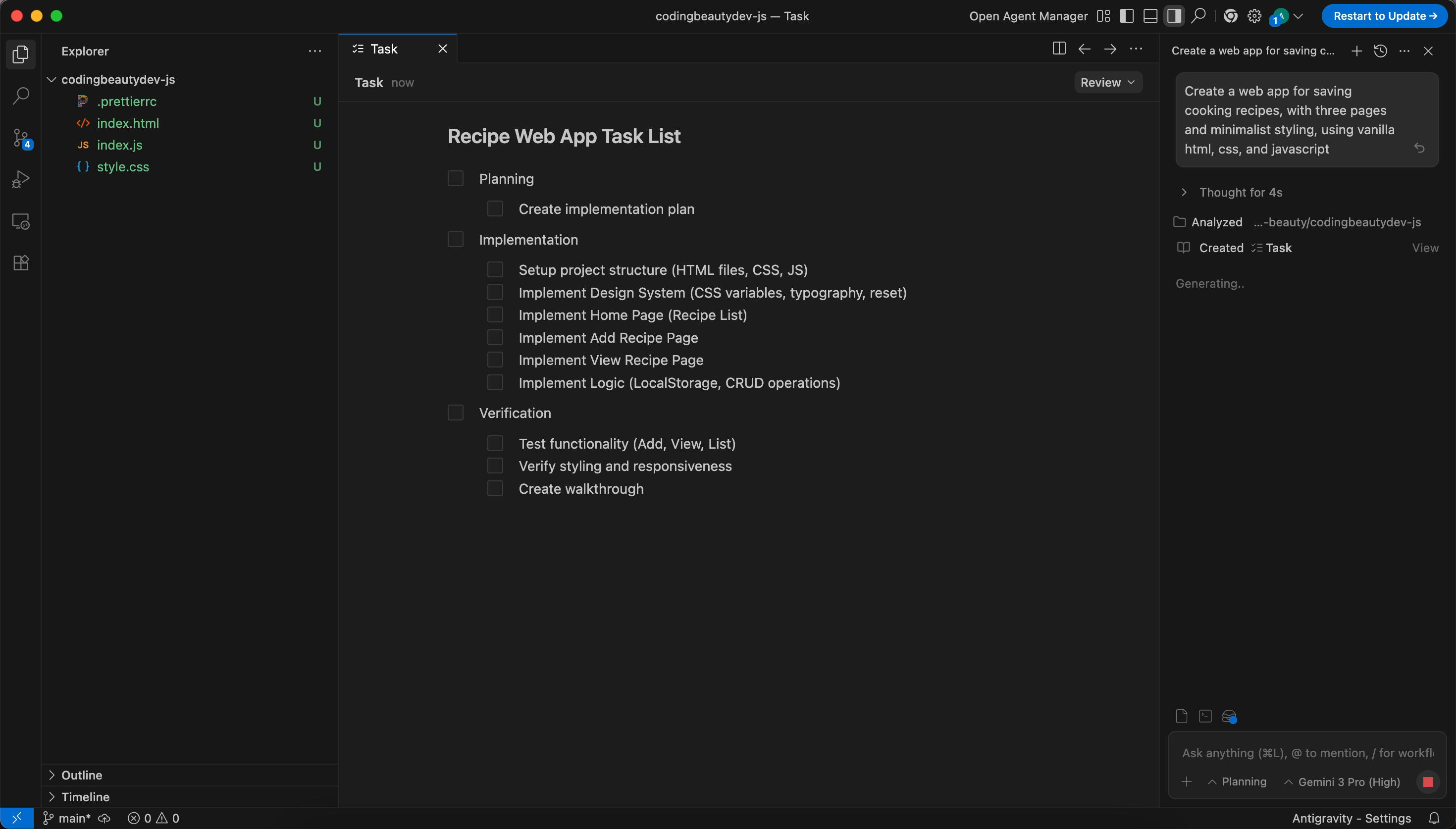

Windsurf has also added a new Plan Mode feature for step-by-step planning before code generation.

It pairs nicely with Arena:

- Plan the solution

- Let two models implement it

- Keep the better one

Simple and short.

Instead of telling you which model is best, with Arena Mode Windsurf is saying:

“You decide. In your code. On your problems.”