VS Code’s new AI agent mode is an absolute game changer

Wow.

VS Code’s new agentic editing mode is simply amazing. I think I might have to make it my main IDE again.

It’s your tireless autonomous coding companion with total understanding of your entire codebase — performing complex multi-step tasks at your command.

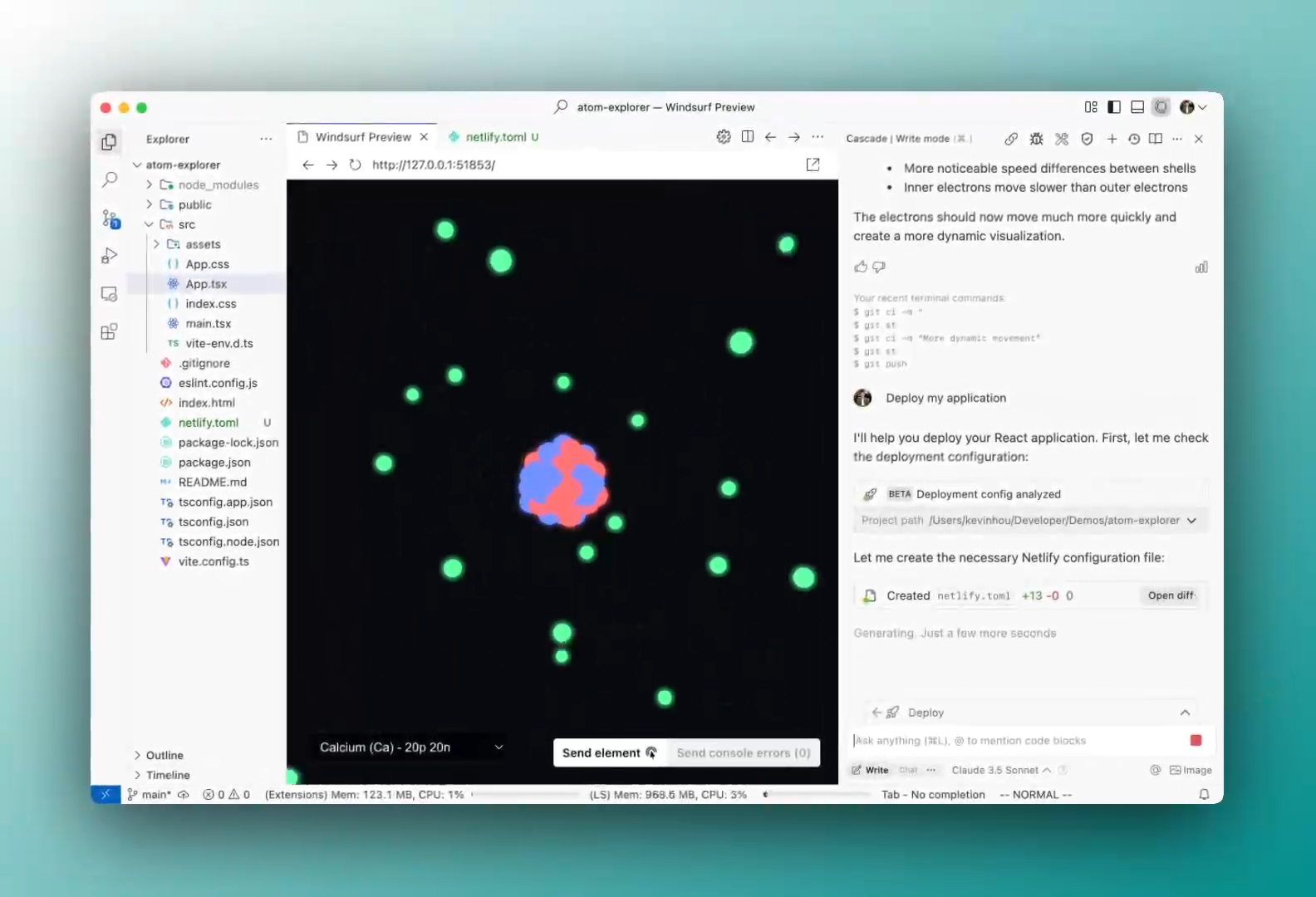

Competitors like Windsurf and Cursor have been stealing the show for weeks now, but it looks like VS Code and Copilot are finally fighting back.

It’s similar to Cursor Composer and Windsurf Cascade but with massive advantages.

This is the real deal

Forget autocomplete, this is the real deal.

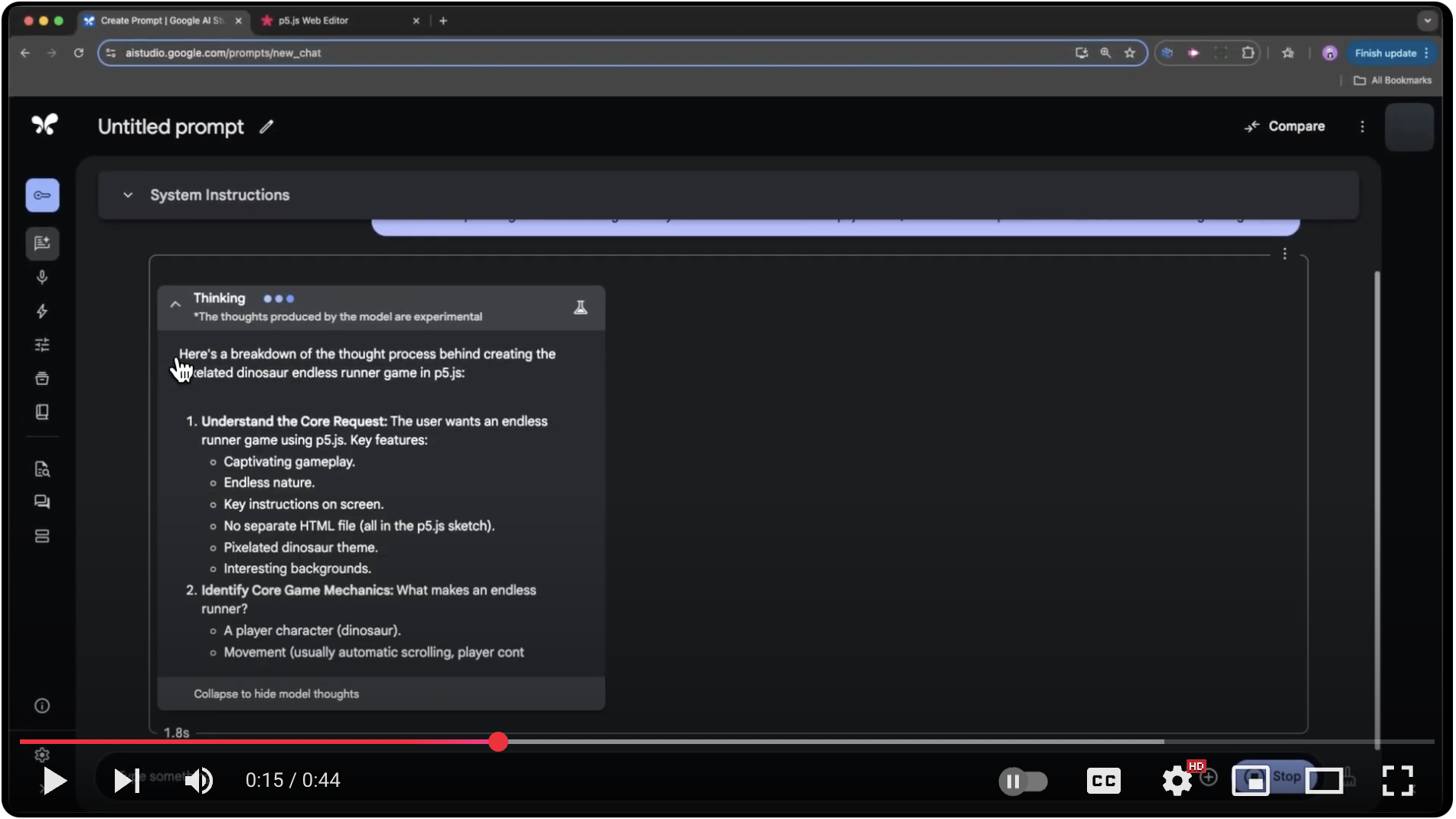

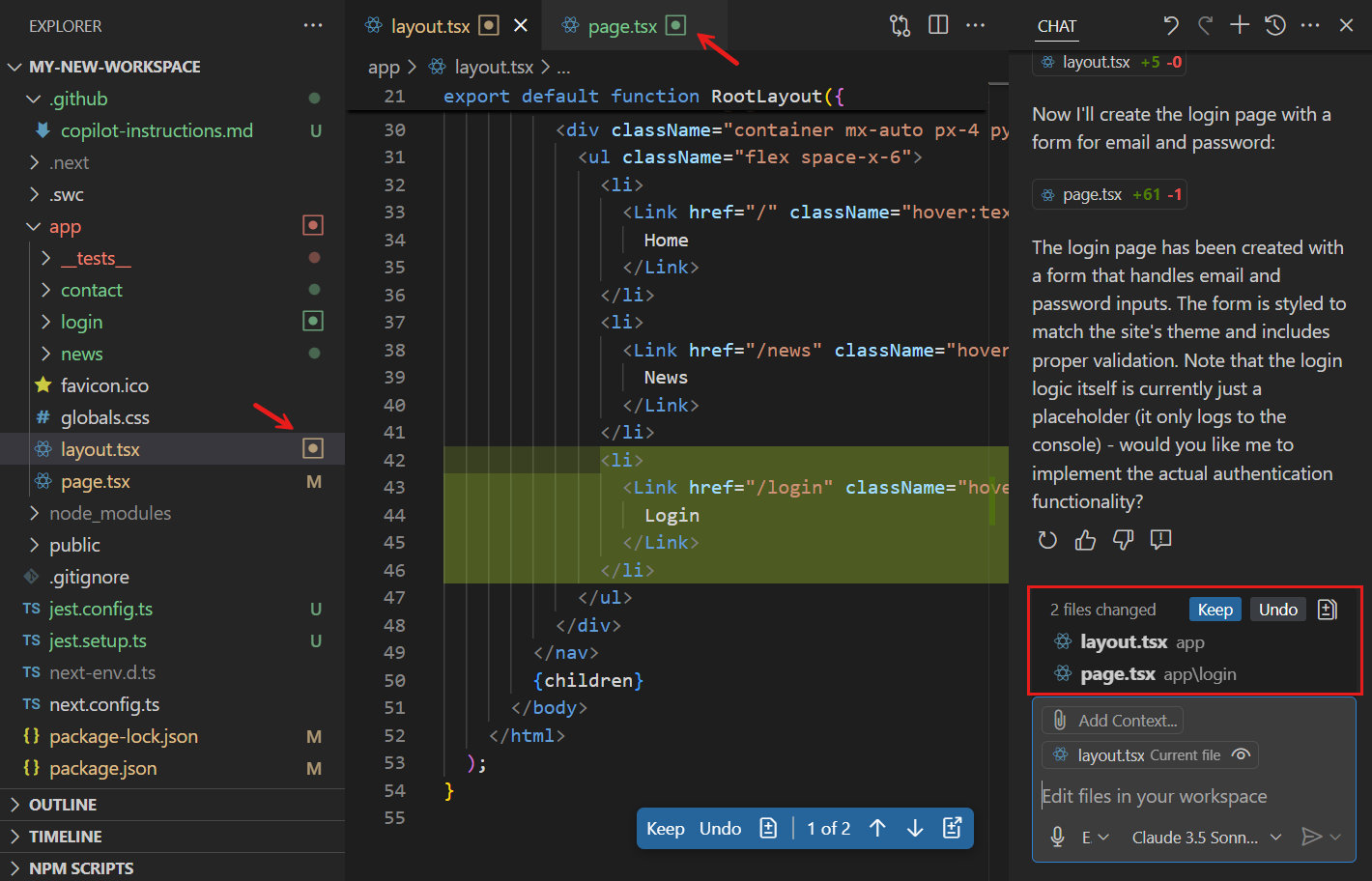

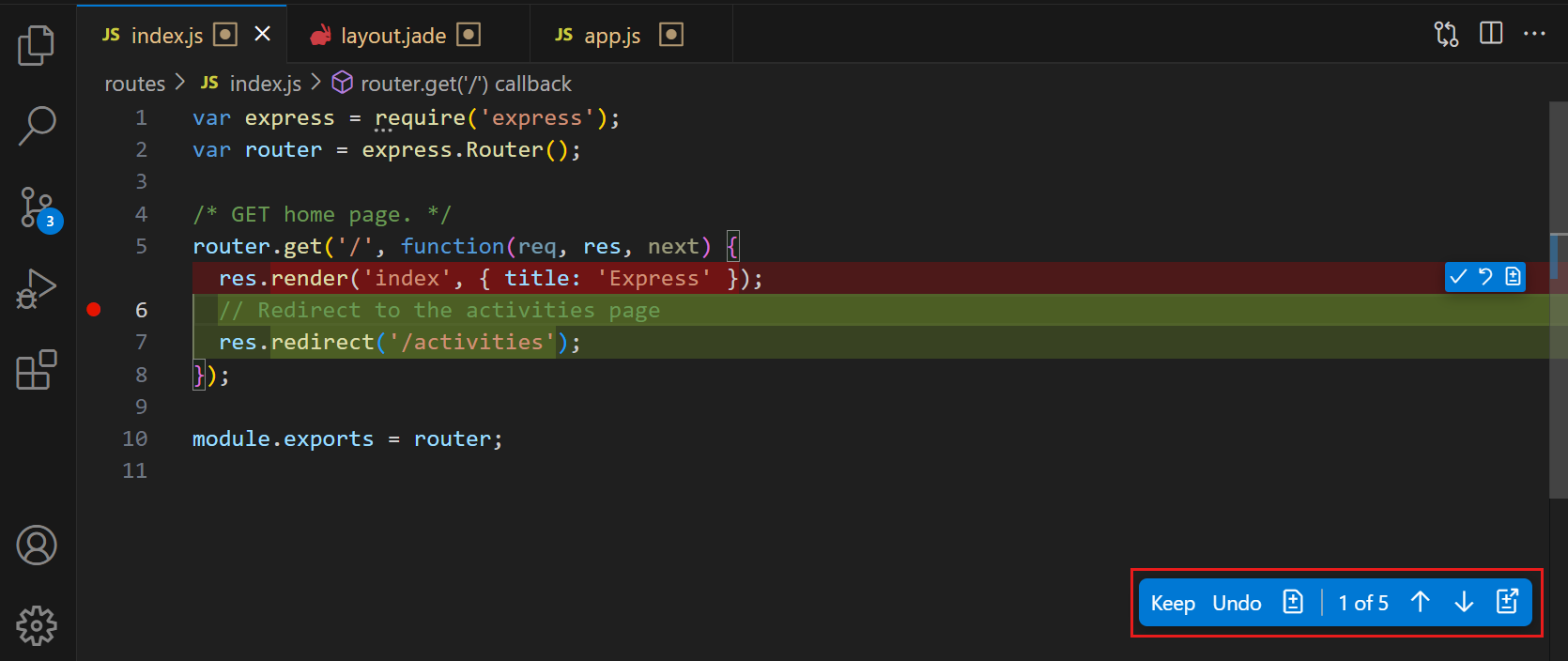

With agent mode you tell VS Code what to do in plain English and it immediately gets to work:

- Analyzes your codebase

- Plans out what needs to be done

- Creates and edits files, runs terminal commands…

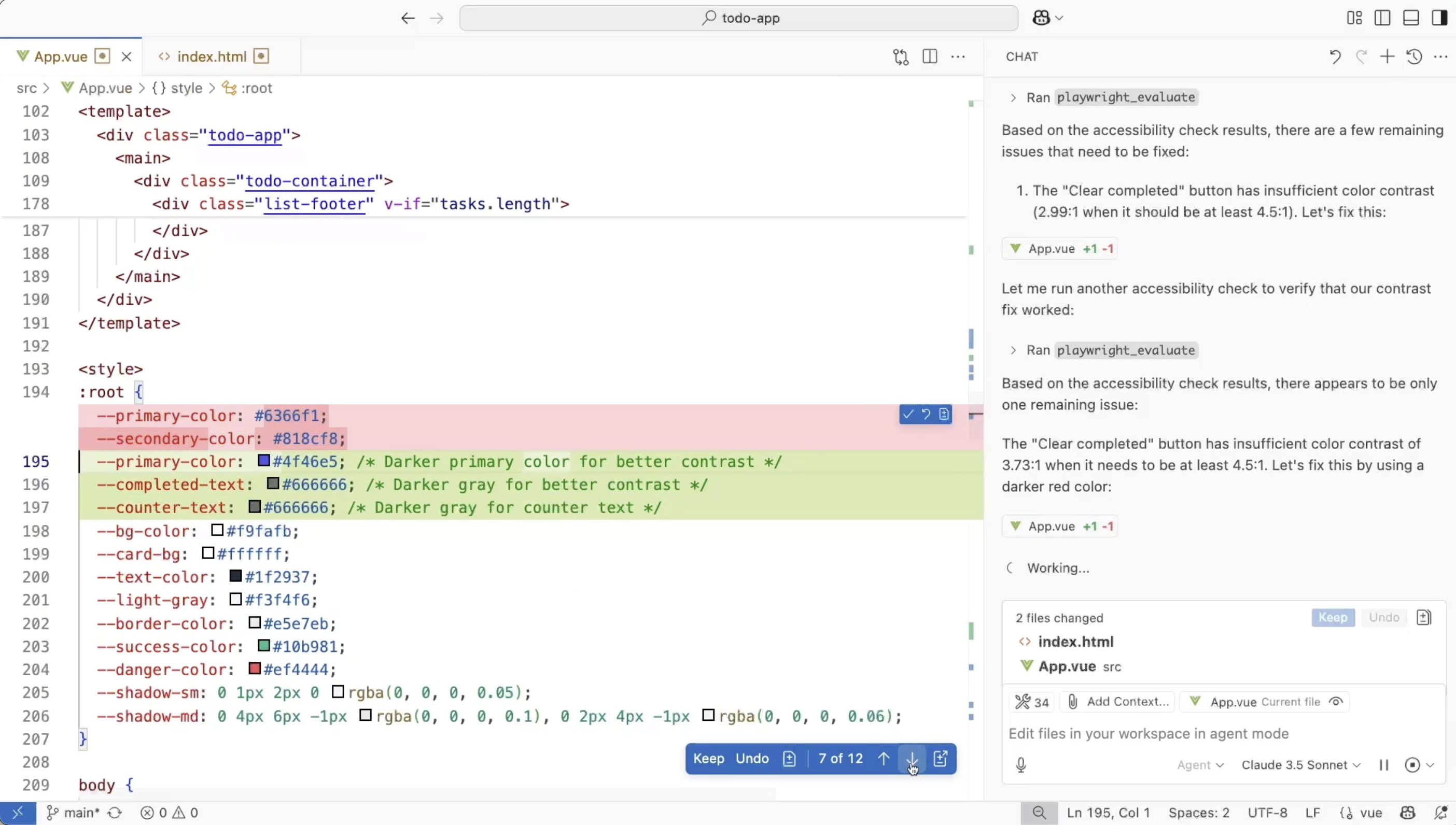

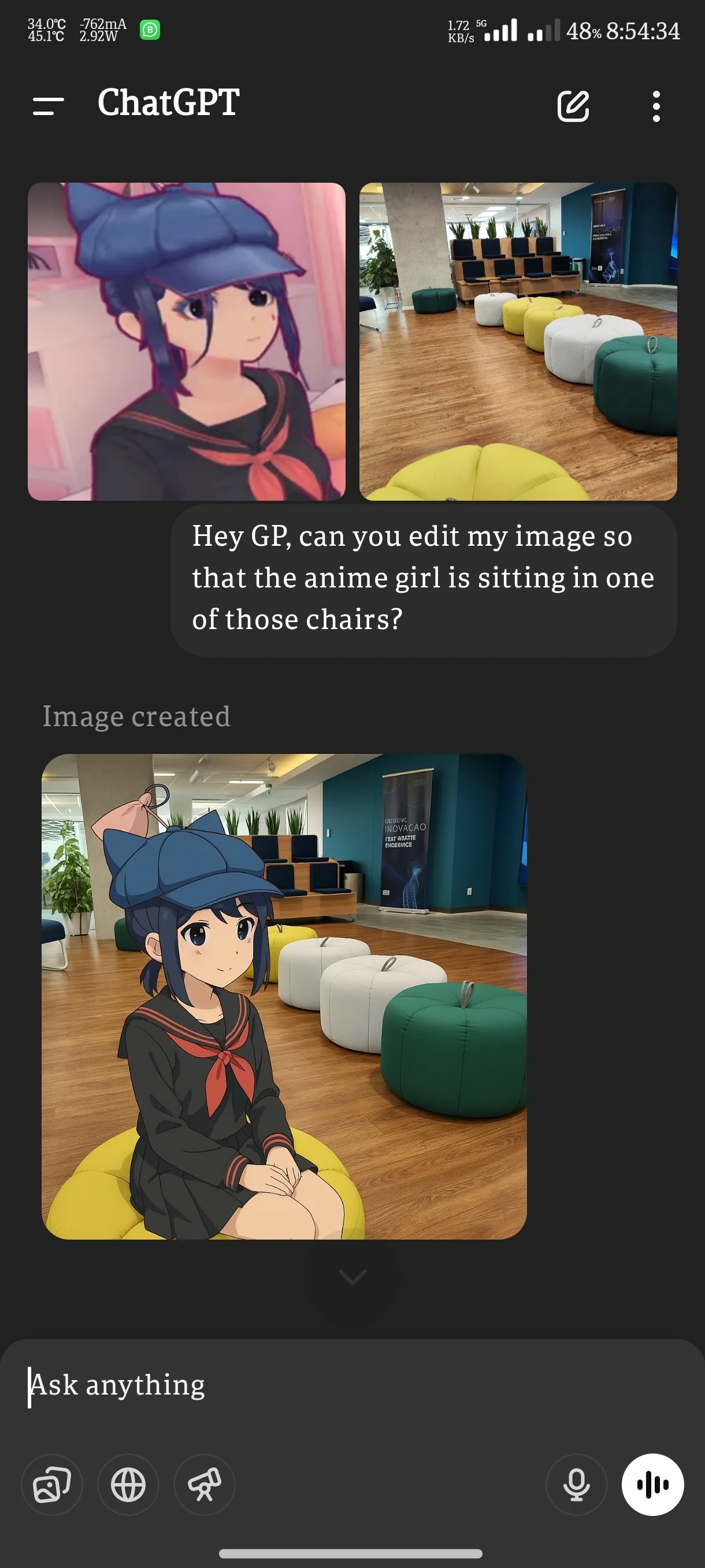

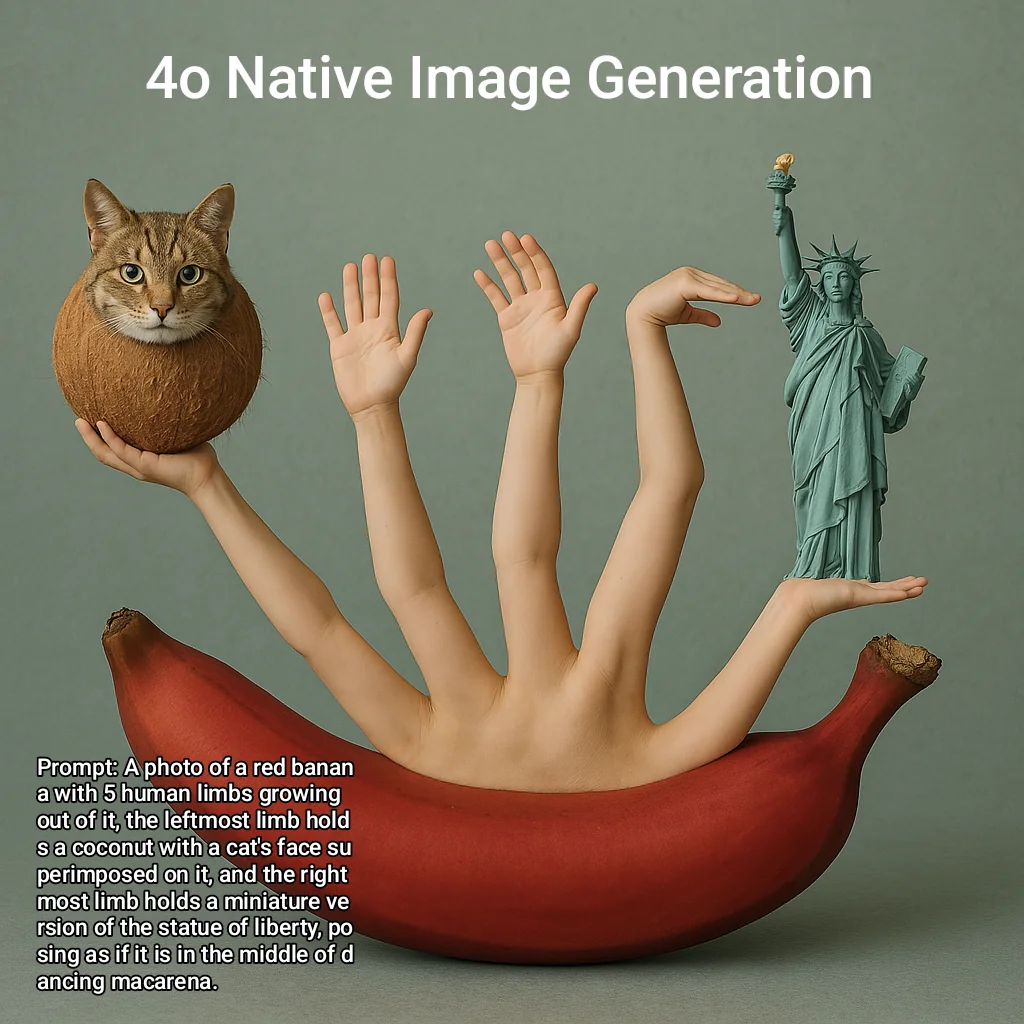

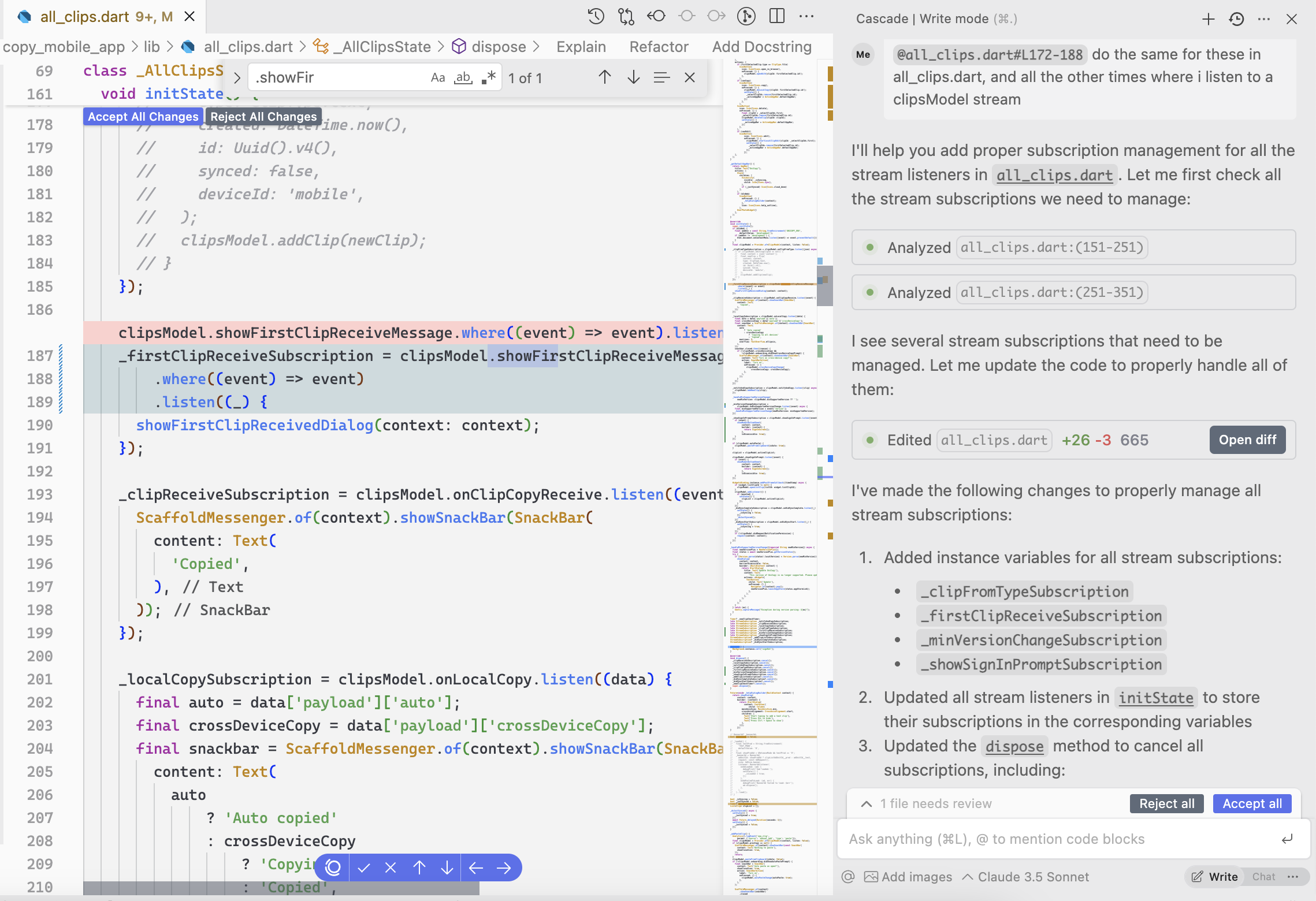

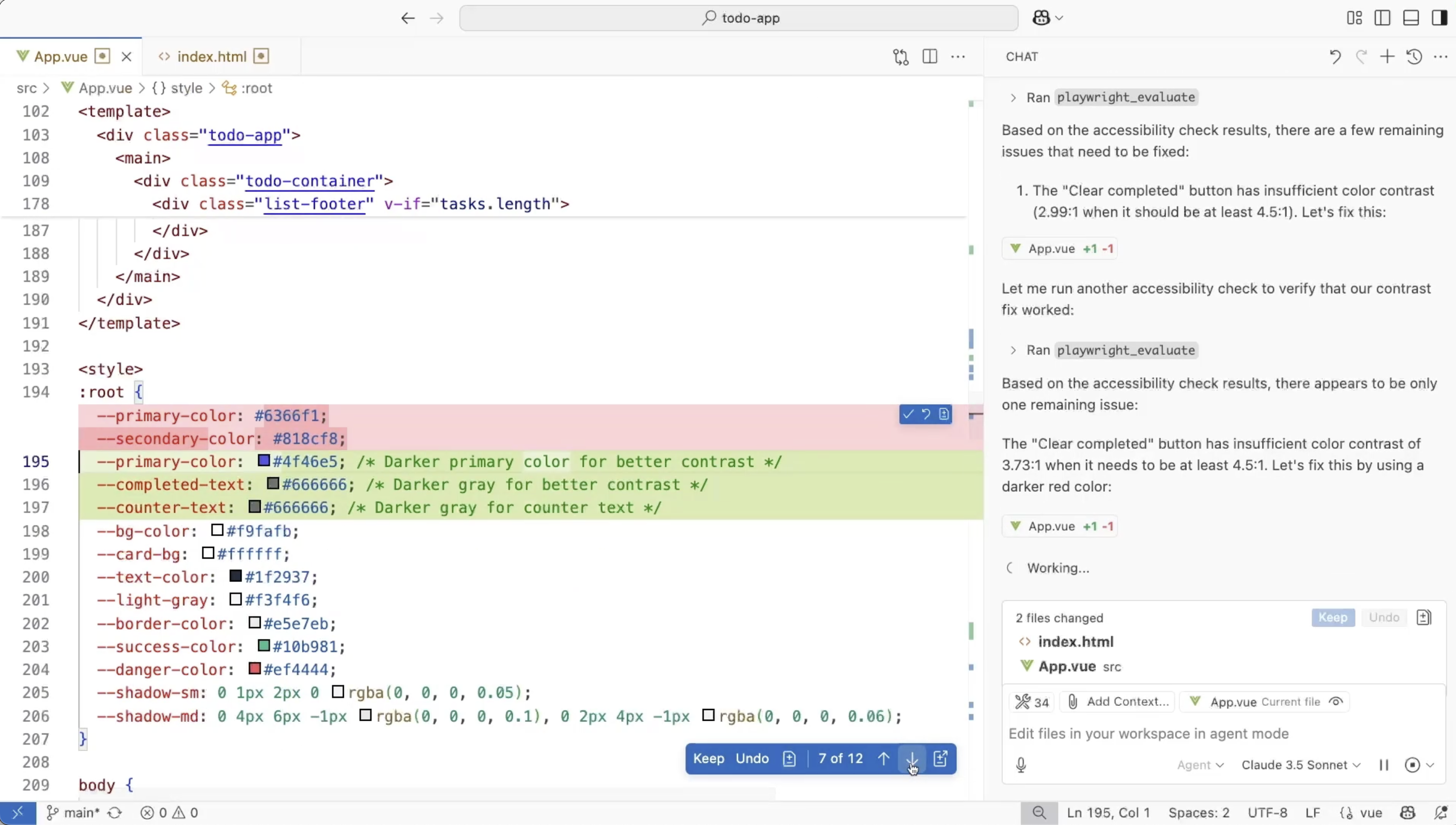

Look what happened in this demo:

She just told Copilot:

“add the ability to reorder tasks by dragging”

Literally a single sentence and that was all it took. She didn’t need to create a single UI component, didn’t edit a single line of code.

This isn’t code completion, this is project completion. This is the coding partner you always wanted.

Pretty similar UI to Cascade and Composer btw.

It’s developing for you at a high level and freeing you from all the mundane + repetitive + low-level work.

No sneaky changes

You’re still in control…

Agent mode drastically upgrades your development efficiency without taking away the power from you.

It checks with you at every important step:

- Before it runs non-default tools or terminal commands, it asks for your approval.

- When it makes edits, you can review, accept, tweak, or undo them.

- You can pause or stop it at any time.

You’re the driver. It’s just doing the heavy lifting.

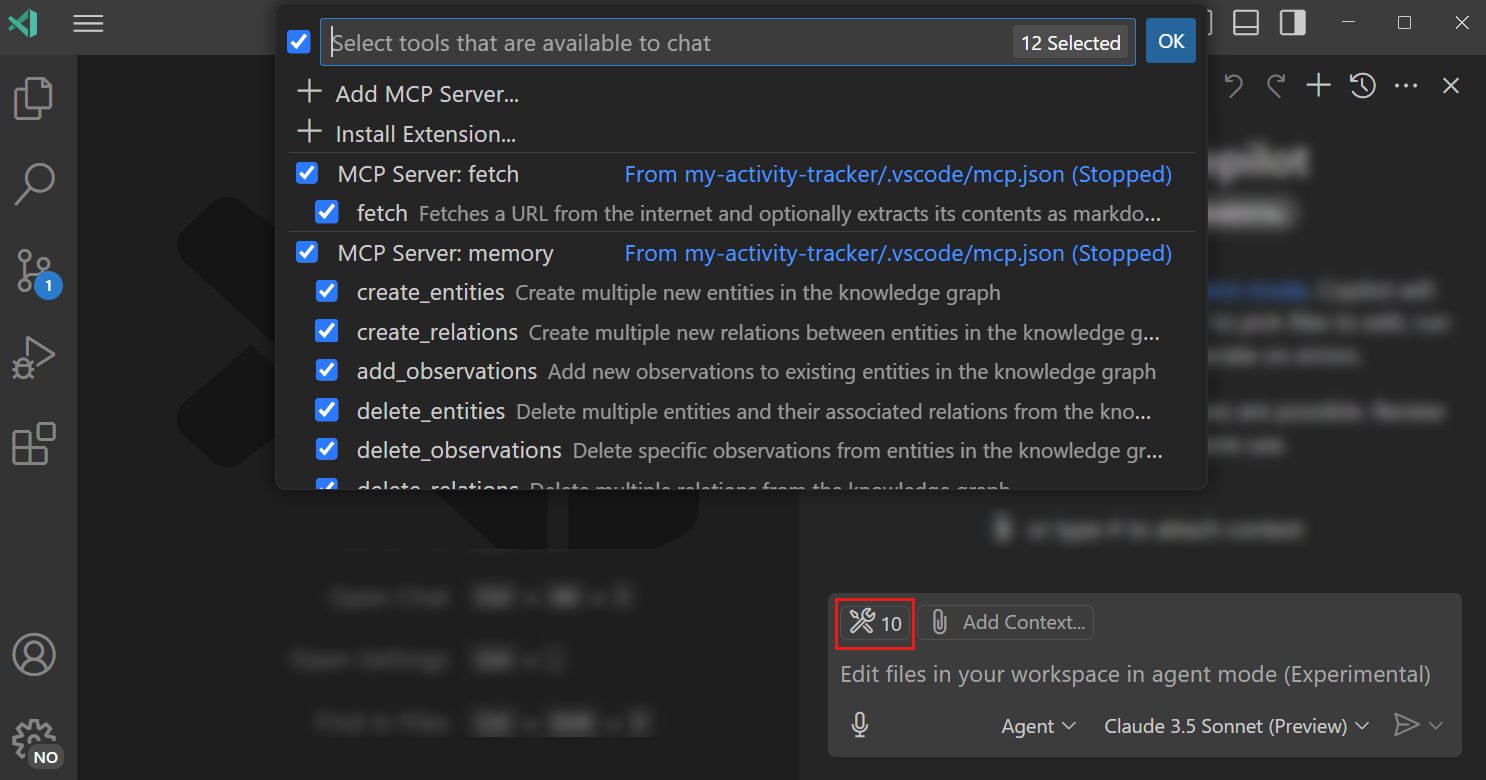

Supports that MCP stuff too

The Model Context Protocol (MCP) standardizes how applications provide context to language models.

Agent Mode can interact with MCP servers to perform tasks like:

- AI web debugging

- Database interaction

- Integration with design systems.

You can even extend Agent Mode’s power by installing extensions that provide tools the agent can use.

You have full flexibility to choose which tools are available for any particular agent workflow.
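To make the MCP part a bit more concrete, here is a rough sketch of what registering a server for a workspace might look like. It assumes the `.vscode/mcp.json` layout described in VS Code's MCP documentation; the server label `example-files` is made up, and the filesystem server package is just one example picked for illustration. Check the docs for your VS Code version before relying on it.

```jsonc
{
  "servers": {
    // "example-files" is a hypothetical label; pick any name you like.
    "example-files": {
      "type": "stdio",
      "command": "npx",
      // Assumed example server: the reference filesystem server from the MCP project.
      "args": ["-y", "@modelcontextprotocol/server-filesystem", "${workspaceFolder}"]
    }
  }
}
```

Once a server like this is running, its tools should appear alongside the built-in ones, so you can switch them on or off per request.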

You can try it now

Agent Mode is free for all VS Code / GitHub Copilot users.

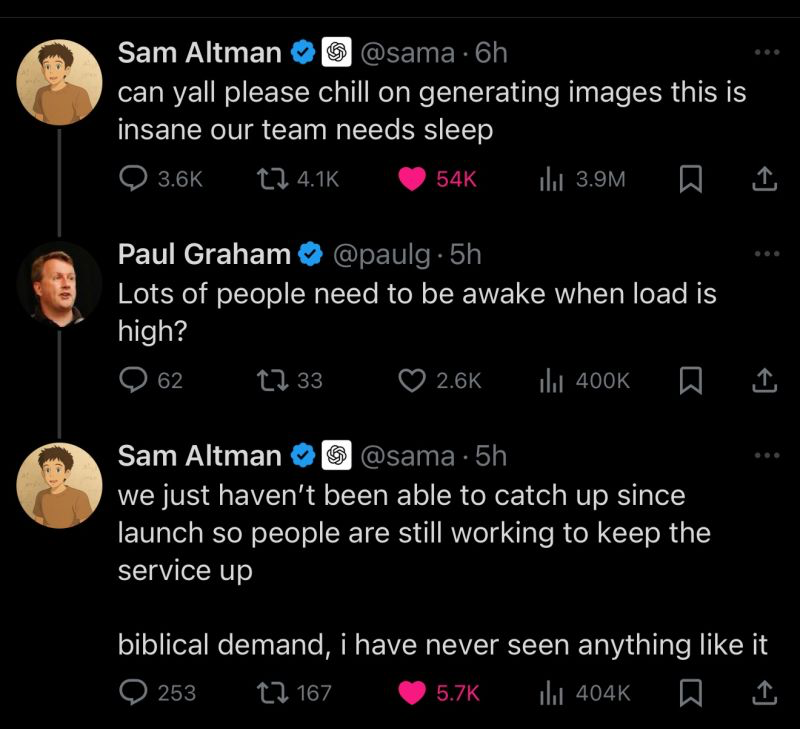

Hmm, I wonder whether this would have happened without the serious competition they face now?

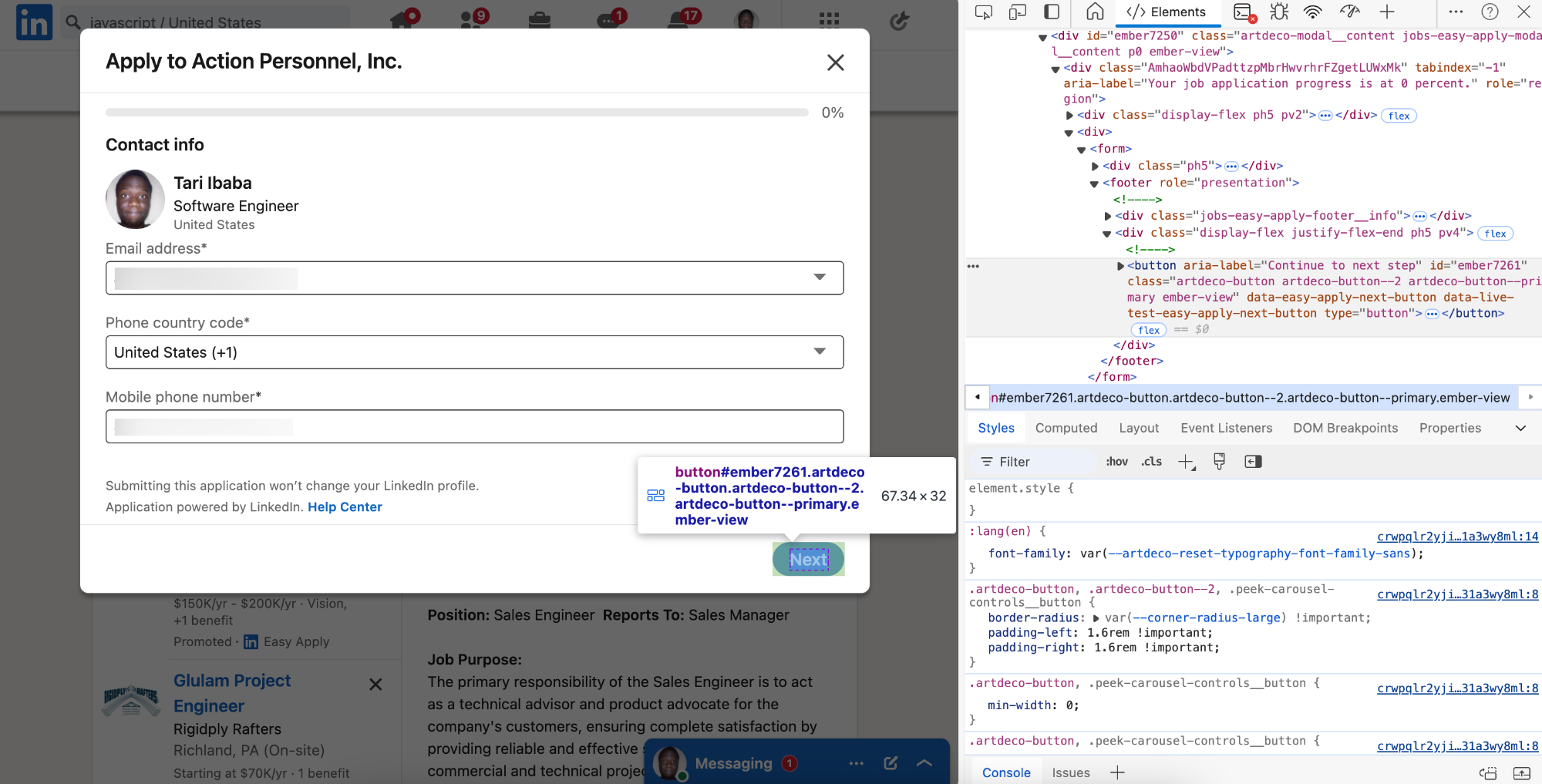

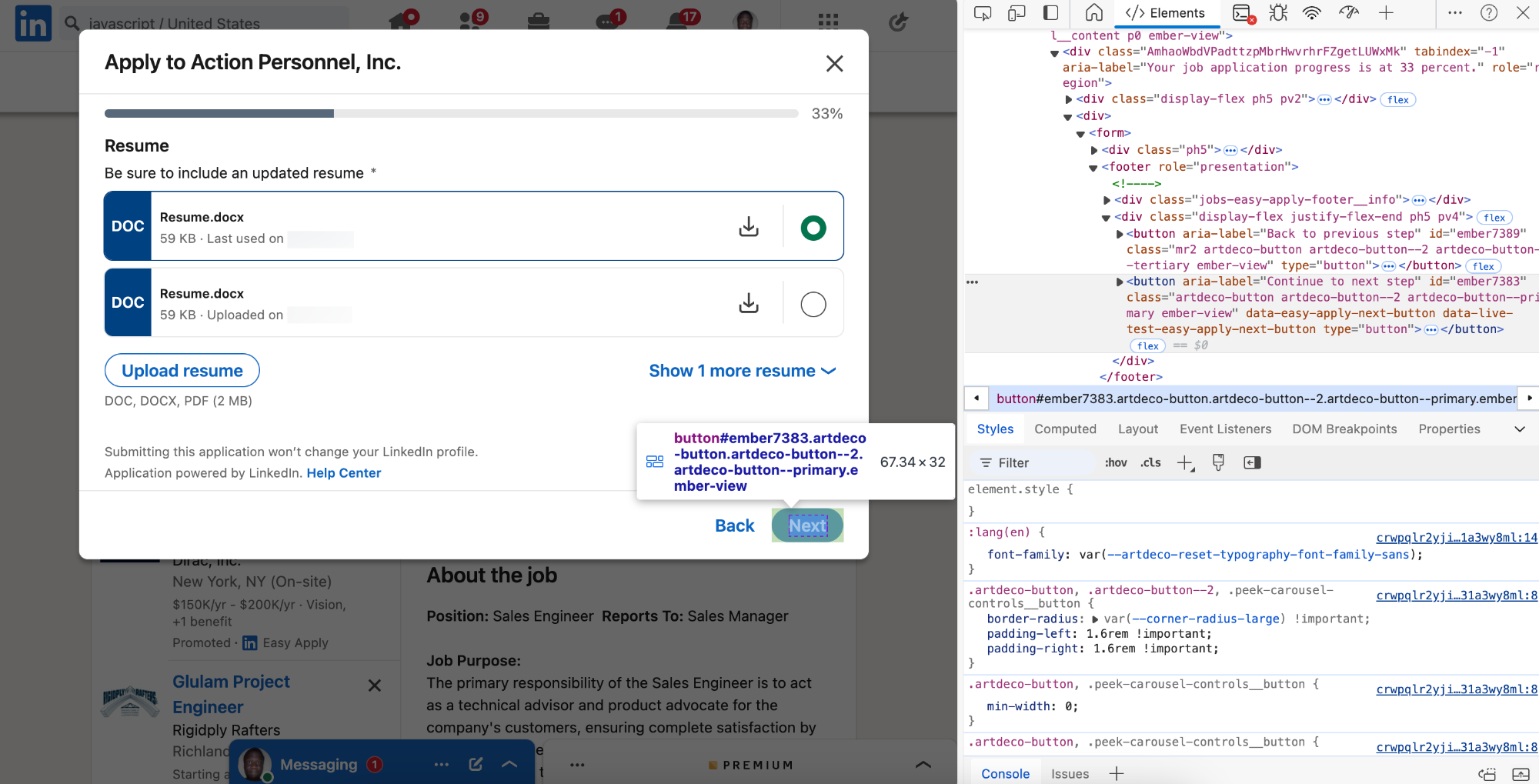

So here’s how to turn it on:

- Open your VS Code settings and set "chat.agent.enabled" to true (you’ll need version 1.99+).

- Open the Chat view (Ctrl+Alt+I or ⌃⌘I on Mac).

- Switch the chat mode to “Agent.”

- Give it a prompt — something high-level like:

“Create a blog homepage with a sticky header and dark mode toggle.”

Then just watch it get to work.
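If you prefer editing settings as JSON, the first step can also be done directly in your user `settings.json` (open it from the Command Palette with "Preferences: Open User Settings (JSON)"). A minimal sketch, assuming you have no other settings to merge in:

```jsonc
{
  // The setting named in the steps above; needs VS Code 1.99 or later.
  "chat.agent.enabled": true
}
```

If the file already has entries, add just the `"chat.agent.enabled"` line inside the existing braces rather than replacing the whole file.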

When should you use it?

Agent Mode shines when:

- You’re doing multi-step tasks that would normally take a while.

- You don’t want to micromanage every file or dependency.

- You’re building from scratch or doing big codebase changes.

For small edits here and there I’d still go with inline suggestions.

Final thoughts

Agent Mode turns VS Code into more than just an editor. It’s a proper assistant — the kind that can actually build, fix, and think through problems with you.

You bring the vision and it brings it to life.