Claude Code’s new voice mode just changed AI coding forever

Anthropic is on fire: they just shipped a new voice mode for Claude Code, and it could change the way many developers interact with AI coding tools.

Instead of carefully typing every instruction, you can now speak your intent directly to an AI agent that understands and works inside your codebase.

It moves you from prompt-writing toward near-real-time collaboration: you explain problems, delegate tasks, and refine instructions naturally, at something much closer to the speed of thought.

And it’s not just generic speech recognition. It was built specifically for coding.

With one-command activation, real-time streaming transcription, and seamless voice-plus-keyboard input, Claude Code starts to feel less like a chatbot and more like a coding partner.

Enable it with 1 command

The setup is intentionally lightweight.

- You just type `/voice` to enable voice mode.

- No external dictation tool or additional setup is required.

- Voice becomes simply another input layer inside the existing workflow.

Fine-tuned for coding, not generic conversation

Claude Code voice mode isn’t just speech added to a chatbot.

The key point is that the transcription itself is optimized for coding workflows, not everyday conversation. That means it’s tuned to handle the kinds of things developers actually say when working:

- syntax-heavy phrases

- function and class names

- file paths and CLI commands

- library names and technical terminology

So that means we can say things like:

- “Open `auth-middleware.ts` and trace where the token validation fails.”

- “Refactor the `UserService` class to use dependency injection.”

- “Run the test suite and show me the failing cases.”

And Claude Code can reliably capture and act on those instructions.

Voice becomes a way to direct a coding agent, not just chat with one.

Zero-cost transcription lowers the barrier

This is one of the biggest selling points:

Voice transcription tokens are free.

This removes a major adoption barrier.

Benefits include:

- No need to worry about usage costs while speaking

- Easier to use voice for rough or exploratory prompts

- Encourages natural thinking out loud during development

If transcription were metered, people would hesitate to use it casually. Removing that friction makes voice a default option when it’s faster.

Real-time streaming is what makes it usable

The feature supports real-time streaming transcription.

This means:

- Your speech appears in the prompt as you talk

- Voice and keyboard input work together

- You can seamlessly switch between speaking and typing

Example hybrid flow:

- Speak the high-level task

- Type a specific filename or function

- Continue speaking to explain constraints or context

This hybrid interaction is what makes voice mode genuinely useful instead of gimmicky.
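To make the hybrid flow concrete, here is a minimal Python sketch of how a prompt could be assembled from streamed speech and typed text. It is purely illustrative and not how Claude Code is implemented: `PromptBuffer`, its methods, and the file path `src/auth/session.ts` are all hypothetical names invented for this example. It assumes the transcriber revises its partial guess as more audio arrives, so each update replaces the previous one.

```python
from dataclasses import dataclass, field


@dataclass
class PromptBuffer:
    """Hypothetical prompt buffer that merges typed text with streamed speech."""

    committed: list[str] = field(default_factory=list)  # finalized segments
    partial: str = ""  # in-progress transcript, replaced on every update

    def stream_partial(self, transcript: str) -> None:
        # Assumption: each streaming update replaces the previous partial
        # transcript instead of appending to it.
        self.partial = transcript

    def finish_speech(self) -> None:
        # Commit the latest partial transcript, if there is one.
        if self.partial:
            self.committed.append(self.partial)
            self.partial = ""

    def type_text(self, text: str) -> None:
        # Typing finalizes whatever was being spoken, then adds the typed text.
        self.finish_speech()
        self.committed.append(text)

    def render(self) -> str:
        # The prompt as the user would see it right now.
        parts = self.committed + ([self.partial] if self.partial else [])
        return " ".join(parts)


buf = PromptBuffer()
buf.stream_partial("Refactor the")              # speech appears as you talk
buf.stream_partial("Refactor the function in")  # the partial is revised
buf.type_text("src/auth/session.ts")            # type the exact filename
buf.stream_partial("without changing the public API")
buf.finish_speech()
print(buf.render())
# Refactor the function in src/auth/session.ts without changing the public API
```

Keeping the in-progress transcript separate from the committed segments is one simple way to let typed text land in the right place while speech is still being revised.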

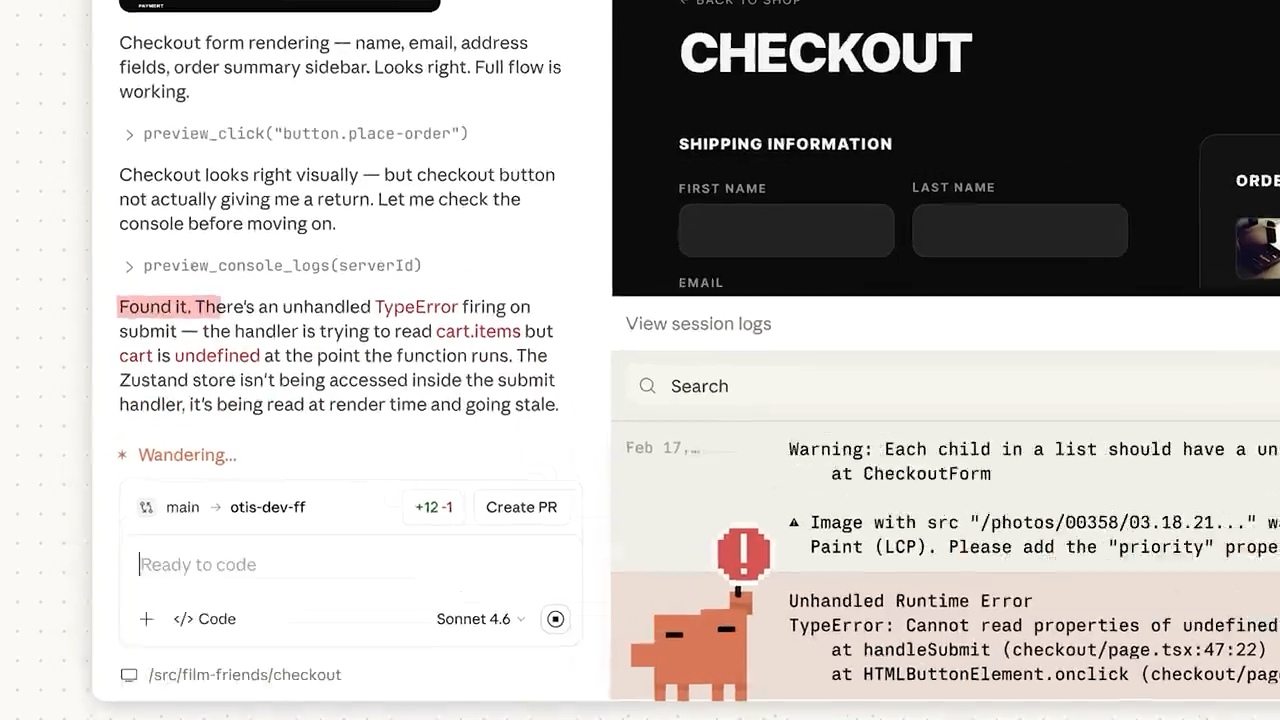

How to use it

The workflow follows a simple three-step loop.

1. Activate

- Type `/voice` in Claude Code.

- If your account has access, voice mode will enable immediately.

2. Speak

- Hold Space to talk.

- Release the key when finished.

- Your speech is transcribed directly into the prompt.

You can mix:

- spoken instructions

- typed edits

- additional clarifications

in the same prompt.
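The hold-to-talk behavior described in this step can be pictured as a tiny state machine. The Python sketch below is an assumption-laden illustration, not Claude Code's actual implementation: `PushToTalk`, `State`, and the `transcribe` callback are hypothetical, and audio chunks are stood in for by plain strings so the example runs without a microphone.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    RECORDING = auto()


class PushToTalk:
    """Hypothetical hold-to-talk controller: record while the key is held."""

    def __init__(self, transcribe):
        self.state = State.IDLE
        self.transcribe = transcribe  # callback: list of chunks -> text
        self.chunks = []
        self.prompt = ""

    def key_down(self):
        # Holding the key starts a fresh recording.
        if self.state is State.IDLE:
            self.state = State.RECORDING
            self.chunks = []

    def audio(self, chunk):
        # Audio is only captured while the key is held.
        if self.state is State.RECORDING:
            self.chunks.append(chunk)

    def key_up(self):
        # Releasing the key transcribes the recording into the prompt.
        if self.state is State.RECORDING:
            self.state = State.IDLE
            text = self.transcribe(self.chunks)
            self.prompt = f"{self.prompt} {text}".strip()

    def type_text(self, text):
        # Typed edits go into the same prompt as spoken text.
        self.prompt = f"{self.prompt} {text}".strip()


# Stand-in transcriber: joins the string "chunks" with spaces.
ptt = PushToTalk(lambda chunks: " ".join(chunks))
ptt.audio("dropped")  # ignored: the key is not held yet
ptt.key_down()
ptt.audio("run the tests in")
ptt.key_up()
ptt.type_text("tests/auth")  # hypothetical path typed by hand
print(ptt.prompt)
# run the tests in tests/auth
```

The point of the sketch is that spoken and typed input both feed one prompt, so nothing is sent until you decide the request is ready.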

3. Execute

Once your request is ready:

- Claude Code processes it like any normal instruction.

- It can explain code, modify files, or execute tasks depending on permissions.

Why this matters

1. Communicate intent much faster

Speech is often faster than typing, especially for complex requests.

Voice works best for:

- multi-step instructions

- exploratory prompts

- long explanations of a problem

Example:

Instead of typing:

Trace the error path for this authentication bug and suggest the minimal safe fix

You can simply say it.

Speaking removes the friction of composing a perfectly structured prompt.

2. High-level intent is easier to express out loud

Voice naturally encourages higher-level thinking.

When speaking, people tend to include:

- goals

- tradeoffs

- uncertainties

- constraints

Like:

- “I think this bug is somewhere in the auth middleware…”

- “We probably shouldn’t change the public API…”

- “Try the smallest fix first.”

That additional context helps the AI understand what you actually want, not just what you typed.

3. The hybrid workflow is the real power move

The biggest advantage isn’t voice alone.

It’s the voice + keyboard workflow.

Benefits include:

- Keep your eyes on the code while speaking instructions

- Avoid stopping to craft perfectly typed prompts

- Maintain flow while navigating files and debugging

This reduces micro-context switching, which is one of the biggest productivity drains in development workflows.

4. Stay productive for much longer

Long coding sessions can be physically demanding.

Voice mode helps by reducing:

- repetitive typing

- hand strain

- keyboard fatigue

Possible ergonomic benefits:

- alternate between typing and speaking

- maintain better posture during long sessions

- sustain focus for longer periods

Voice won’t replace keyboards, but it can take some of the load off your hands.

5. Spoken language often gives Claude better context

People naturally provide more context when speaking.

Compared to typing, spoken instructions often include:

- more explanation

- clearer reasoning

- additional situational details

For an AI coding assistant, this extra context can improve:

- understanding of the problem

- reasoning about potential fixes

- the quality of generated solutions

In other words, speaking can actually improve the clarity of your request.

Claude Code’s new Voice Mode is here to reduce the distance between thinking and delegating work.

This isn’t just a new input method.

It’s a more natural way to direct AI-powered development workflows—one that keeps you focused on the code while communicating intent at the speed of thought.