DeepSeek really destroyed OpenAI and ChatGPT without even trying

Big Tech truly got the shock of their lives from China.

They really thought they were light years ahead of everyone else just because they had all the money in the world.

But DeepSeek just taught them a lesson they'll never forget.

After these tech giants blindly poured billions and billions of dollars into their models in a desperate attempt to stay ahead in the AI race, DeepSeek spent just a tiny, tiny fraction of that (reportedly less than $6 million) to train a model that beats major models like GPT-4 and Gemini on many benchmarks.

And far, far cheaper to run too. China 😅

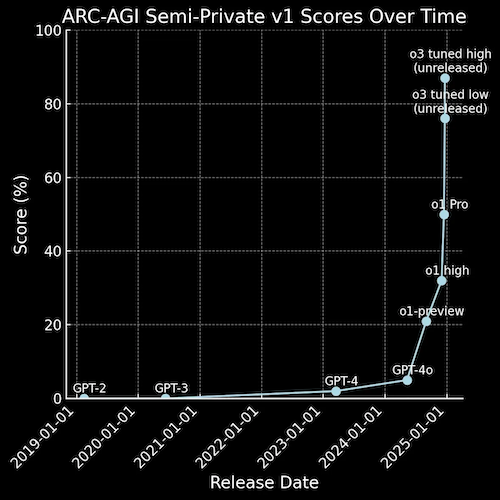

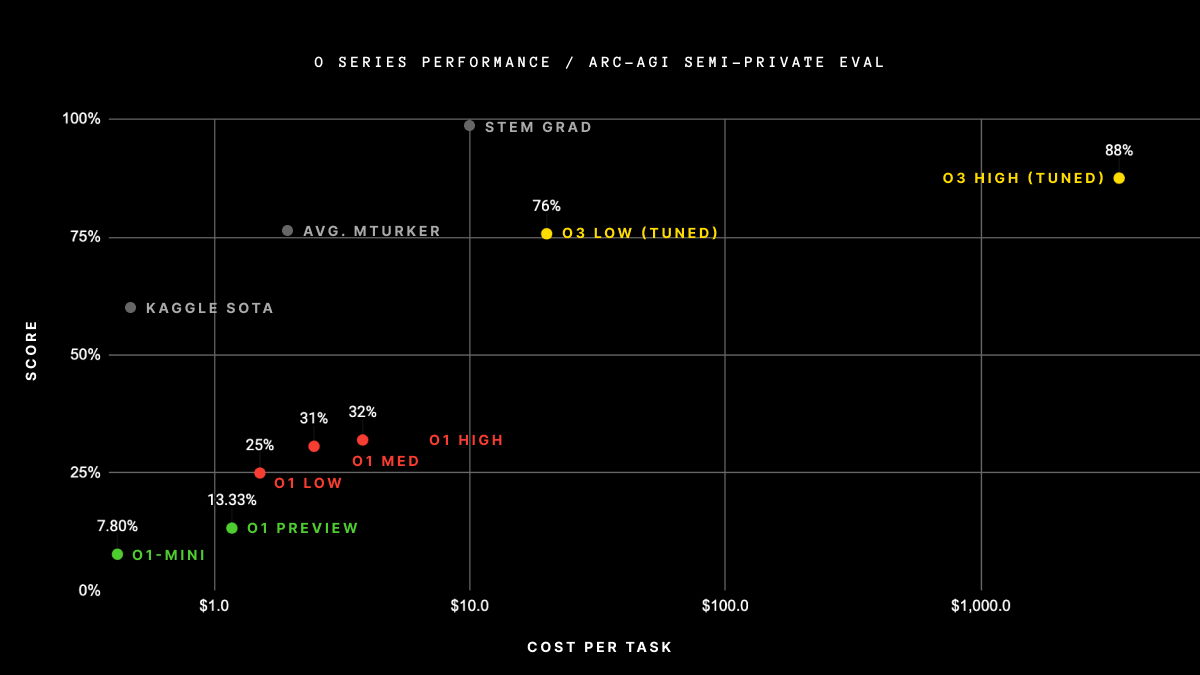

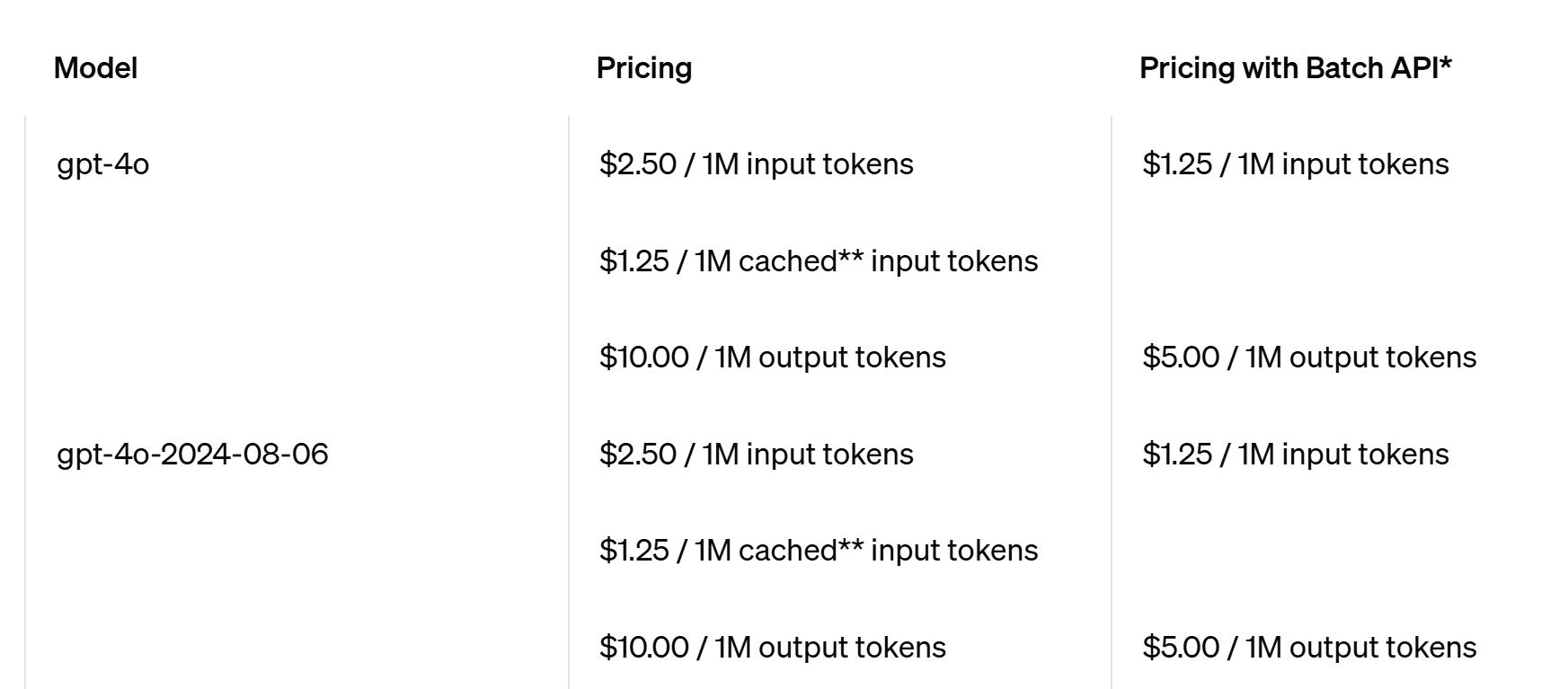

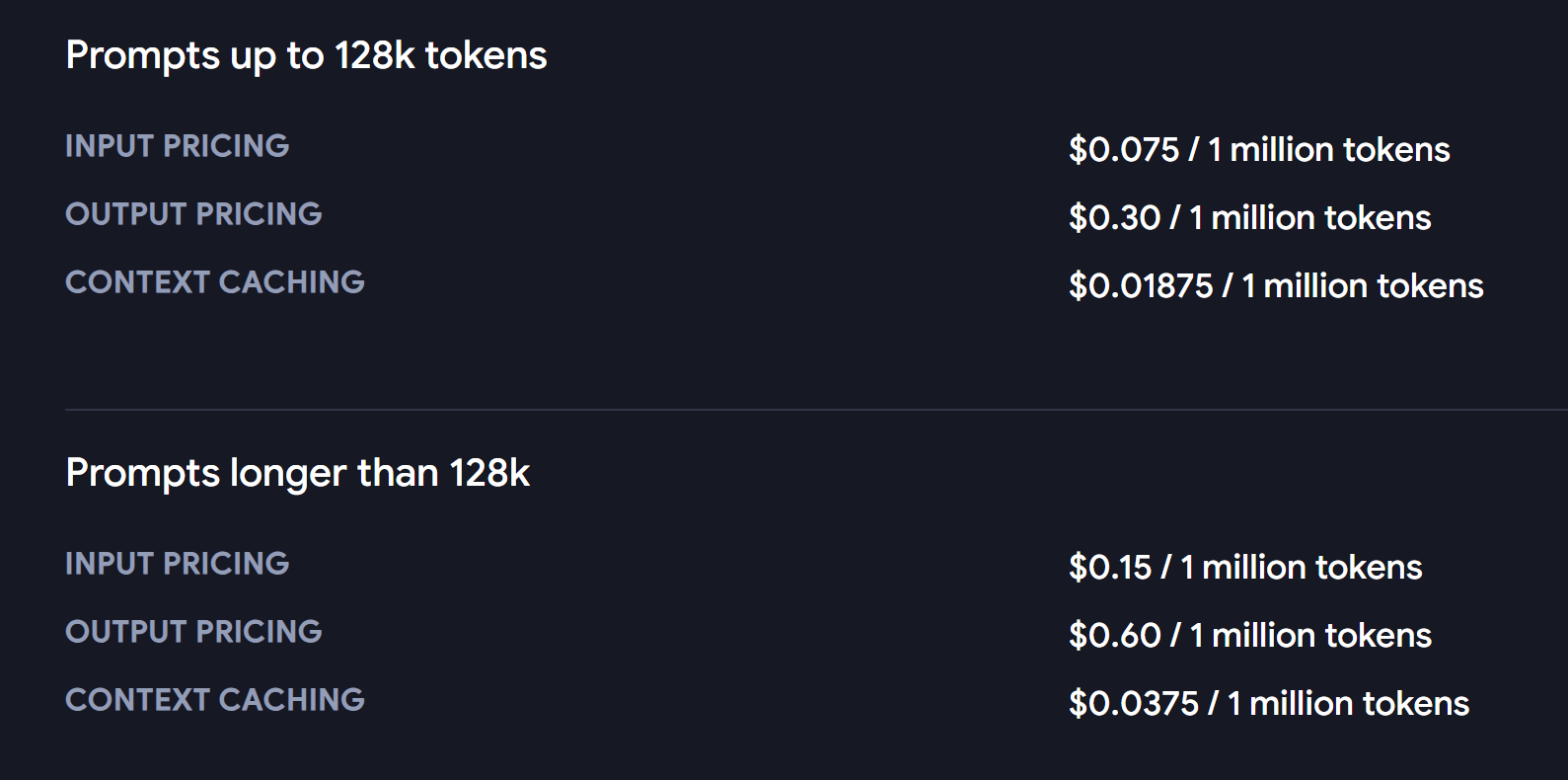

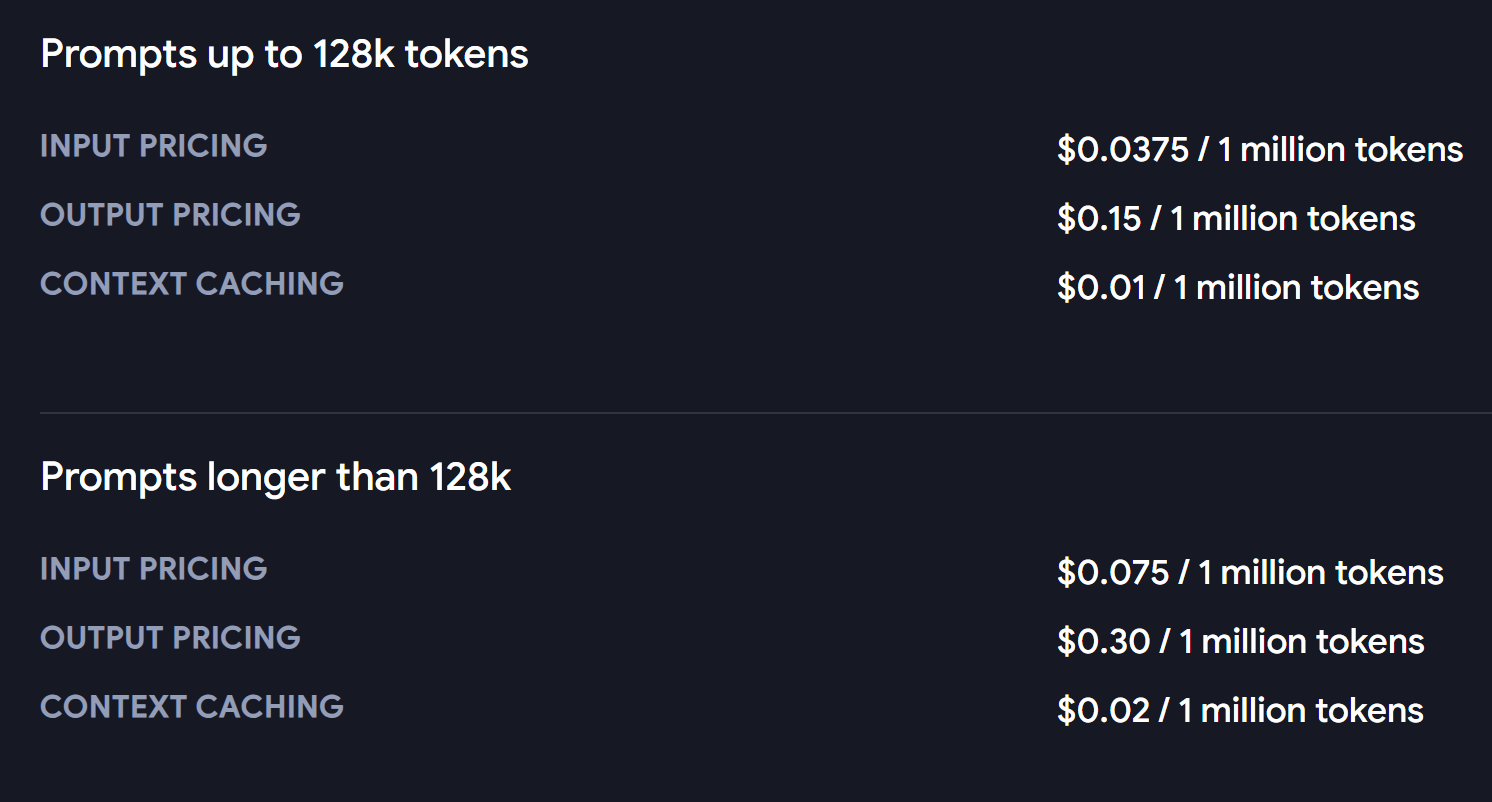

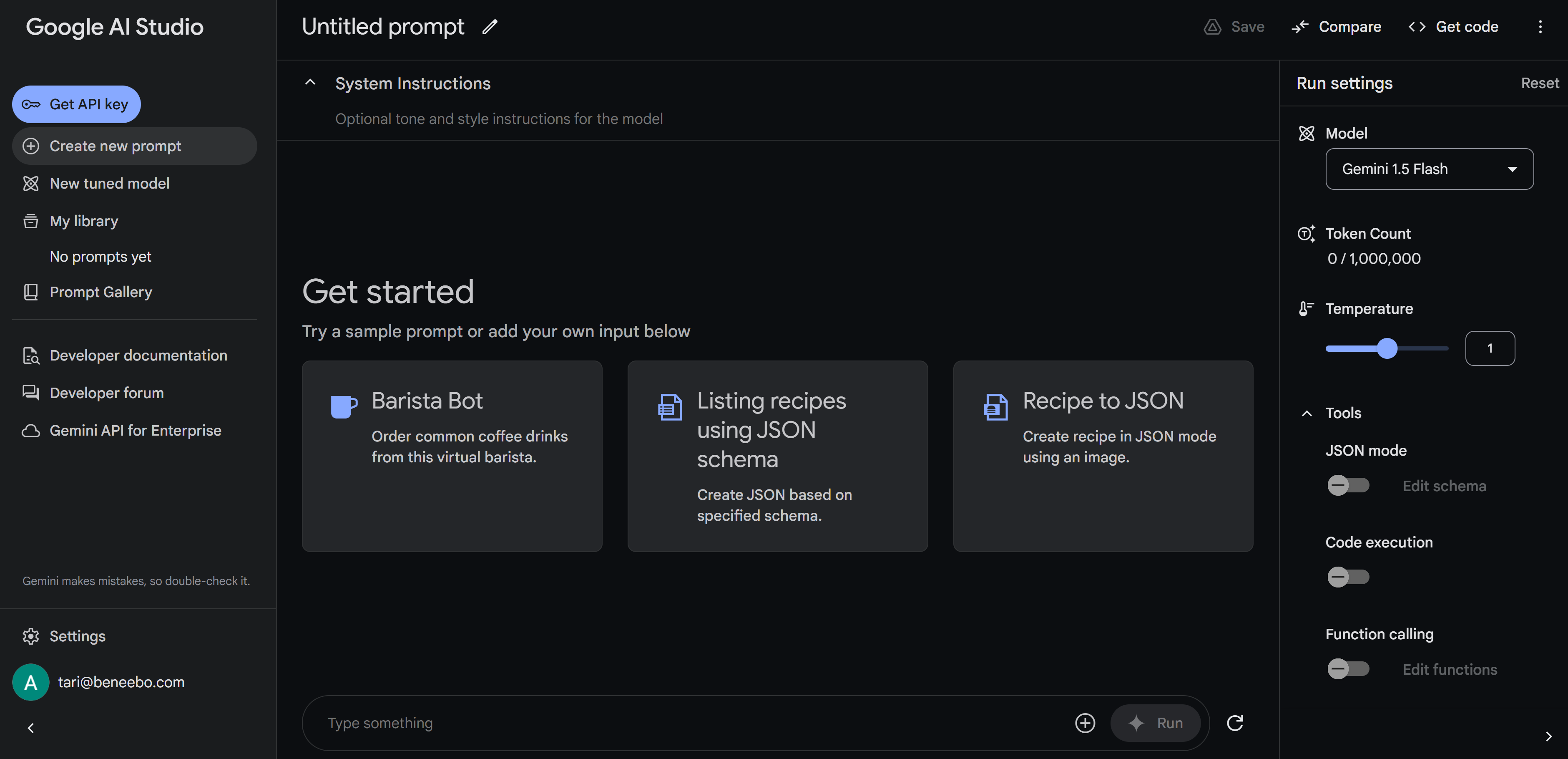

You can easily see how DeepSeek is by far the most cost-efficient of all the major models.

And it's not just relatively efficient: it's also more capable in absolute terms.

Only o1 comes close, and you can see just how ridiculously expensive it is. Just look at the crazy price gap between it and DeepSeek, and all the rest.

DeepSeek is at least 20 times cheaper than o1, and yet it roughly matches it on most benchmarks.

Well well well.

So all those heavily funded genius computer scientists working on all those models got thoroughly outclassed by a tiny side project from like 50 random guys in China.

And then the final nail in the coffin: it's open-source and free to use.

These tech giants tried so hard to keep the inner workings of their fancy models hidden from the public. There were so many trillions to be made from being the first and only one to achieve and control the holy grail of superintelligence, right?

Lol remember when OpenAI used to actually be open…

But now this one-year-old startup came out of absolutely nowhere and crashed the entire for-profit party.

Not just open-source, but under the MIT license, meaning you can do basically whatever you want with it.

The model weights and the technical report are all out in the open for everybody and their dog to see, test, and run.

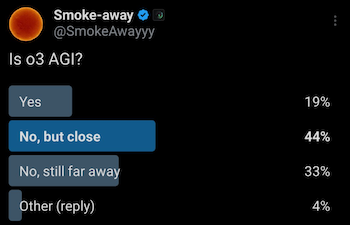

Many users have already been talking about how much more creative and clever DeepSeek feels compared to ChatGPT.

With all of this it wasn’t surprising to see their official apps rocket to the top of the charts on both app stores.

It’s funny how all this comes just a few days after the announcement of the so-called Stargate Project, which is set to cost as much as $500 billion.

These huge US tech companies have been swimming in so much cash that they've gotten lazy.

Their main focus seemed to be just pumping in as much cash as possible to fatten up their models — and then hoping that the models just keep improving from getting bigger and bigger.

GPT-3.5: 175 billion parameters

GPT-4: reportedly 1.8 trillion parameters

GPT-4 was largely better, but was it anywhere close to TEN times better? Of course not. It even seemed worse at some tasks.

Instead of trying to improve how they train the models and looking for ways to improve on the transformer LLM architecture, they just kept doubling down on model size and raw computing power.

Gobbling up chips from Nvidia and sending its stock price to the moon ($600 billion wiped out in the last few days btw).

Trying to build massive NUCLEAR-powered data centers (really?)

Now DeepSeek just showed them how much better a model can be with far fewer resources and superior training methods.

It’s a wake-up call that has spread panic across the US stock market.

The disruption will hopefully send more researchers back to the drawing board to focus on what matters, leading to more solid AI progress across the board.