o3! Wow! AGI has finally been achieved now?! No way!!

Lol.

So when is someone finally going to tell us what “AGI” means?

Or are we going to keep moving the goalposts to keep our heads in the sand about the inevitable?

Okay, so sure, it wasn’t AGI when ChatGPT first shocked everyone, including OpenAI, in 2022 with dynamic responses on just about any topic. I remember many were playing dumb back then and calling it glorified autocomplete.

It wasn’t AGI when GPT-4 passed the bar exam and aced the SAT.

It wasn’t AGI when GPT smashed the Turing test. Never mind that what counts as passing a Turing test has kept changing for years as AI gets more and more advanced: another way of moving the goalposts.

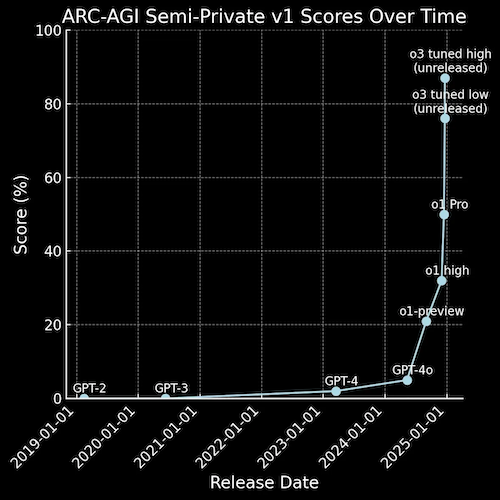

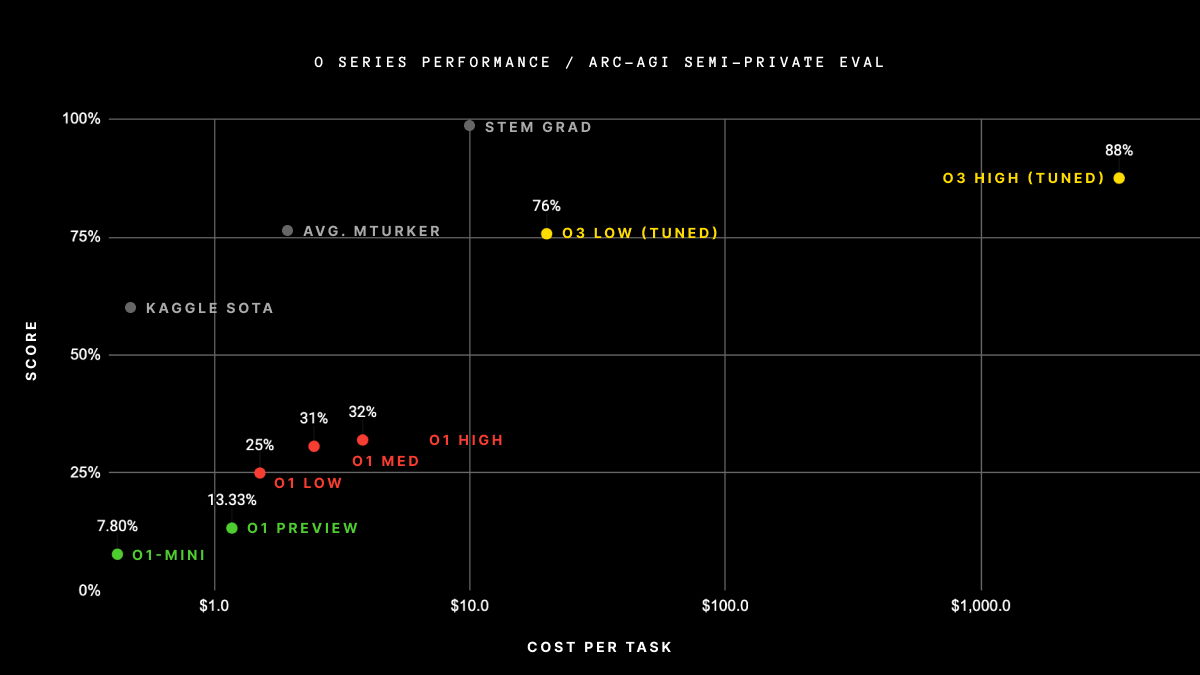

Now we have o3 scoring 87.5% on the ARC-AGI benchmark… are we there yet?

Damn, look at how much that stuff costs tho. $2,500 per task on the high end? But of course it will eventually come down — right? Right?

No way in hell they’re going to give this away for free. Maybe we can expect a new $2,000 plan soon.

But is it “AGI”?

How good does AI have to get before you say it’s “general”?

o3 beats PhD experts on graduate-level science tests, scores 96.7% on AIME 2024, one of the toughest math exams in the world, and beats almost every single competitive coder on Codeforces…

ChatGPT could already do therapy, write poems and song lyrics you could never dream of, generate personalized workout plans, explain weird French translations…

But oh no, it couldn’t possibly be “general” intelligence, it’s just glorified autocomplete.

Or no, it needs to be an omnipotent god before we can call it AGI.

Why do we even treat AGI in such a binary way? Either it is or it isn’t? A one-time, final destination after which all our jobs get wiped out instantly and we’re doomed?

The job losses are already here, happening slowly but surely as AGI-lity advances.

You say that to be AGI it needs to be able to learn and reason, but can’t GPT already do that? And what do you really mean by “learn” and “reason”?

When you upload a PDF to ChatGPT that it’s never seen before and it answers every single question you ask far faster than if you slogged away reading it yourself, it didn’t “learn”? But you would have “learned”, right? Or was it still glorified autocomplete? But not you, right? You have “real” intelligence.

There’s a reason why tools like AutoGPT and BabyAGI were such big deals. They were among the first AI agents to really take off.

They could create a step-by-step plan to achieve any goal using the tools at their disposal, while checking that their actions stayed in line with the plan.

And when you think of it, this is basically what we humans do almost every single moment of our lives, even if we don’t realize it.

Life is all goals, conscious or subconscious, short-term or long-term: eat, tell a joke, get rich, write an article, run for your life, cast your vote, kiss…

We break down the goals into smaller sub-goals and use the tools we have to achieve them — our legs, our speech, our devices, our money, and so much more.
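For the curious, here’s a toy sketch of that plan, act, check loop in Python. It’s not how AutoGPT or BabyAGI are actually implemented: the planner is hard-coded where a real agent would ask an LLM, and the tool names (`search`, `write`) and helpers (`plan`, `on_track`, `run_agent`) are hypothetical stand-ins made up for illustration.

```python
from __future__ import annotations

from collections import deque
from typing import Callable

# Hypothetical tools: stand-ins for web search, file writing, etc.
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda query: f"notes on {query}",
    "write": lambda topic: f"draft of {topic}",
}


def plan(goal: str) -> list[tuple[str, str]]:
    """Break a goal into (tool, input) sub-goals.

    Assumption: a real agent would ask an LLM to write this plan;
    here it's hard-coded to keep the sketch self-contained.
    """
    return [("search", goal), ("write", goal)]


def on_track(goal: str, result: str) -> bool:
    """Crude self-check: does this step's output still mention the goal?"""
    return goal in result


def run_agent(goal: str, max_steps: int = 10) -> list[str]:
    """Work through the sub-goals one at a time, checking each result."""
    tasks = deque(plan(goal))
    results: list[str] = []
    steps = 0
    while tasks and steps < max_steps:
        tool, arg = tasks.popleft()
        result = TOOLS[tool](arg)
        steps += 1
        if on_track(goal, result):
            results.append(result)
        else:
            # Off plan: throw away the remaining steps and re-plan.
            # max_steps stops this from looping forever.
            tasks = deque(plan(goal))
    return results


if __name__ == "__main__":
    print(run_agent("write an article"))
```

Calling `run_agent("write an article")` returns the two step outputs in order. Swap the lambdas for real tools and the planner for a model call and you get roughly the shape of those early agents.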

These tools weren’t perfect, but they still showed promising glimpses of success in many demos that went viral across the internet.

They showed us what’s possible with AI agents, and now we have everyone rushing to build the most advanced agent.

These agents will only continue to get better and better at complex problem solving and reasoning. After a certain point the only thing limiting them will be the tools you connect them to.

We’re already seeing the progress: just look at Google’s new Project Mariner agent in action.

Coding tools like Copilot and the new agentic Windsurf IDE are already making software dev easier than ever before.

o3-powered agents will be even more powerful than those built on previous models.

The fact is, whether you call these tools AGI or not, they’re already doing things we never dreamed they would be able to do.

“Creative” jobs like writing, visual art, and UI design are already falling to AI. And now video generators are upon us, and they will only improve rapidly as we enter 2025.

The AI takeover is happening and it’s not stopping, whether they meet your definition of AGI or not.