OpenAI just launched a new model (“o1”) and it’s HUGE.

Before now there’d been a lot of mystery about an upcoming “Strawberry” model, subtly hinted at by Sam Altman in a cryptic tweet last month.

This isn’t just another version of GPT. It’s something completely different.

OpenAI designed it to excel in tasks that require deeper reasoning—things like solving multi-step problems, writing intricate code (bad news for devs?), and even handling advanced math.

It doesn’t just predict the next word. It’s been trained to “think”.

But didn’t AI models already do this? Not quite.

o1 is different because it’s been trained using reinforcement learning, a training approach that lets the model learn from its mistakes and get better over time at reasoning through complex tasks.

How good is it?

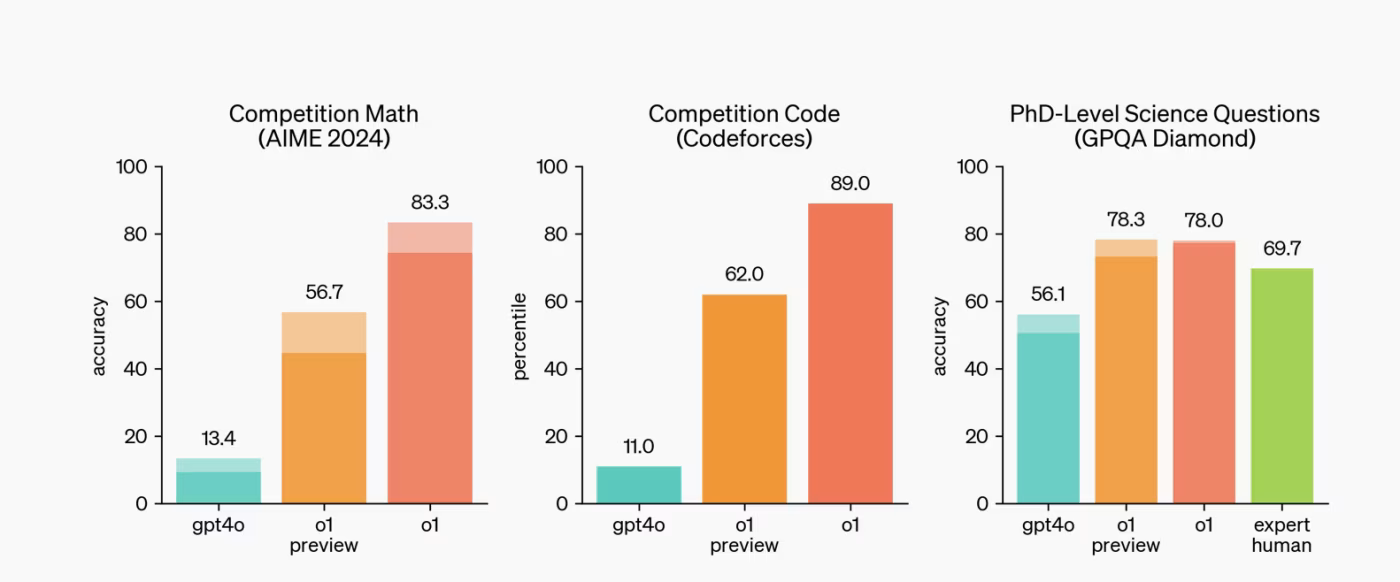

OpenAI tested o1 on a qualifying exam for the International Mathematical Olympiad and the results were jaw-dropping: o1 correctly solved 83% of the problems.

And how many was GPT-4o, OpenAI’s previous flagship, able to solve?

13%.

Literally 13. Imagine how badly GPT-3 would have done, and that’s the model that wowed us all in 2022. This isn’t just an improvement; it’s a massive leap.

It’s also massive enough to put o1 on par with PhD students on physics and chemistry benchmark tests. Incredible.

The price tag

Here’s where things get a bit tricky.

If you’re looking to use o1 through the API, be ready to pay.

The cost is $15 per million input tokens and $60 per million output tokens. Compare that to GPT-4o’s $5 and $15, and you can see the difference.

For large-scale apps with tons of users, this adds up quickly.
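To put rough numbers on that, here’s a quick Python sketch using the per-million-token prices quoted above. The workload (100,000 requests a month, each with 1,000 input tokens and 500 output tokens) is a made-up assumption purely for illustration, and the names `PRICES` and `request_cost` are mine, not anything from OpenAI.

```python
# USD per million tokens, as quoted in this article.
# "baseline" is the cheaper $5 / $15 comparison model mentioned above.
PRICES = {
    "o1": {"input": 15.00, "output": 60.00},
    "baseline": {"input": 5.00, "output": 15.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of a single request at the quoted rates."""
    rates = PRICES[model]
    total = input_tokens * rates["input"] + output_tokens * rates["output"]
    return total / 1_000_000


# Hypothetical workload (an assumption, not real usage data).
REQUESTS_PER_MONTH = 100_000
INPUT_TOKENS = 1_000
OUTPUT_TOKENS = 500

for model in PRICES:
    monthly = REQUESTS_PER_MONTH * request_cost(model, INPUT_TOKENS, OUTPUT_TOKENS)
    print(f"{model}: ${monthly:,.2f} per month")
```

Under those assumed numbers, o1 comes out at roughly $4,500 a month against $1,250 for the cheaper model, about 3.6 times as much. Keep in mind that o1’s hidden reasoning tokens are billed as output tokens, so its real output counts will likely run higher than a model that answers directly.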

Is it worth it? Well, that depends.

o1 is slower when handling simple tasks. So if you just want to know the capital of Spain, it’s overkill. There’s no thinking there, just basic memory retrieval.

GPT-4 is still more efficient for everyday queries with moderate complexity.

How does it work?

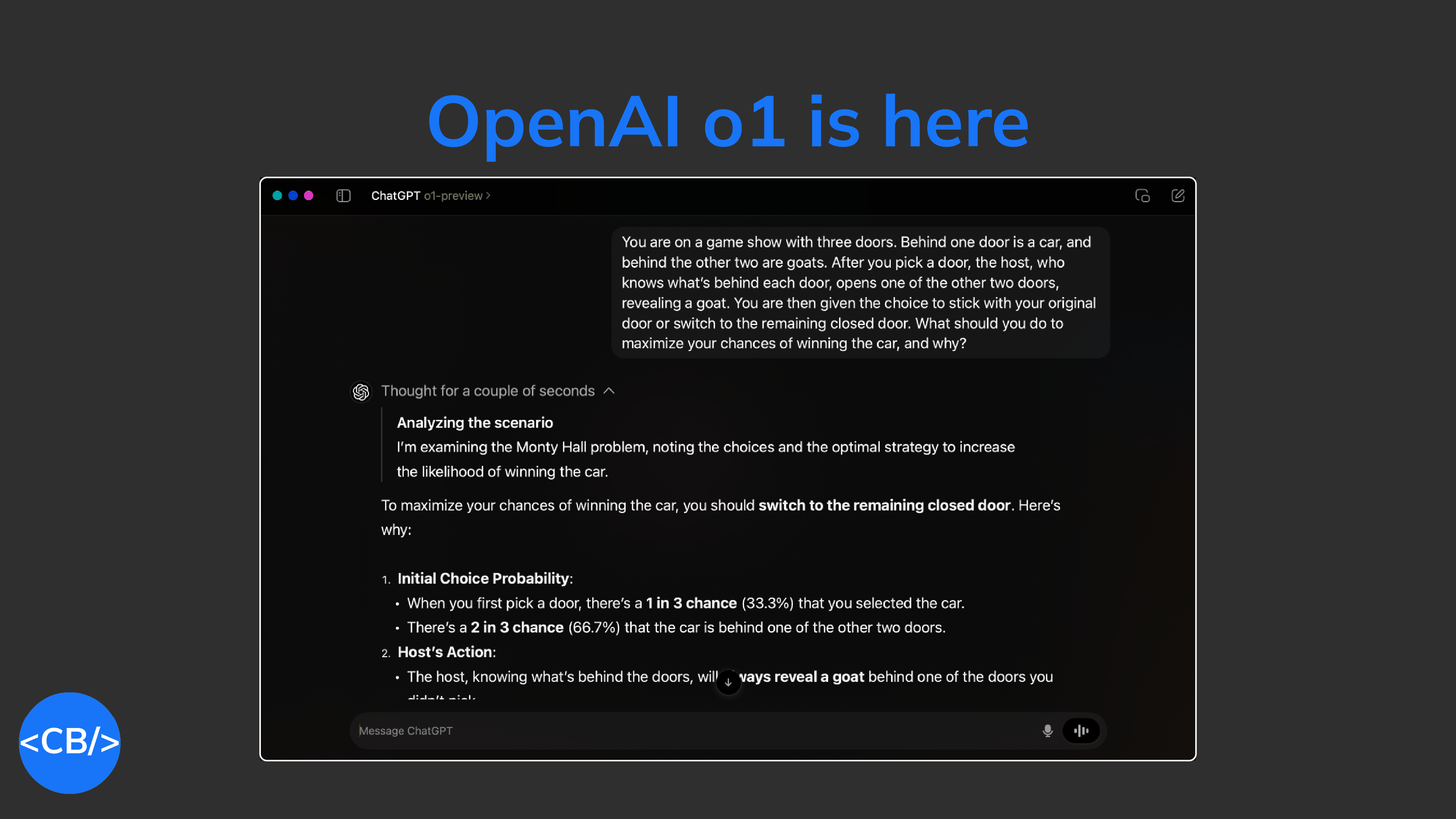

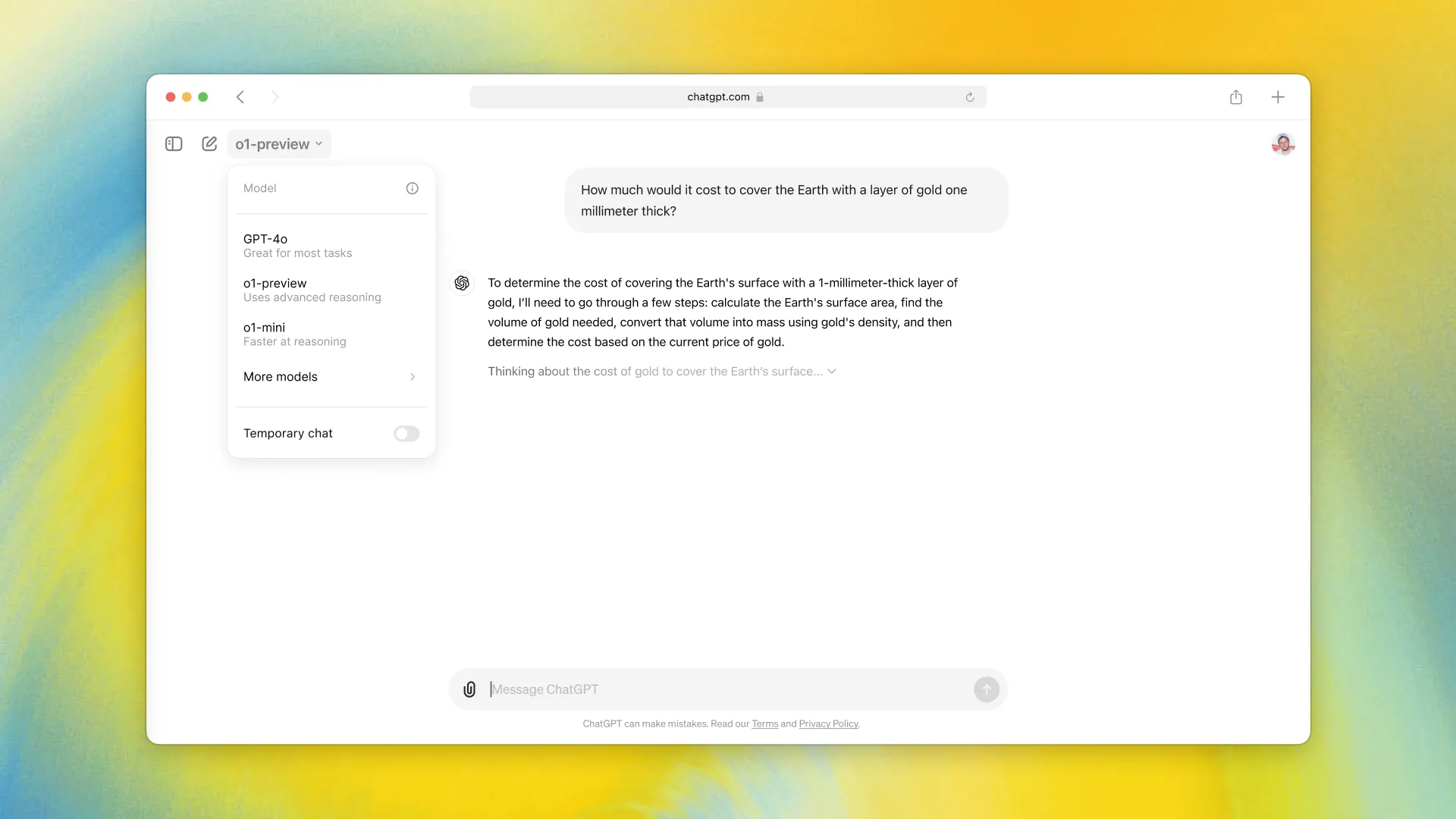

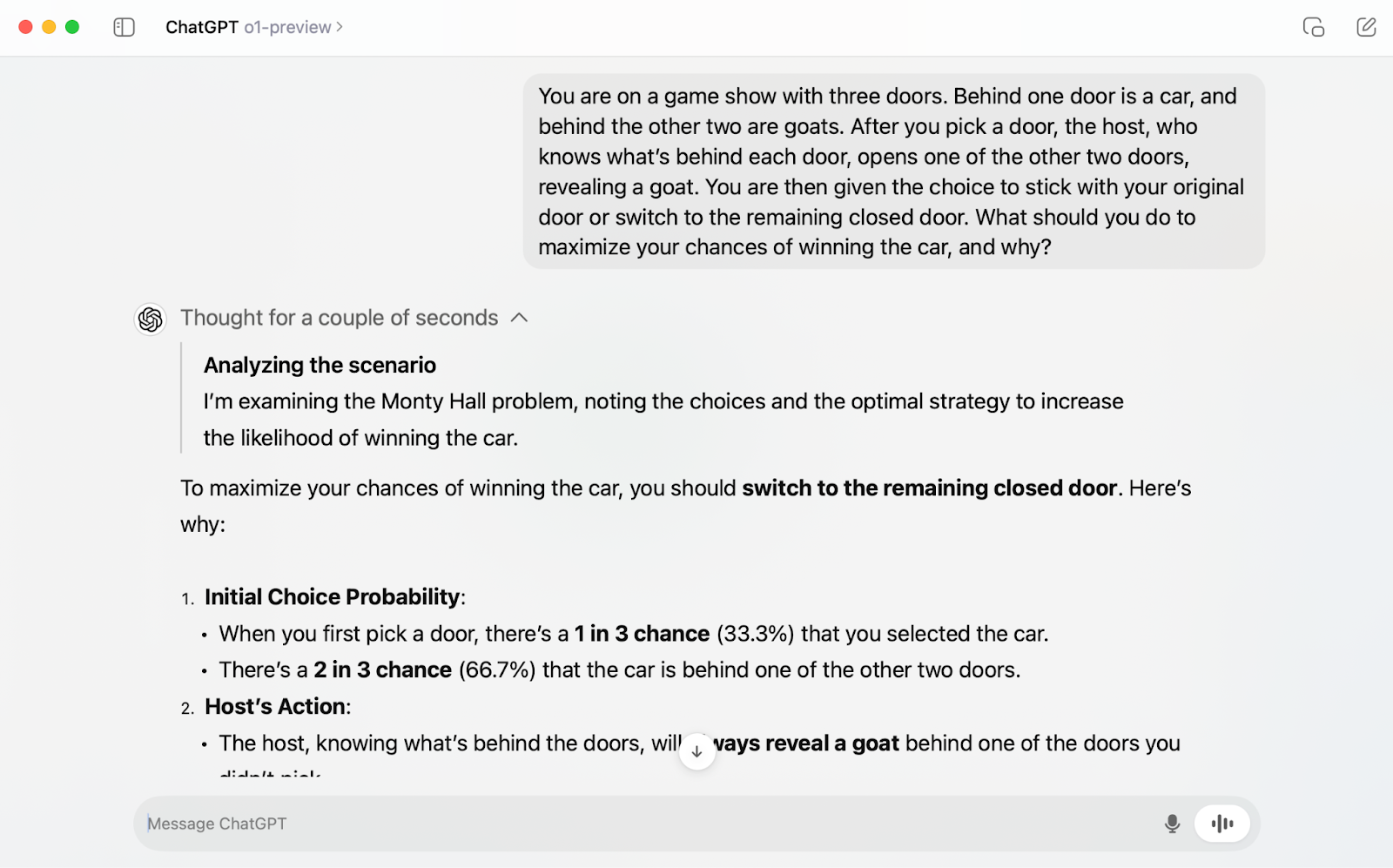

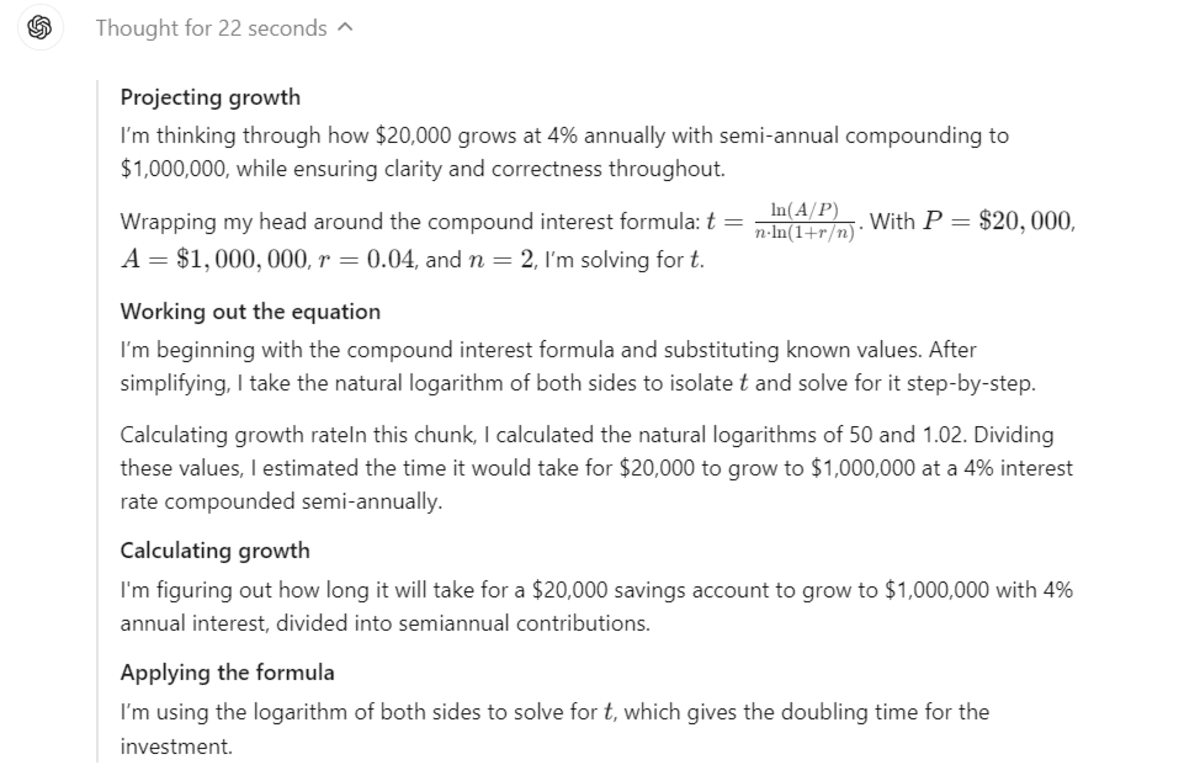

What really makes o1 stand out is how it thinks through problems.

Instead of just spitting out answers, it explains its reasoning step by step. It’s like watching someone carefully work through a tough math problem, showing their process as they go.

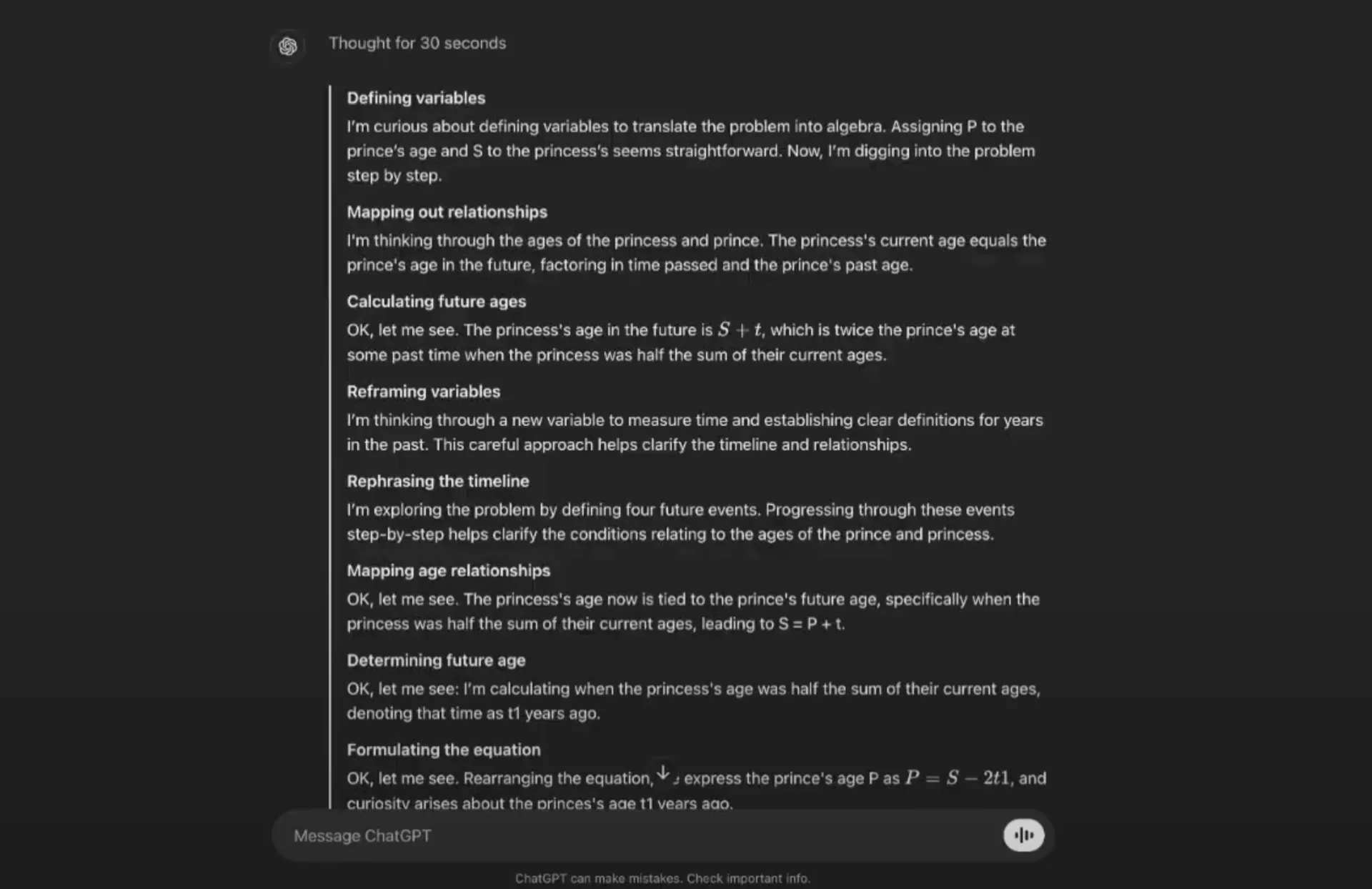

OpenAI ran a demo where o1 solved a logic puzzle involving the ages of a prince and princess.

The model broke down the puzzle in real time, walking through the logic in a way that felt almost human. It didn’t just give the answer—it explained how it got there. This “chain of thought” approach gives o1 a serious edge when tackling complex challenges.

It’s kind of like an LLM agent, breaking down goals into sub-goals and possibly doing some internal multi-prompting.

Or like AutoGPT (lol) — you know that overhyped stuff no one talks about anymore.

But it’s not perfect. When you ask it simple questions, it can overthink things. For instance, ask it where oak trees grow in America, and you might get an entire essay. o1 is built for depth, not speed.

And of course it can get the chain of thought wrong — like in this popular code cracking problem.

Where o1 shines

o1 opens up new possibilities in fields like science, coding, and advanced problem-solving.

OpenAI mentioned it’s especially useful for scientific research—like generating formulas for quantum physics or solving tricky coding challenges.

In coding competitions like Codeforces, o1 performed in the 89th percentile. That’s impressive, given these contests aren’t just about writing code—they demand serious problem-solving skills.

Even in everyday tasks, o1 proves its value when complexity is high.

I saw this interesting instance where someone asked o1 to help plan a Thanksgiving dinner for 11 people with only two ovens. The model didn’t just give a recipe; it created a detailed cooking schedule, even suggesting renting an extra oven to make the day easier. That’s the kind of thinking it brings to the table.

Worth the hype?

o1 won’t replace GPT-4 for most day-to-day tasks. It’s slower, pricier, and can be overkill for simple queries. But when you’ve got a problem that needs serious brainpower—whether it’s writing complex code or solving advanced math problems—o1 is unmatched.

It probably won’t be your go-to for everything, but it’s a powerful tool for those moments when you need something that thinks, not just computes.

So, what do you think? Is o1 something you’ll be using, or are you sticking with GPT-4 for now?