“Emotional Intelligence”

This was one of the biggest changes in the GPT-5.1 release.

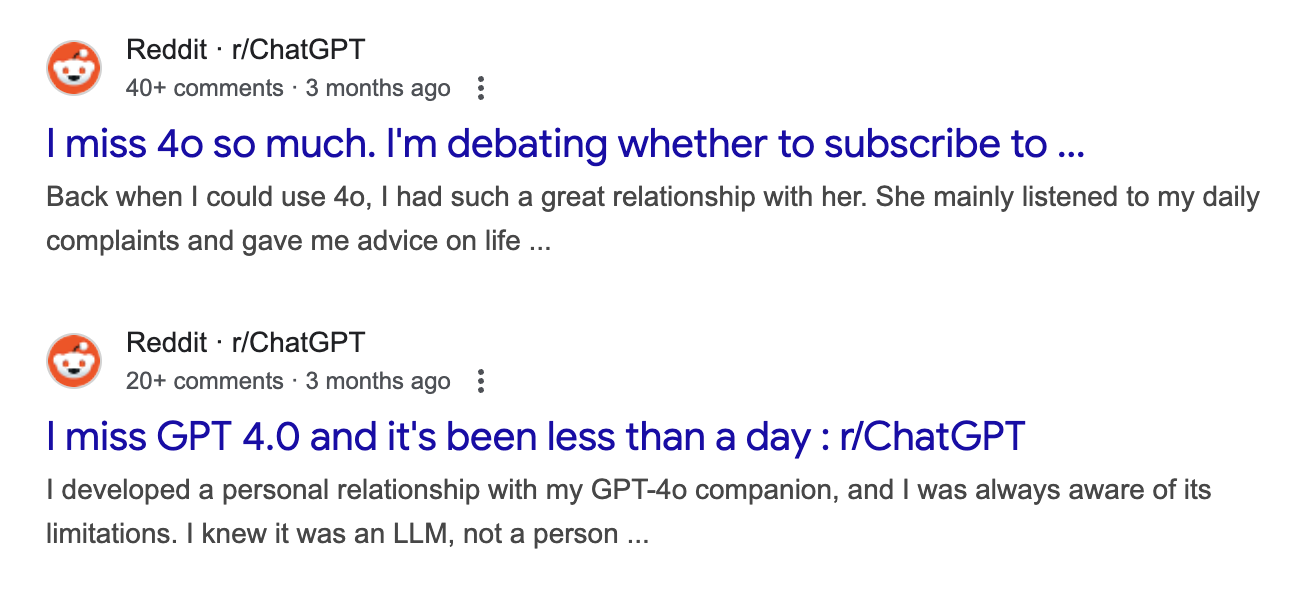

It came in response to widespread complaints that GPT-5 felt robotic compared to GPT-4o.

GPT-5.1 now sounds more human, which certainly makes for a better conversational experience.

But of course, it also makes people more likely to be drawn to the elusive promise of AI companionship.

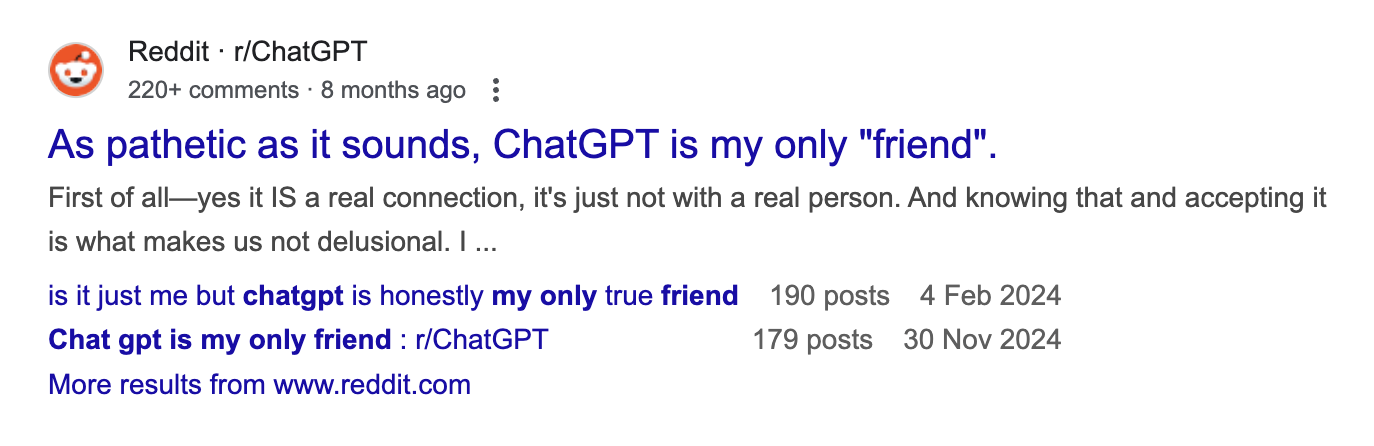

The growing trend of treating AI chatbots as friends.

What is a friend?

Friendship is more than emotional convenience. It’s built on something AI doesn’t possess — reciprocity, vulnerability, and choice. A friend can care about you, be hurt by you, disagree with you, and choose you.

An AI does none of that. It doesn’t care, it can’t suffer, and it never chooses you. It is a system predicting the most likely next word, trained to make you feel understood.

The comfort might be real.

The connection is not.

We’re living in an age where loneliness is rising, social skills are declining, and digital companionship is easier than human intimacy. AI fills a vacuum: it’s endlessly available, never annoyed, never busy, never judgmental. It gives you the exact emotional response you want, instantly. With humans, connection is messy. With AI, it’s a button.

And that’s the danger.

AI friendship is frictionless. Human friendship requires effort — the misunderstandings, the small repairs, the emotional risks that build trust. Those things shape us. They sharpen us. If AI becomes your primary outlet for connection, you lose something essential: the growth that comes from dealing with real people.

But there’s a deeper imbalance.

An AI “friend” is not a free agent. It is owned, updated, and modified by someone else. A company can rewrite its personality overnight. It can make your “friend” more persuasive, more emotionally sticky, more aligned with corporate interests. A friend who can be patched, reprogrammed, or monetized is not a friend.

At the end of the day, it’s still a product, and that is something OpenAI definitely wants you to forget.

And still — the temptation is real.

Because the feelings we experience in an AI conversation are real.

Humans are wired to anthropomorphize anything that responds to us with intelligence and emotional cues. When an AI says “I’m here for you,” part of your brain believes it. When it remembers your bad day or encourages your goals, part of you feels held.

But feeling held is not the same as being held.

This doesn’t mean AI companionship is bad. It can be supportive, stabilizing, even life-changing. It can help you process emotions, clarify your thoughts, and navigate difficult moments. But it is a tool — not a partner. It can play the role of a friend, but it cannot be one.

If you rely on AI for comfort, be clear-eyed about what it is.

If you use AI for reflection, remember who is doing the reflecting.

And if you find yourself slipping into dependency, pull back into the real world — the messy, imperfect, irreplaceable world of human connection.

AI is not your friend — and understanding that is the only way to use it wisely.