His AI bot submitted thousands of job applications automatically while he slept, and he woke up to an interview request in the morning.

Over the course of one month he got dozens of job interviews: more than 50.

And of course he’s not the only one — we now have several services out there that can do this.

But we can build it ourselves and start getting interviews on autopilot.

Looking at this demo already confirms my expectation that the service would be best as a browser extension.

No not best — the only way it can work.

Lol of course no way LinkedIn’s gonna let you get all that juicy job data with a public API.

So scraping and web automation are the only way.

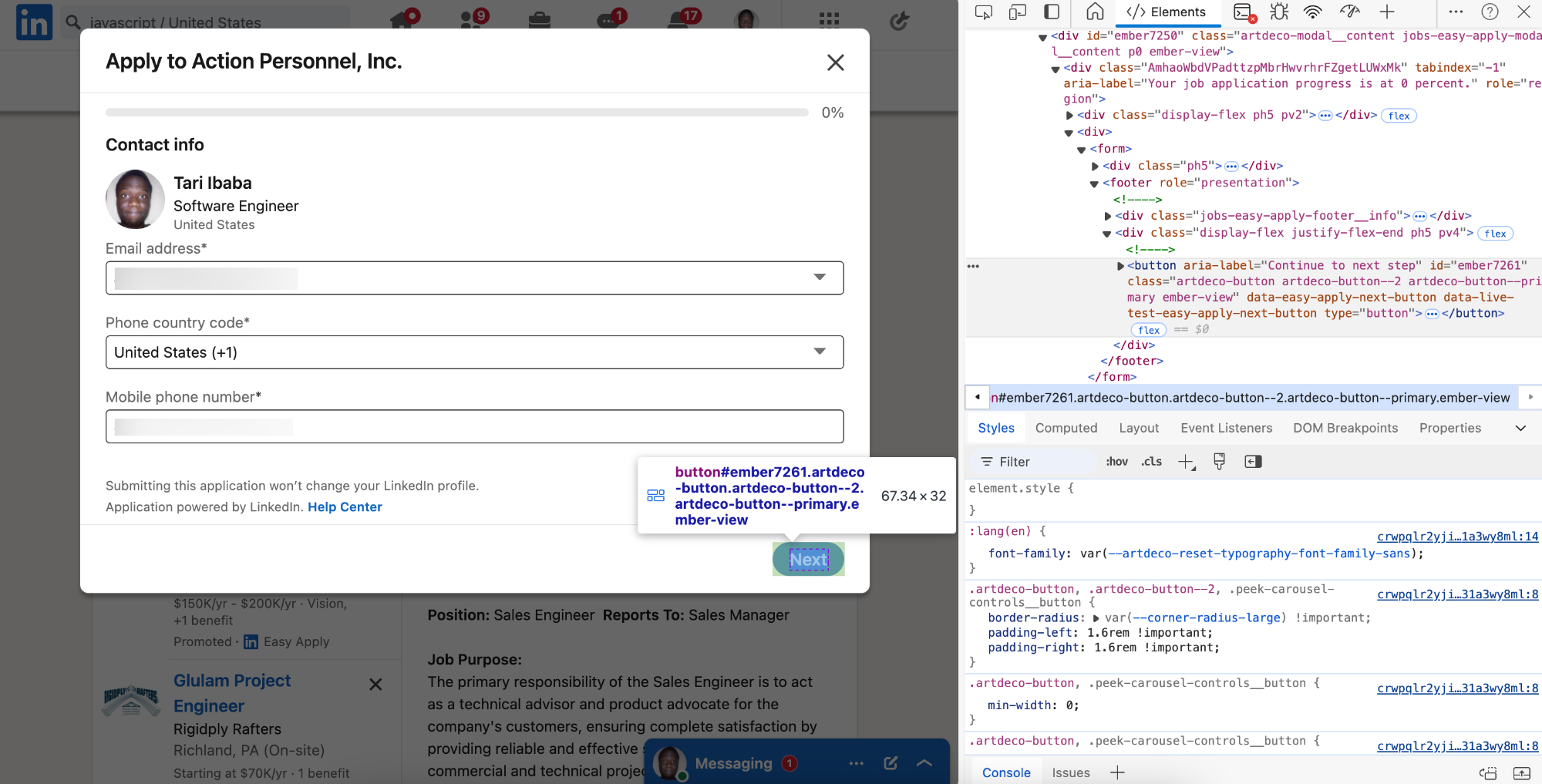

So now let's say we want to set it up for LinkedIn like it is here.

Of course we can't just go ahead and start the automation; we need some important input first.

Looking at the inputs and their data types:

Skills: a list of text, so a string array/list.

Job location: a string of course, even though there could be a geolocation feature to automate this away.

Number of jobs to apply to: a number, too easy.

Experience level and job type: strings.

If I’m building my own personal bot then I can just use hardcoded variables for all these instead of creating a full-blown UI.
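For a personal bot, the hardcoded inputs could be one plain object plus a quick sanity check. This is only a sketch: the property names, the placeholder values, and the `validateConfig` helper are all my own assumptions, not something taken from the demo.

```javascript
// Hypothetical hardcoded inputs. Every name and value here is a placeholder.
const config = {
  skills: ["product management", "project management"], // string array
  jobLocation: "Remote", // string
  maxApplications: 20, // number of jobs to apply to
  experienceLevel: "Mid-Senior level", // string
  jobType: "Full-time", // string
};

// Returns a list of problems; an empty list means the config looks usable.
function validateConfig(cfg) {
  const problems = [];
  if (!Array.isArray(cfg.skills) || cfg.skills.length === 0) {
    problems.push("skills must be a non-empty array");
  } else if (!cfg.skills.every((skill) => typeof skill === "string")) {
    problems.push("every skill must be a string");
  }
  if (typeof cfg.jobLocation !== "string") {
    problems.push("jobLocation must be a string");
  }
  if (!Number.isInteger(cfg.maxApplications) || cfg.maxApplications <= 0) {
    problems.push("maxApplications must be a positive integer");
  }
  for (const key of ["experienceLevel", "jobType"]) {
    if (typeof cfg[key] !== "string") {
      problems.push(`${key} must be a string`);
    }
  }
  return problems;
}
```

Calling `validateConfig(config)` before starting the run catches a typo in the inputs before the bot burns through a page of listings.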

So once you click the button, it's going to go straight to a LinkedIn URL from the Chrome extension page.

Look at the URL format:

    linkedin.com/jobs/search/?f_AL=true&keywords=product%20management%20&f_JT=F&start=0

So it's using some basic string interpolation to search the LinkedIn Jobs page with one of the skills from the input.

And we can rapidly go through the list with the `start` query parameter, which paginates the items.
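The interpolation could look something like this. It's a sketch under a few assumptions: the `https://www.` prefix and the `buildSearchUrl` name are mine, and the `f_AL` and `f_JT` values are copied as-is from the URL in the demo without me knowing everything else they accept.

```javascript
// Assumption: each results page holds 25 jobs, so `start` moves in steps of 25.
const PAGE_SIZE = 25;

// Builds a search URL for one skill and one zero-based page number.
function buildSearchUrl(skill, page = 0) {
  const query = [
    "f_AL=true", // copied from the demo URL
    `keywords=${encodeURIComponent(skill.trim())}`, // spaces become %20
    "f_JT=F", // copied from the demo URL
    `start=${page * PAGE_SIZE}`,
  ].join("&");
  return `https://www.linkedin.com/jobs/search/?${query}`;
}
```

`encodeURIComponent` matters once a skill contains spaces or characters like `&` or `+`, which would otherwise break the query string.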

And now this is where we start the web scraping.

We’d need to start parsing the list and items to get useful information for our application goals.

Like looking for jobs with few applicants to boost our chances.

You can get a selector for the list first.

    const listEl = document.querySelector('.scaffold-layout__list');

Now we’d need selectors to uniquely identify a particular list item.

So each list item is going to be an `<li>` in the `<ul>` inside the `.scaffold-layout__list` element.

And we can use the data-occludable-job-id attribute as the unique identifier.
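With that attribute, a single job can be targeted directly, and the IDs already handled can be tracked. A rough sketch, where the helper names are my own and only the class and attribute names come from the page inspection above:

```javascript
// Builds a selector that matches exactly one job card by its ID.
function jobItemSelector(jobId) {
  return `.scaffold-layout__list li[data-occludable-job-id="${jobId}"]`;
}

// Collects the job ID of every list item that has one.
// `items` can be any iterable of elements, e.g. listEl.children.
function collectJobIds(items) {
  const ids = [];
  for (const item of items) {
    const id = item.getAttribute("data-occludable-job-id");
    if (id) ids.push(id);
  }
  return ids;
}
```

Keeping the collected IDs in a `Set` is an easy way to avoid applying to the same job twice if the list re-renders.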

Now we can process this li to get info on the job from the list item.

    const listEl = document.querySelector(
      ".scaffold-layout__list ul"
    );

    const listItems = listEl.children;

    for (const item of listItems) {
      // ...
    }

Like to find jobs that have that “Be an early applicant” stuff:

    for (const item of listItems) {
      const isEarlyApplicant = item.textContent.includes(
        "Be an early applicant"
      );
    }

Also crucial to only find jobs with “Easy Apply” that let us apply directly on LinkedIn instead of a custom site, so we can have a consistent UI for automation.

    for (const item of listItems) {
      // ...
      const isEasyApply = item.textContent.includes("Easy Apply");
    }

We can keep querying like this for whatever specific thing we’re looking for.
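Once there are more than a couple of checks, they can be folded into one helper. This is a sketch; `matchesAll` and `REQUIRED_PHRASES` are names I made up, and the whitespace normalization is there on the assumption that `textContent` comes back with line breaks and extra spaces from nested elements.

```javascript
// Phrases every job card must contain before we bother applying.
const REQUIRED_PHRASES = ["Be an early applicant", "Easy Apply"];

// True when `text` contains every phrase, ignoring case and extra whitespace.
function matchesAll(text, phrases) {
  const normalized = text.replace(/\s+/g, " ").toLowerCase();
  return phrases.every((phrase) => normalized.includes(phrase.toLowerCase()));
}
```

In the loop this would be called as `matchesAll(item.textContent, REQUIRED_PHRASES)`.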

And when it matches we click to go ahead with applying.

    for (const item of listItems) {
      // ...
      if (isEarlyApplicant && isEasyApply) {
        item.click();
      }
    }

Find a selector for the Easy Apply button to auto-click:

for (const item of listItems) {

// ...

if (isEarlyApplicant && isEasyApply) {

item.click();

const easyApplyButton = document.querySelector(

"button[data-live-test-job-apply-button]"

);

// delay here with setTimeout or something

easyApplyButton.click();

}

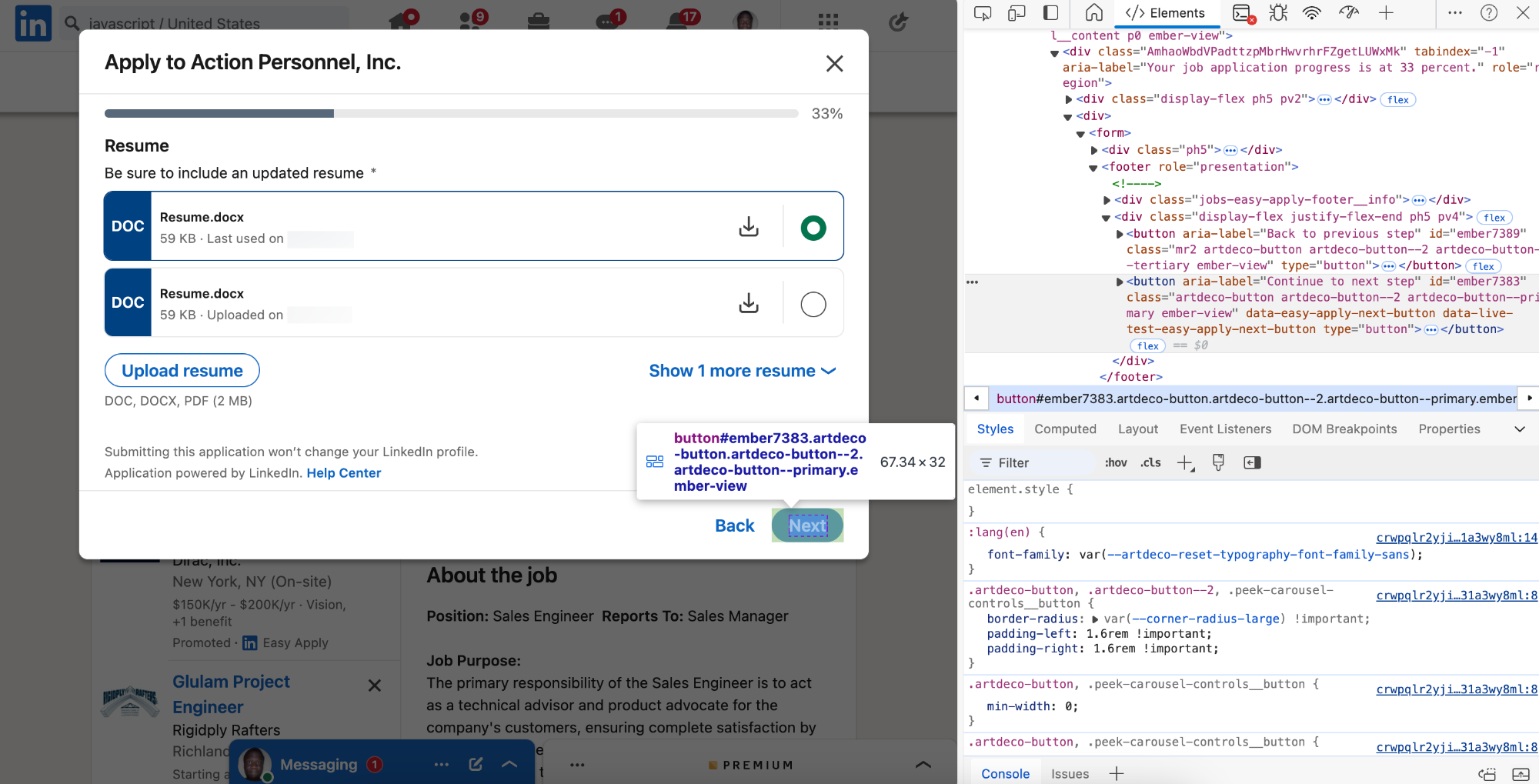

}Do the same for the Next button here:

And then again.
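Since every step of the dialog needs the same "wait for it, then click it" routine, a small polling helper could replace the fixed delays. Just a sketch: `waitFor` and `clickWhenReady` are hypothetical names, and the timeout and interval defaults are arbitrary.

```javascript
function sleep(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Calls `find` repeatedly until it returns something truthy or time runs out.
// Resolves to the found value, or null on timeout.
async function waitFor(find, { timeoutMs = 5000, intervalMs = 200 } = {}) {
  const deadline = Date.now() + timeoutMs;
  while (true) {
    const found = find();
    if (found) return found;
    if (Date.now() >= deadline) return null;
    await sleep(intervalMs);
  }
}

// Waits for `selector` to appear under `root`, then clicks it.
// Resolves to true if something was clicked, false on timeout.
async function clickWhenReady(selector, root = document, options = {}) {
  const element = await waitFor(() => root.querySelector(selector), options);
  element?.click();
  return Boolean(element);
}
```

For the Easy Apply step this would be `await clickWhenReady("button[data-live-test-job-apply-button]")`. The Next button needs its own selector from inspecting the dialog, which I haven't pinned down here.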

Now in this late stage things get a bit more interesting.

How would we automate this?

The questions are not all from a pre-existing list — they could be anything.

And it looks like the service I’ve been showing demos of didn’t even do this step properly. It just put in default values, 0 years of experience for everything. Like really?

Using an LLM would be a great idea: for each field I’ll extract the question and the expected answer format and give this to the LLM.

So that means I’ll also need to provide resume-ish data to the LLM.
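Here's roughly how the resume-ish data and the extracted questions could be combined into one prompt. Everything in it is an assumption for illustration: the shape of the `resume` object, its placeholder values, the `buildPrompt` name, and the prompt wording itself.

```javascript
// Hypothetical resume-ish data. The values are placeholders, not real data.
const resume = {
  yearsOfExperience: { "product management": 4, SQL: 2 },
  workAuthorization: "Authorized to work without sponsorship",
  summary: "Product manager with a B2B SaaS background.",
};

// Builds one prompt that asks for a JSON reply with one answer per question.
function buildPrompt(resumeData, questions) {
  return [
    "You are filling in a job application on behalf of the candidate below.",
    "Answer every question using only the candidate data.",
    'Reply with JSON shaped like {"answers": ["..."]}, one answer per question, in order.',
    'For questions of type "select", the answer must be exactly one of the listed options.',
    "",
    "Candidate data:",
    JSON.stringify(resumeData, null, 2),
    "",
    "Questions:",
    JSON.stringify(questions, null, 2),
  ].join("\n");
}
```

Asking for strict JSON keeps the reply easy to parse and to map back onto the form fields.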

I’ll use our normal UI inspection to get the title.

    function processFreeformFields() {
      const modal = document.querySelector(
        ".jobs-easy-apply-modal"
      );

      const fieldset = modal.querySelector(
        "fieldset[data-test-form-builder-radio-button-form-component]"
      );

      const questionEl = fieldset.querySelector(
        "span[data-test-form-builder-radio-button-form-component__title] span"
      );

      // trim() drops the whitespace that textContent picks up from the markup
      const question = questionEl.textContent.trim();
    }

Then get the answer options for the question:

    function processFreeformFields() {
      // ...
      const options = fieldset.querySelectorAll(
        "div[data-test-text-selectable-option]"
      );

      const labels = [];

      for (const option of options) {
        labels.push(option.textContent.trim());
      }
    }

Now we have all the data for the LLM:

    async function processFreeformFields() {
      // ...
      const questionEl = fieldset.querySelector(
        "span[data-test-form-builder-radio-button-form-component__title] span"
      );

      const question = questionEl.textContent.trim();

      const options = fieldset.querySelectorAll(
        "div[data-test-text-selectable-option]"
      );

      // Trimmed labels, so the LLM's answer can be matched exactly
      const labels = [];

      for (const option of options) {
        labels.push(option.textContent.trim());
      }

      const { answers } = await sendQuestionsToLLM([
        { question, options: labels, type: "select" },
      ]);

      const answer = answers[0];

      // indexOf returns -1 for an unknown answer, so option is undefined
      // and the optional chaining below skips the click
      const answerIndex = labels.indexOf(answer);
      const option = options[answerIndex];

      option?.click();
    }

So we can do something like this for all the fields to populate the input object we’ll send to the LLM in the prompt.
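I haven't shown what `sendQuestionsToLLM` does inside, so here's one possible shape for it. Treat it as a sketch: unlike the one-argument call above, this version also takes the resume data and a `callModel` function, which is a hypothetical stand-in for whatever LLM client you use (it receives a prompt string and resolves to the model's raw text reply).

```javascript
// Sends the questions to a model and returns { answers }, one entry per question.
// An entry is null when the reply was unusable for that question.
async function sendQuestionsToLLM(questions, resumeData, callModel) {
  const prompt = [
    "Answer the job application questions below using only the candidate data.",
    'Reply with JSON shaped like {"answers": ["..."]}, one answer per question, in order.',
    `Candidate data: ${JSON.stringify(resumeData)}`,
    `Questions: ${JSON.stringify(questions)}`,
  ].join("\n");

  const reply = await callModel(prompt);

  let parsed;
  try {
    parsed = JSON.parse(reply);
  } catch {
    // The model did not return valid JSON, so give up on every question
    return { answers: questions.map(() => null) };
  }

  const raw = Array.isArray(parsed?.answers) ? parsed.answers : [];
  const answers = questions.map((question, index) => {
    const answer = typeof raw[index] === "string" ? raw[index].trim() : null;
    // For select questions, only accept an answer that is one of the options
    if (question.type === "select" && !question.options.includes(answer)) {
      return null;
    }
    return answer;
  });

  return { answers };
}
```

Returning `null` for anything that doesn't validate means the caller can leave that field for a human instead of submitting a made-up value.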

And with this, all that’s left is clicking the button to submit the application.

I could also do some parsing to detect when this success dialog shows.

I could use a JavaScript API like `MutationObserver` to detect when this element’s visibility changes, like its `display` property changing from 'none' to 'block'.
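A sketch of that idea, assuming the dialog element already exists in the page and is toggled through its inline `style` attribute (I haven't verified that's how LinkedIn does it). The `waitUntilShown` name is mine, and the `ObserverClass` parameter exists only so the function can be exercised outside a browser.

```javascript
// Resolves with `element` once its inline display is no longer "none".
function waitUntilShown(element, ObserverClass = globalThis.MutationObserver) {
  return new Promise((resolve) => {
    const isShown = () => element.style.display !== "none";

    // Already visible, nothing to wait for
    if (isShown()) {
      resolve(element);
      return;
    }

    const observer = new ObserverClass(() => {
      if (isShown()) {
        observer.disconnect(); // stop watching once the dialog is visible
        resolve(element);
      }
    });

    // Only changes to the style attribute are relevant here
    observer.observe(element, { attributes: true, attributeFilter: ["style"] });
  });
}
```

If the dialog is added to the DOM later instead of being unhidden, the observer would need to watch a parent element with `childList: true` instead.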

But with this I’d have successfully auto-applied for the job and I can move on to the next item in the jobs list.

    const listEl = document.querySelector(
      ".scaffold-layout__list ul"
    );

    const listItems = listEl.children;

    for (const item of listItems) {
      // ...
    }

Or the next page of jobs:

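    const page = 1;

    // start goes 0 -> 25 to get to the next page
    // encodeURIComponent keeps spaces in the skill from breaking the URL
    const nextPage = `https://www.linkedin.com/jobs/search/?f_AL=true&keywords=${encodeURIComponent(skills[0])}&f_JT=F&start=${page * 25}`;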