Google’s new AI tool moves us one step closer to the death of the IDE

What if the next generation of developers never opens a code editor?

This is the serious case Google is making with Opal, its new natural-language coding tool.

This isn’t just another no-code tool.

This is a gamble on re-thinking the very idea of coding.

Opal is a visual playground where anyone can build AI-powered “mini apps” without writing a single line of code.

It launched under Google Labs in July 2025.

You tell it what you want—“summarize a document and draft an email reply”—and Opal responds with a working, visual flow. Inputs, model calls, outputs—all wired up. You can tweak steps via a graph interface or just… keep chatting.
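To make the idea of a "flow" concrete, here is a rough sketch of what "inputs, model calls, outputs, all wired up" could look like if you wrote it by hand. This is not Opal's API or its internal format; `Step`, `run_flow`, and `fake_model` are hypothetical names invented for illustration, and the model call is a stub that just echoes its prompt.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    """One node in the flow: reads named values, writes one named value."""
    name: str                # key under which this step's output is stored
    inputs: List[str]        # keys of the values this step consumes
    run: Callable[..., str]  # the work the step performs


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a model call; a real flow would call an LLM."""
    return f"[model output for: {prompt}]"


def run_flow(steps: List[Step], values: Dict[str, str]) -> Dict[str, str]:
    """Run steps in order, feeding each one the outputs of earlier steps."""
    results = dict(values)
    for step in steps:
        args = [results[key] for key in step.inputs]
        results[step.name] = step.run(*args)
    return results


# "Summarize a document and draft an email reply" as two wired-up steps.
flow = [
    Step("summary", ["document"], lambda doc: fake_model(f"Summarize: {doc}")),
    Step("reply", ["summary"], lambda s: fake_model(f"Draft an email reply to: {s}")),
]

result = run_flow(flow, {"document": "Q3 budget report"})
print(result["reply"])
```

The pitch of a tool like Opal is that you never write or see this wiring. You describe the outcome, and the graph of steps is generated and edited for you.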

It’s not an IDE. It’s not even a low-code tool. It’s something stranger:

A conversational, modular AI agent that builds, edits, and is the app.

A big deal

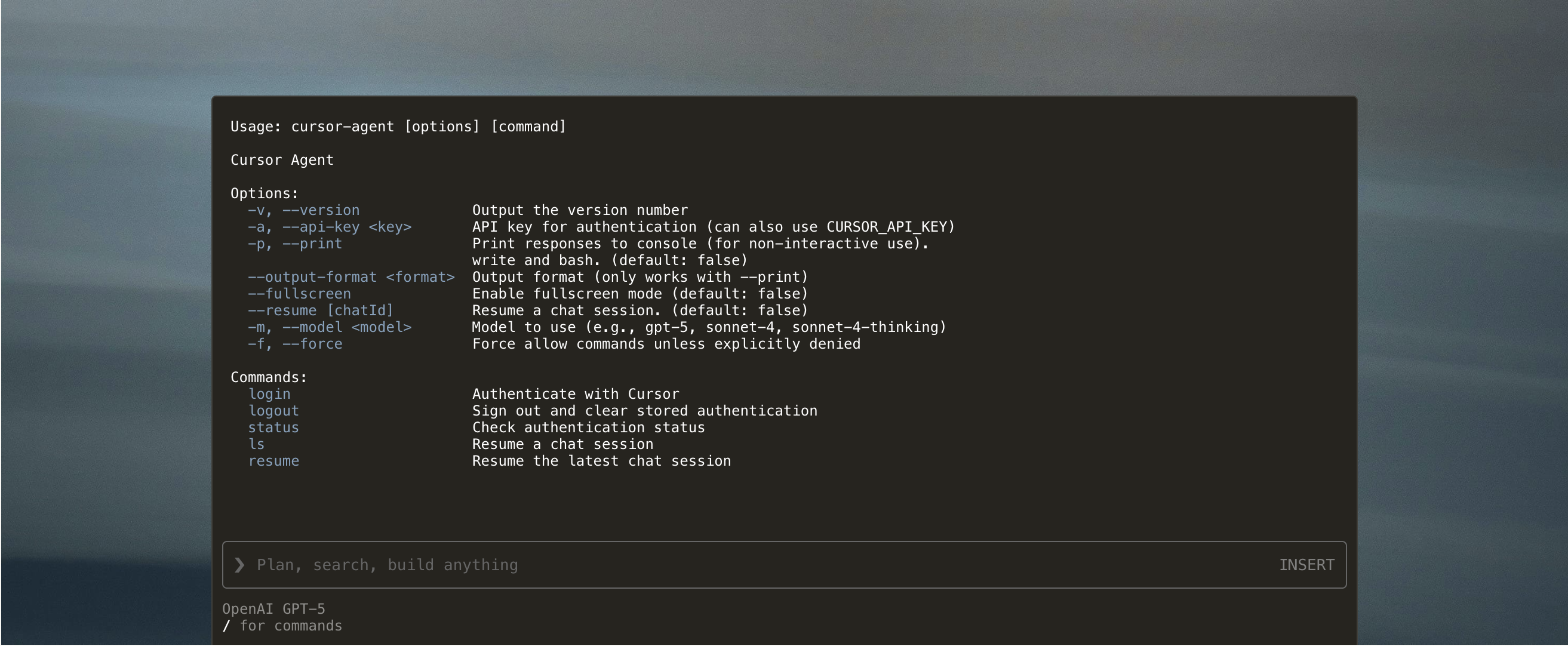

Traditional development tools like IDEs, terminals, and frameworks were all built with the same mindset: humans write code to tell computers what to do.

But Opal says:

Humans describe outcomes. The AI figures out the how.

It’s the opposite of what we’ve spent decades optimizing for:

- No syntax.

- No debugging.

- No deployment targets.

Just outcomes.

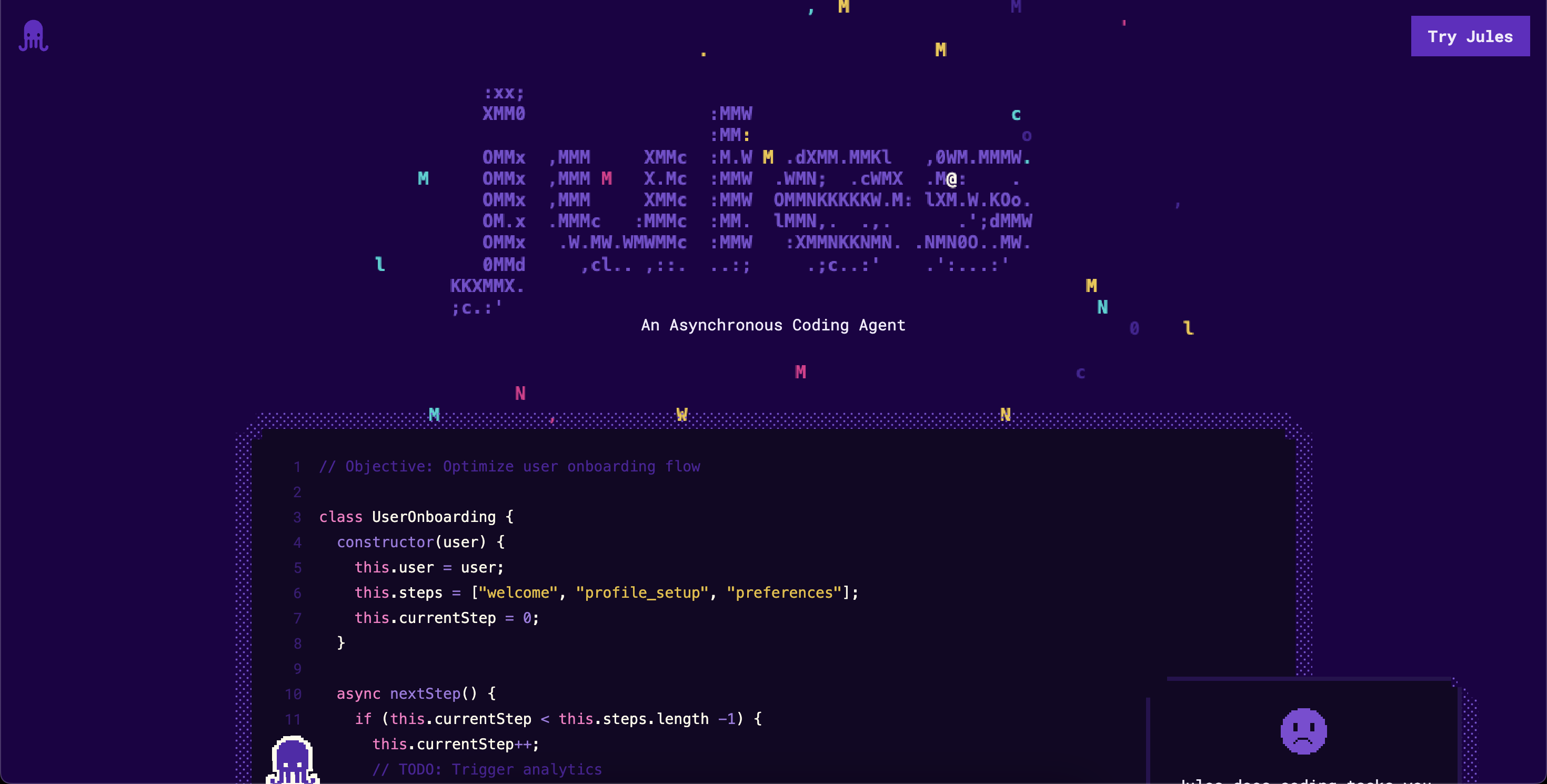

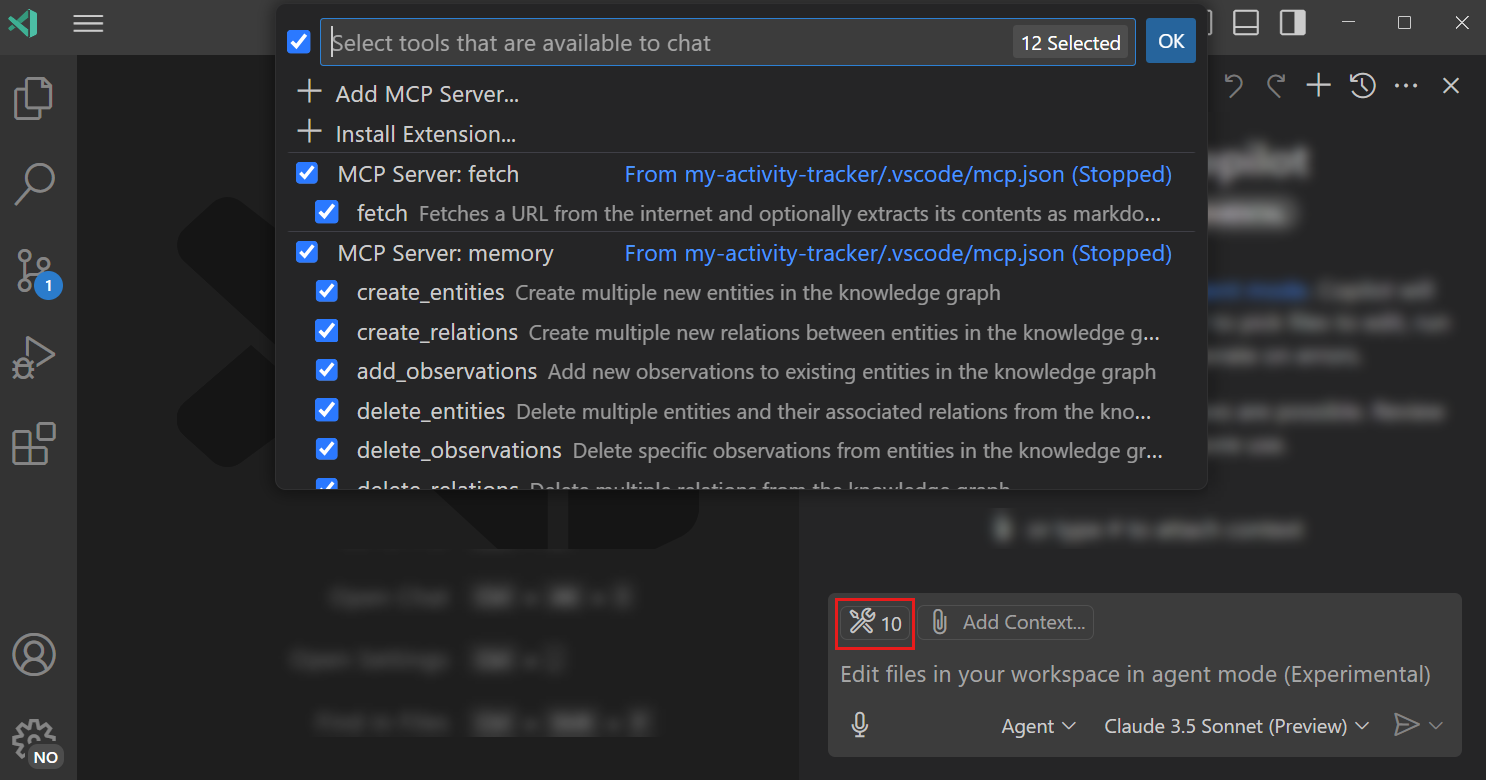

And it’s not alone. Google’s Jules can already work on your repo autonomously. Its Stitch tool can generate UIs from napkin sketches. Stitch + Jules + Opal = a future where the IDE becomes invisible.

We see something similar, to a lesser but still important extent, in tools like OpenAI Codex.

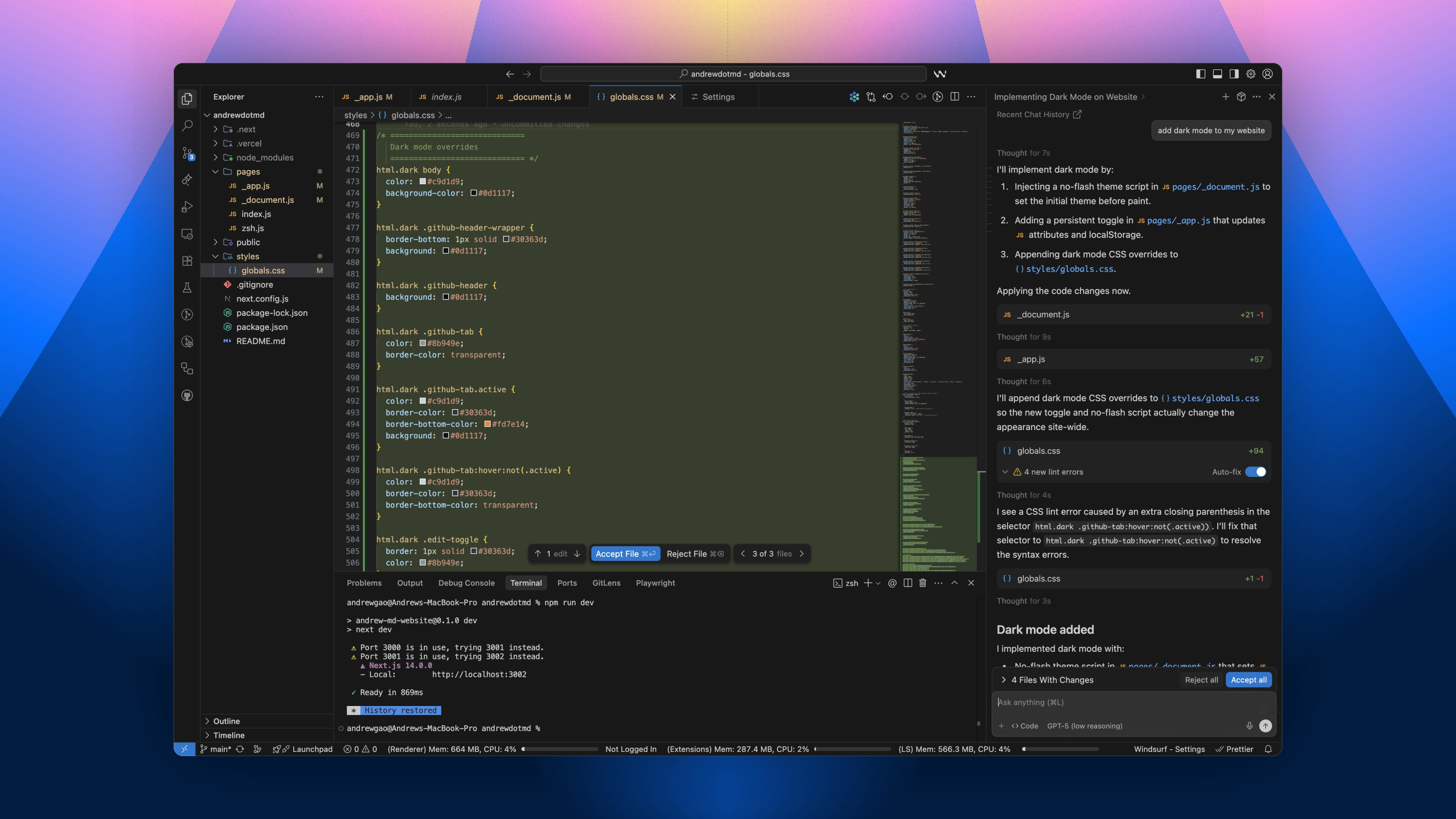

For the first time in the history of software development, we can make sweeping changes to a codebase without ever opening an IDE or touching the code.

Opal vs IDEs

Where an IDE assumes:

- You know the language

- You own the repo

- You debug with your brain

- You ship and maintain your code

Opal assumes:

- You don’t need to know how anything works

- You want it running now

- The AI handles the logic

- The environment is the product

It’s the Uber of programming: you don’t need to build the car. You just say where you want to go.

Confidence

The tradeoff:

- Opal is fast but opaque.

- Code is slow but transparent.

There’s no Git. No static typing. No test suite. You’re trusting the AI to do the right thing—and if it breaks, you might not even know why.

But that’s the point. This isn’t supposed to do what an IDE does.

This is supposed to make you forget you ever needed one.

For that to happen, we need a very high level of confidence that the changes being made are exactly what we specified, and that every ambiguity has been accounted for.

Will code become obsolete?

Not yet. Not for large systems. Not for fine-tuned control.

But Opal shows us a real possibility:

- That small tools can be spoken into existence.

- That AI will eat the scaffolding, the glue code, the boring parts.

- That someday, even real products might be built in layers of AI-on-AI—no React, no Docker, no IDE in sight.

It’s not just no-code. It’s post-code.

Welcome to the brave new world. Google’s already building it.