Developers are absolutely loving the new GPT-5 (definitely not all of them though, ha ha).

It’s elevating our software development capabilities to a whole new level.

Everything is getting so much more effortless now:

You’ll see how easily it built the website from an extremely detailed prompt, from start to finish:

On SWE-bench Verified, which tests real GitHub issues inside real repos, GPT-5 hits 74.9% — the highest score to date.

Some people seem to really hate GPT-5 tho…

“SO SLOW!”

“HORRIBLE!”:

Not too sure what slowness they’re talking about.

I actually thought it was noticeably faster than previous models when I first tried it in ChatGPT. Maybe placebo?

On Aider Polyglot, which measures how well a model edits code via diffs, GPT-5 reaches 88%.

“BLOATED”.

“WASTES TOKENS”.

GPT-5 can chain tool calls, recover from errors, and follow contracts — so it can scaffold a service, run tests, fix failures, and explain what changed, all without collapsing mid-flow.

“CLUELESS”.

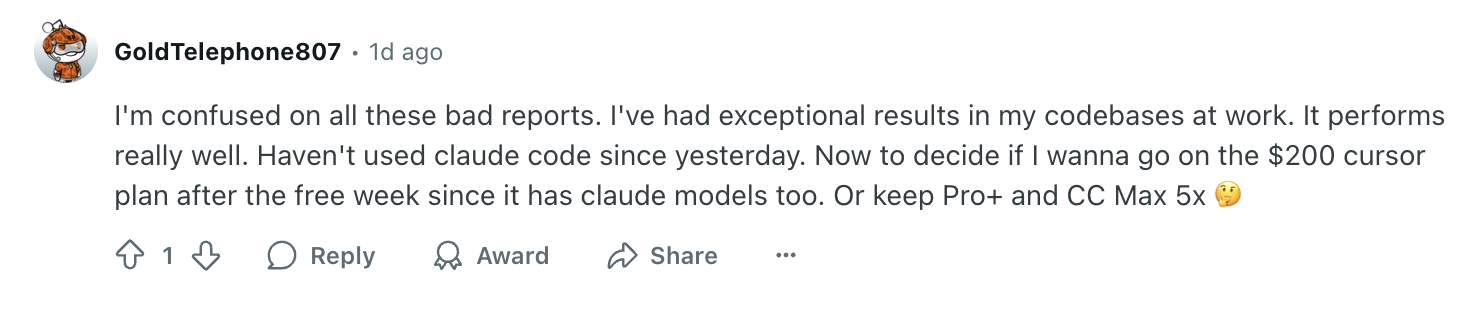

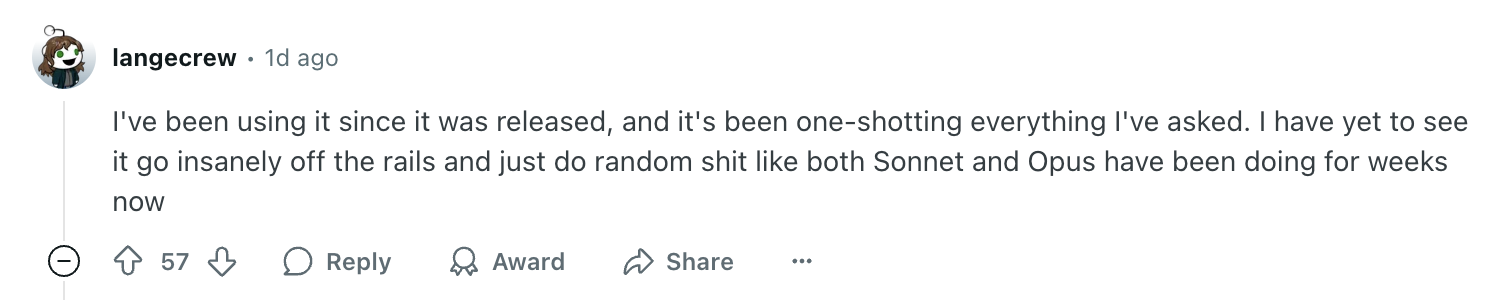

But for many others these higher benchmark scores aren’t just theoretical: GPT-5 is making a real impact in real codebases for real developers.

“Significantly better”:

See how easily JetBrains’ Junie assistant used GPT-5 to make this:

“The best”

“Sonnet level”

It’s looking especially good for frontend development, above all for designing beautiful UIs.

In OpenAI’s tests, devs preferred GPT-5 over o3 ~70% of the time for frontend tasks. You can hand it a one-line brief and get a polished React + Tailwind UI — complete with routing, state, and styling that looks like it came from a UI designer.

GPT-5’s large token limits give your IDE room to pull in far more context from your codebase, which helps it produce more accurate results.

With ~400K total token capacity (272K input, 128K reasoning/output), GPT-5 can take entire subsystems — schemas, services, handlers, tests — and make precise changes. Long-context recall is stronger — so it references the right code instead of guessing.
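To get a feel for what fits in that window, here is a minimal sketch that estimates whether a prompt plus a set of files stays under the 272K input limit quoted above. The 4-characters-per-token ratio is a rough rule of thumb, not a real tokenizer, and the function names are made up for illustration.

```python
# Input limit quoted in the text above; treat it as approximate.
MAX_INPUT_TOKENS = 272_000


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate. The chars-per-token ratio is a heuristic, not an exact count."""
    return int(len(text) / chars_per_token) + 1


def fits_in_context(files: dict[str, str], prompt: str) -> tuple[bool, int]:
    """Return (fits, estimated_input_tokens) for a prompt plus the given files.

    `files` maps a file path to its contents. Both helpers are hypothetical,
    not part of any SDK.
    """
    total = estimate_tokens(prompt)
    total += sum(estimate_tokens(body) for body in files.values())
    return total <= MAX_INPUT_TOKENS, total


if __name__ == "__main__":
    demo_files = {
        "schema.sql": "CREATE TABLE users (id INT);" * 100,
        "handlers.py": "def handle(request):\n    return 200\n" * 500,
    }
    ok, used = fits_in_context(demo_files, "Add an email column and update the handlers.")
    print(f"fits={ok}, estimated input tokens={used}")
```

For anything close to the limit, swap the heuristic for a real tokenizer count before trusting the result.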

GPT-5 is more candid when it lacks context and less prone to fabricate results — critical if you’re letting it touch production code.

For example, it might ask you for more information instead of making stuff up, or instead of assuming you meant something else that it’s more familiar with (annoying).

gpt-5, gpt-5-mini, and gpt-5-nano all share the same coding features, with pricing scaled by power.

The sweet spot for most devs: use minimal reasoning for micro-edits and bump it up for heavy refactors or migrations.
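Here is one way that rule of thumb could look in code. This is a sketch under assumptions: the task categories and file-count thresholds are invented for illustration, and the request shape (a `reasoning` object with an `effort` field) is modeled on my understanding of OpenAI’s Responses API, so check it against the current docs before relying on it. Nothing here calls the network; it only builds a payload.

```python
# Hypothetical task categories that count as "heavy" work.
HEAVY_TASKS = {"refactor", "migration"}


def pick_reasoning_effort(task_kind: str, files_touched: int) -> str:
    """Pick an effort level: minimal for micro-edits, higher for big changes.

    The thresholds are arbitrary illustration values, not recommendations
    from OpenAI.
    """
    if task_kind in HEAVY_TASKS or files_touched > 5:
        return "high"
    if files_touched > 1:
        return "medium"
    return "minimal"


def build_request(prompt: str, task_kind: str, files_touched: int,
                  model: str = "gpt-5") -> dict:
    """Build a request payload as a plain dict (assumed shape, see lead-in)."""
    return {
        "model": model,
        "input": prompt,
        "reasoning": {"effort": pick_reasoning_effort(task_kind, files_touched)},
    }


if __name__ == "__main__":
    print(build_request("Rename `usr` to `user` in utils.py", "rename", 1))
    print(build_request("Move the ORM layer to async", "migration", 40))
```

Keeping the choice in one small function makes it easy to tune the thresholds once you see how cost and latency shake out on your own codebase.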

GPT-5 makes coding assistance feel dependable.

It handles the boring 80% so you can focus on the valuable 20%, and it does it with context, precision, and a lot less hand-holding.