OpenAI just dropped a brand new model to try to rise above the DeepSeek craze and get back into the spotlight.

And it looks like they’ve been pretty successful with that…

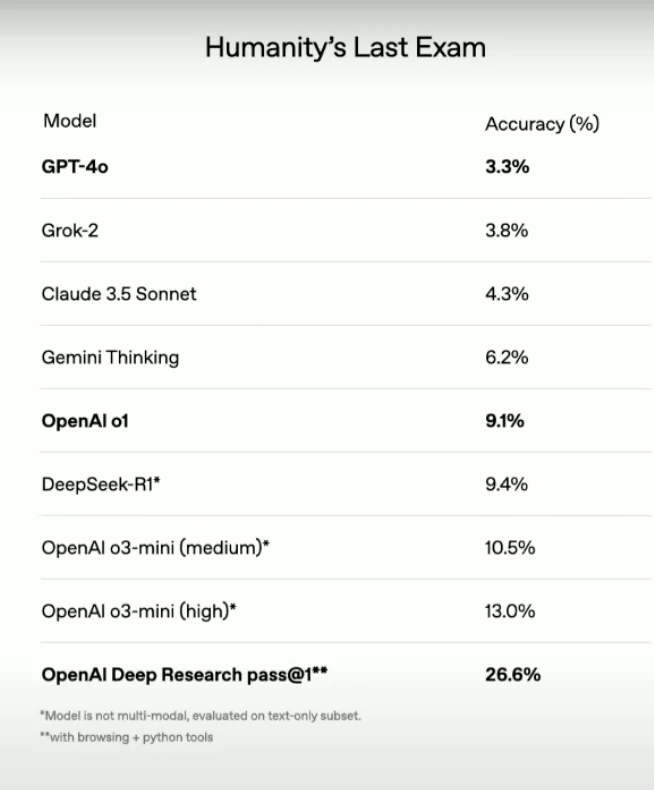

o3-mini breaks boundaries and overtakes DeepSeek again in key areas, including an incredibly tough AI benchmark on which no other model could reach even 10% accuracy…

They’re doubling down on their questionable naming scheme with o3-mini, a seemingly faster and smaller version of the o3 that was announced a few weeks back.

And the biggest deal here is the price — it’s free to use.

It shows just how major the DeepSeek blow was: they went from confident delusions of a $2000 per month subscription for o3 to letting anyone access it.

They’ve gotten a huge huge reality check from the competition.

Now they’re going to be thinking long and hard about pricing anytime a new model comes out.

So there are actually 3 sub-tiers for the o3 mini-tier: low, medium, and high.

Pretty cool to see the smaller o3-mini outperform even the default version of o1 in areas like coding and math.

But DeepSeek is still better in certain areas, like coding.

But o3-mini did outclass DeepSeek on an extremely difficult AI benchmark called Humanity’s Last Exam (HLE).

HLE is basically a set of 3,000 questions across dozens of subjects like math and natural science — it’s like the OG benchmark to check just how advanced a model is at reasoning and knowledge.

So it’s free for everyone right now, but of course not exactly…

It still has a usage cap, just like all the other super expensive models such as o1, and even paying Plus users are capped.

And you only get o3-mini low and o3-mini medium for free.

Sneaky clever naming — they can still technically say they made “o3” free, when you don’t even get the best sub-tier of the mini-tier of o3.

But I guess this just means we’ll be getting a new DeepSeek model soon (lol)? Since they apparently trained their R1 model with tons of OpenAI model data.

And couldn’t OpenAI also “steal” this new DeepSeek training method to create a derived model using o3 data? That could even be more accurate than DeepSeek since they’d have access to the data directly.

It’s clear everyone is really buckling up now… Google too announced a model shortly after the DeepSeek news — Gemini 2.0 Pro.

Simply couldn’t ignore the speed at which this thing blew up… #1 in App Store and Play Store in like how many days? No way…

One thing we can see from this: investing so much money to stay ahead in the AI race and maintain market share MAY not be worth it after all.

If it’s always going to be this easy for competitors to catch up to new models, and at lower prices, will they ever get a worthwhile ROI from all this investment?

How much longer can they continue to justify the value to investors and keep getting all those billions and billions?

And clearly there’s no other real moat to keep users locked in, as we saw with how people rushed to download DeepSeek.

It may not be long before we start seeing ChatGPT experience enshittification as they try to squeeze out any cash possible.

Maybe we’ll start seeing ads in ChatGPT like Bing Chat has now, ha ha! It’s even kind of surprising that Google’s Gemini still hasn’t gotten any ads at this point.

Unless they figure out how to cut model costs drastically, they’re going to have to keep burning more cash while still keeping prices as low as possible — hoping not to get outshined by the competition so they can keep getting more cash to burn.

One thing’s clear: the next few months will decide a lot.